If I just browse a few pages in the app, memory usage sits at around 500MB. Many of these pages access the database, but at this point I only have a couple of rows in each of roughly 10 tables, mostly storing strings and some small icons of less than 50KB each.

The real problem occurs when I download a file. The file is roughly 140MB and is stored as a varbinary(MAX) column in the database. The memory usage suddenly rises to 1.3GB for a split second and then falls back to 1GB. The code for that action is here:

    public ActionResult DownloadIpa(int buildId)
    {
        var build = _unitOfWork.Repository<Build>().GetById(buildId);
        var buildFiles = _unitOfWork.Repository<BuildFiles>().GetById(buildId);

        if (buildFiles == null)
        {
            throw new HttpException(404, "Item not found");
        }

        var app = _unitOfWork.Repository<App>().GetById(build.AppId);
        var fileName = app.Name + ".ipa";

        app.Downloads++;
        _unitOfWork.Repository<App>().Update(app);
        _unitOfWork.Save();

        return DownloadFile(buildFiles.Ipa, fileName);
    }

    private ActionResult DownloadFile(byte[] file, string fileName, string type = "application/octet-stream")
    {
        if (file == null)
        {
            throw new HttpException(500, "Empty file");
        }

        if (fileName.Equals(""))
        {
            throw new HttpException(500, "No name");
        }

        return File(file, type, fileName);
    }

On my local computer, if I don't do anything, the memory usage stays at 1GB. If I then go back and navigate to some pages, it falls back down to 500MB.

On the deployment server, it stays at 1.6GB after the first download no matter what I do. I can force the memory usage to increase by continually downloading files until it reaches 3GB, where it drops back down to 1.6GB.
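One way to check whether the retained memory is live data or simply heap that has not been collected yet is to compare the managed heap size before and after a forced collection. The sketch below is only an illustration under stated assumptions, not code from the app: `LargeBufferDemo`, `LoadFile` and `Measure` are hypothetical names, and the zero-filled array merely stands in for `buildFiles.Ipa`. Arrays of 85,000 bytes or more are allocated on the large object heap, which is only collected during generation 2 collections, so a buffer of this size can stay resident for a while after the request has finished.

```csharp
using System;

public static class LargeBufferDemo
{
    // Hypothetical stand-in for reading BuildFiles.Ipa into a byte[].
    public static byte[] LoadFile(int sizeInBytes)
    {
        return new byte[sizeInBytes];
    }

    // Returns the managed heap size in bytes at three points:
    // [0] before loading, [1] while the buffer is still referenced,
    // [2] after the reference is dropped and a collection is forced.
    public static long[] Measure(int sizeInBytes)
    {
        long before = GC.GetTotalMemory(true);

        byte[] file = LoadFile(sizeInBytes);
        long during = GC.GetTotalMemory(false);
        GC.KeepAlive(file); // keep the buffer reachable until it has been measured

        file = null;
        long after = GC.GetTotalMemory(true);

        return new[] { before, during, after };
    }

    public static void Main()
    {
        const int size = 140 * 1024 * 1024; // roughly the size of the .ipa file
        long[] m = Measure(size);

        Console.WriteLine("before: {0:N0} bytes", m[0]);
        Console.WriteLine("during: {0:N0} bytes", m[1]);
        Console.WriteLine("after:  {0:N0} bytes", m[2]);
    }
}
```

If the "after" figure drops back close to the "before" figure, the buffer itself is collectable, and the numbers shown by the process monitor may reflect memory the runtime is holding on to rather than a leak in the application code.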

In every controller, I have overridden the Dispose() method like so:

    protected override void Dispose(bool disposing)
    {
        _unitOfWork.Dispose();
        base.Dispose(disposing);
    }

That calls the unit of work's Dispose(), which is:

    public void Dispose()
    {
        Dispose(true);
        GC.SuppressFinalize(this);
    }

    public void Dispose(bool disposing)
    {
        if (!_disposed)
        {
            if (disposing)
            {
                _context.Dispose();
            }
        }

        _disposed = true;
    }

So my unit of work should be disposed every time the controller is disposed. I am using Unity, and I register the unit of work with a HierarchicalLifetimeManager.
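To sanity-check that claim in isolation, here is a minimal sketch of the same dispose pattern that runs without Entity Framework or Unity. The names `FakeContext`, `UnitOfWorkSketch` and `DisposeDemo` are hypothetical stand-ins, not types from the app. The fake context only counts how often it is disposed, which shows that the `_disposed` guard makes repeated calls harmless.

```csharp
using System;

// Hypothetical stand-in for the EF context; it only counts Dispose calls.
public sealed class FakeContext : IDisposable
{
    public int DisposeCount { get; private set; }

    public void Dispose()
    {
        DisposeCount++;
    }
}

// Same shape as the unit of work's Dispose pattern shown above.
public class UnitOfWorkSketch : IDisposable
{
    private readonly FakeContext _context;
    private bool _disposed;

    public UnitOfWorkSketch(FakeContext context)
    {
        _context = context;
    }

    public void Dispose()
    {
        Dispose(true);
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (_disposed)
        {
            return; // already disposed, nothing left to release
        }

        if (disposing)
        {
            _context.Dispose();
        }

        _disposed = true;
    }
}

public static class DisposeDemo
{
    // Disposes the unit of work twice and reports how many times
    // the underlying context was actually disposed.
    public static int Run()
    {
        var context = new FakeContext();
        var unitOfWork = new UnitOfWorkSketch(context);

        unitOfWork.Dispose();
        unitOfWork.Dispose(); // e.g. once from the controller, once from the container

        return context.DisposeCount;
    }
}
```

`DisposeDemo.Run()` returns 1, so the context is released exactly once even if both the controller and the container end up disposing the unit of work. Whether the real context is actually reached on every request would still have to be confirmed with a breakpoint or log line in the app itself.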

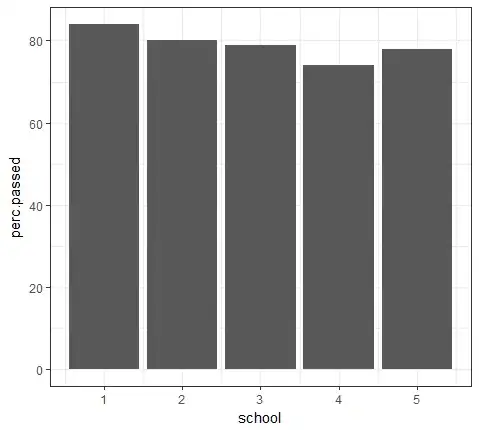

Here are a few of screenshots from the Profiler:

I believe this could be the problem, or I may be going down the wrong track. Why would Find() use 300MB?

EDIT:

Repository:

    public class Repository<TEntity> : IRepository<TEntity> where TEntity : class
    {
        internal IDbContext Context;
        internal IDbSet<TEntity> DbSet;

        public Repository(IDbContext context)
        {
            Context = context;
            DbSet = Context.Set<TEntity>();
        }

        public virtual IEnumerable<TEntity> GetAll()
        {
            return DbSet.ToList();
        }

        public virtual TEntity GetById(object id)
        {
            return DbSet.Find(id);
        }

        public TEntity GetSingle(Expression<Func<TEntity, bool>> predicate)
        {
            return DbSet.Where(predicate).SingleOrDefault();
        }

        public virtual RepositoryQuery<TEntity> Query()
        {
            return new RepositoryQuery<TEntity>(this);
        }

        internal IEnumerable<TEntity> Get(
            Expression<Func<TEntity, bool>> filter = null,
            Func<IQueryable<TEntity>, IOrderedQueryable<TEntity>> orderBy = null,
            List<Expression<Func<TEntity, object>>> includeProperties = null)
        {
            IQueryable<TEntity> query = DbSet;

            if (includeProperties != null)
            {
                // Include returns a new query, so the result must be assigned back.
                includeProperties.ForEach(i => { query = query.Include(i); });
            }

            if (filter != null)
            {
                query = query.Where(filter);
            }

            if (orderBy != null)
            {
                query = orderBy(query);
            }

            return query.ToList();
        }

        public virtual void Insert(TEntity entity)
        {
            DbSet.Add(entity);
        }

        public virtual void Update(TEntity entity)
        {
            DbSet.Attach(entity);
            Context.Entry(entity).State = EntityState.Modified;
        }

        public virtual void Delete(object id)
        {
            var entity = DbSet.Find(id);
            Delete(entity);
        }

        public virtual void Delete(TEntity entity)
        {
            if (Context.Entry(entity).State == EntityState.Detached)
            {
                DbSet.Attach(entity);
            }

            DbSet.Remove(entity);
        }
    }

EDIT 2:

I ran dotMemory for a variety of scenarios and this is what I got.

The red circles indicate that sometimes there are multiple rises and drops in memory usage during a single page visit. The blue circle indicates the download of a 40MB file. The green circle indicates the download of a 140MB file. Furthermore, the memory usage often keeps increasing for a few more seconds, even though the page itself loads instantly.