I have seen several questions on Stack Overflow about fitting a log-normal distribution, but there are still two things I need clarified.

I have sample data whose logarithm follows a normal distribution, so I can fit it with `scipy.stats.lognorm.fit` (i.e. a log-normal distribution).
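For reference, the SciPy `lognorm` documentation describes a variable X whose *natural* logarithm is normal with mean μ and standard deviation σ as `lognorm(s=σ, scale=exp(μ))`, with `loc` shifting the whole distribution. A minimal check of that mapping, assuming `loc = 0` and made-up values μ = 2.0, σ = 0.5 (not taken from my data):

```python
import numpy as np
from scipy import stats

# Hypothetical parameters of the underlying normal (not fitted to my data)
mu, sigma = 2.0, 0.5

# SciPy parameterization: shape s = sigma, scale = exp(mu), loc = 0
dist = stats.lognorm(s=sigma, loc=0, scale=np.exp(mu))

x = np.linspace(0.5, 30.0, 200)
# P(X <= x) should equal P(ln X <= ln x) when ln X ~ N(mu, sigma)
cdf_lognorm = dist.cdf(x)
cdf_via_normal = stats.norm.cdf(np.log(x), loc=mu, scale=sigma)

print(np.allclose(cdf_lognorm, cdf_via_normal))  # True
```

If that mapping holds, `lognorm.fit` expects the raw (positive) values, and passing it already log-transformed values treats the log of those values as normal.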

The fit works fine and also gives me the standard deviation. Here is my code, followed by the results.

```python
import numpy as np
from scipy import stats

# `data` is my array of positive measurements, loaded elsewhere
sample = np.log10(data)  # take the log10 of the data

# lognorm.fit returns (shape, loc, scale); I store them as scatter, loc, mean
scatter, loc, mean = stats.lognorm.fit(sample)

x_fit = np.linspace(13.0, 15.0, 100)
pdf_fitted = stats.lognorm.pdf(x_fit, scatter, loc, mean)  # PDF of the fitted distribution

print("scatter for data is %s" % scatter)
print("mean for data is %s" % mean)
```

The result:

```
scatter for data is 0.186415047243
mean for data is 1.15731050926
```

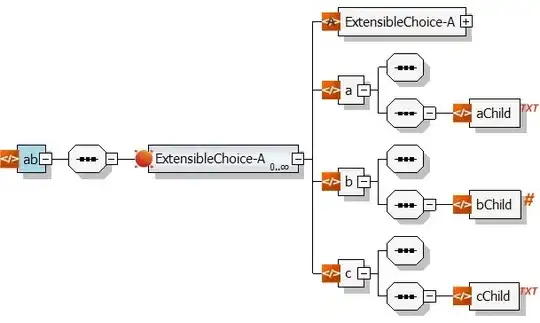

From the image you can clearly see that the mean is around 14.2, but the fit gives me 1.15. Why is that? Even log(1.15) is nowhere near 14.2.
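The third value returned by `lognorm.fit` is what SciPy calls `scale`. With a free `loc`, the fitted distribution's median is `loc + scale` and its mean is `loc + scale * exp(s**2 / 2)`, so `scale` on its own need not be anywhere near 14.2. A sketch that prints all three fitted values plus the frozen distribution's mean and median; since my data isn't shown, it uses synthetic log10 values drawn from a shifted log-normal (loc 13.0, scale 1.15, s 0.19 are assumed values chosen only so the histogram peaks near 14.2), and the exact fitted numbers depend on SciPy's optimizer:

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
# Synthetic stand-in for np.log10(data): shifted log-normal peaking near 14.2 (assumed values)
sample = 13.0 + rng.lognormal(mean=np.log(1.15), sigma=0.19, size=2000)

# Same call as in my code; the three values are (shape, loc, scale)
s, loc, scale = stats.lognorm.fit(sample)
fitted = stats.lognorm(s, loc=loc, scale=scale)

print("shape s =", s)
print("loc     =", loc)
print("scale   =", scale)  # the value printed as "mean" in my code
print("mean of fitted distribution   =", fitted.mean())
print("median of fitted distribution =", fitted.median())
```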

In this post and in this question, it is stated that log(mean) is the actual mean.
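For reference, the standard relations between SciPy's `lognorm` parameters and the underlying normal, assuming `loc = 0` and natural logarithms unless marked otherwise:

$$
\ln X \sim \mathcal{N}(\mu, \sigma^2)
\quad\Longleftrightarrow\quad
X \sim \texttt{lognorm}(s=\sigma,\ \texttt{scale}=e^{\mu})
$$

$$
\operatorname{median}(X) = e^{\mu}, \qquad
\operatorname{E}[X] = e^{\mu + \sigma^2/2}, \qquad
\ln(\texttt{scale}) = \mu
$$

$$
\log_{10} X \sim \mathcal{N}(m, t^2)
\;\Longrightarrow\;
\mu = m \ln 10, \qquad \sigma = t \ln 10
$$

Read this way, the "log(mean)" in those posts corresponds to ln(scale) = μ in natural-log units, which only applies when `loc` is 0.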

But as you can see from my code above, I obtained the fit using `sample = np.log10(data)`, and it seems to fit well. However, when I tried

```python
sample = data
pdf_fitted = stats.lognorm.pdf(x_fit, scatter, loc, np.log10(mean))
```

the fitted PDF no longer matches the data.
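Since it is log10(data) that looks normal, one consistent option (a sketch, not necessarily what the linked posts intend) is to fit a plain normal to log10(data), so its location parameter is read on the same axis as the plot, and convert to the equivalent log-normal for the raw data using the ln 10 factor. Synthetic data with an assumed log10 mean of 14.2 and spread of 0.2 stands in for my data:

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)
# Synthetic stand-in for `data`: log10(data) ~ N(14.2, 0.2) (assumed values)
data = 10.0 ** rng.normal(loc=14.2, scale=0.2, size=2000)

log_sample = np.log10(data)
mu10, sigma10 = stats.norm.fit(log_sample)  # MLE mean and std of log10(data)

# Equivalent log-normal for the raw data: natural-log parameters, loc fixed at 0
s = sigma10 * np.log(10)
scale = 10.0 ** mu10  # same as exp(mu10 * ln 10)
raw_dist = stats.lognorm(s, loc=0, scale=scale)

x_fit = np.linspace(13.0, 15.0, 100)
pdf_log10 = stats.norm.pdf(x_fit, mu10, sigma10)  # PDF on the log10 axis, as in my plot

print("mean of log10(data):", mu10)
print("median of data from lognorm:", raw_dist.median())  # equals 10**mu10
```

The two PDFs live on different axes: a density over the raw values is not the same curve as a density over log10 of those values, so one should not be plotted against the other's x-axis.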

1) Why is the mean not 14.2?

2) How do I fill the region, or draw vertical lines, showing the 1σ confidence region?
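A possible approach, assuming a normal fit to log10(data) (again on a synthetic stand-in with assumed values): the central interval holding about 68.27% of the fitted distribution comes from the frozen distribution's `interval` method, which for a normal is exactly μ ± σ. Matplotlib's `axvspan` shades that band and `axvline` marks its edges and centre:

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so this runs headless; remove it to use plt.show()
import matplotlib.pyplot as plt
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
# Synthetic stand-in for np.log10(data) (assumed values)
log_sample = rng.normal(loc=14.2, scale=0.2, size=2000)

mu, sigma = stats.norm.fit(log_sample)
fitted = stats.norm(mu, sigma)

# Probability mass within one standard deviation of a normal (about 0.6827)
one_sigma_mass = stats.norm.cdf(1) - stats.norm.cdf(-1)
lo, hi = fitted.interval(one_sigma_mass)  # central interval; equals mu - sigma, mu + sigma here

fig, ax = plt.subplots()
ax.hist(log_sample, bins=50, density=True, alpha=0.5, label="log10(data)")
x_fit = np.linspace(13.0, 15.0, 200)
ax.plot(x_fit, fitted.pdf(x_fit), label="normal fit")
ax.axvspan(lo, hi, color="grey", alpha=0.3, label=r"1$\sigma$ region")  # shaded band
ax.axvline(mu, color="k", linestyle="--", label="mean")
ax.axvline(lo, color="k", linestyle=":")  # lower 1-sigma edge
ax.axvline(hi, color="k", linestyle=":")  # upper 1-sigma edge
ax.legend()
fig.savefig("one_sigma_region.png")
```

To shade only the area under the curve rather than the full plot height, `ax.fill_between(x_fit, fitted.pdf(x_fit), where=(x_fit >= lo) & (x_fit <= hi))` is the usual alternative to `axvspan`.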