This answer is for PostgreSQL, which the OP asked about in comments to the original question.

Data integrity is the most important thing to me. So I'm reluctant to store aggregate values unless a) performance is poor, and b) the dbms can guarantee the aggregate values are correct.

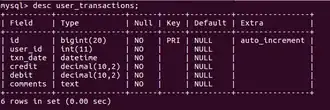

I started with this table.

create table transactions (

trans_id serial primary key,

cust_id integer not null, -- foreign key, references customers, not shown

trans_time timestamp not null default current_timestamp,

trans_amt numeric(14,2) not null

);

create index on transactions (cust_id);

I chose timestamp instead of date, because applications like this usually need to support timestamps, and because in the general case it should perform worse than dates. If we get good performance with timestamps, we should be able to get good performance with dates. I did not assume that timestamps for a single customer were unique.

I loaded 20 million rows of random-ish data into this table, and then I updated the statistics. The data included positive and negative amounts. The amounts were in even hundreds of dollars to make visual inspection easier.

One of the more common queries in this kind of application involves returning a register for a single customer--all transactions with a running balance.

Here's the raw data for customer 128 for the first three days.

cust_id trans_time trans_amt

--

128 2014-01-01 08:36:09 200.00

128 2014-01-01 14:18:10 200.00

128 2014-01-01 14:26:56 0.00

128 2014-01-01 18:17:31 400.00

128 2014-01-01 20:18:53 100.00

128 2014-01-02 00:10:35 0.00

128 2014-01-02 01:44:26 300.00

128 2014-01-02 15:49:31 -300.00

128 2014-01-03 00:33:23 400.00

128 2014-01-03 11:55:13 -200.00

128 2014-01-03 11:56:34 -100.00

128 2014-01-03 14:58:42 -400.00

128 2014-01-03 17:31:11 0.00

We should expect these sums for the first three days.

2014-01-01 900.00

2014-01-02 0.00

2014-01-03 -300.00

And the running balance for the first three days should look like this.

2014-01-01 900.00

2014-01-02 900.00

2014-01-03 600.00

A register of daily balances

select

cust_id

, trans_date

, sum(daily_amt) over (partition by cust_id order by trans_date) daily_balance

from (select

cust_id

, trans_time::date trans_date

, sum(trans_amt) daily_amt

from transactions

where cust_id = 128

group by cust_id, trans_date) x

order by cust_id, trans_date;

cust_id trans_date daily_balance

--

128 2014-01-01 900.00

128 2014-01-02 900.00

128 2014-01-03 600.00

. . .

Execution plan for the register

The execution plan shows that the query above runs in 12 ms. I think that's reasonable for this kind of application, but I might be able to reduce run time below 12 ms by indexing an expression (trans_time::date) or by a compound index.

"WindowAgg (cost=7232.14..7252.94 rows=1040 width=40) (actual time=11.728..12.093 rows=294 loops=1)"

" -> Sort (cost=7232.14..7234.74 rows=1040 width=40) (actual time=11.700..11.733 rows=294 loops=1)"

" Sort Key: transactions.cust_id, ((transactions.trans_time)::date)"

" Sort Method: quicksort Memory: 38kB"

" -> HashAggregate (cost=7156.62..7169.62 rows=1040 width=16) (actual time=11.392..11.466 rows=294 loops=1)"

" -> Bitmap Heap Scan on transactions (cost=39.66..7141.89 rows=1964 width=16) (actual time=0.839..9.753 rows=1961 loops=1)"

" Recheck Cond: (cust_id = 128)"

" -> Bitmap Index Scan on transactions_cust_id_idx (cost=0.00..39.17 rows=1964 width=0) (actual time=0.501..0.501 rows=1961 loops=1)"

" Index Cond: (cust_id = 128)"

"Total runtime: 12.272 ms"