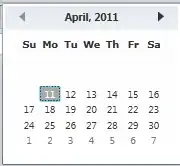

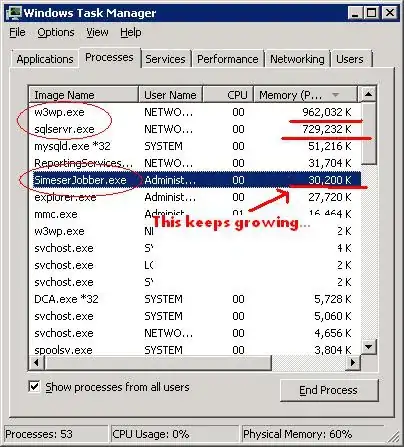

The trick here is to check the requests that are sent when you click a link to view another page. You can do this with Chrome's inspection tool (press F12) or with the Firebug extension in Firefox. I will be using Chrome's inspection tool in this answer. See below for my settings.

Now, what we want to see is either a GET request to another page or a POST request that changes the page. While the tool is open, click on a page number. For a brief moment, only one request will appear, and it's a POST request. All the other requests will quickly follow as the page fills in. See below for what we're looking for.

Click on that POST request. It should bring up a sub-window of sorts that has tabs. Click on the Headers tab. This tab lists the request headers, which are pretty much the identification stuff that the other side (the site, for example) needs from you to be able to respond (someone else can explain this much better than I can).

Whenever the URL has variables like page numbers, location markers, or categories, more often than not the site uses query strings. Long story short, a query string is the list of `name=value` pairs after the `?` in the URL. The site reads those values to decide what to send back (often by feeding them into a database query on its side). If this is the case, you can check the request headers for query string parameters. Scroll down a bit and you should find them.
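
If it helps to see the query string outside the browser, here is a minimal sketch using only Python 3's standard library (`urllib.parse`). It just splits the URL from this answer into its parameters; nothing is sent over the network.

```python
from urllib.parse import urlsplit, parse_qs

url = "http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY"

# Everything after the "?" is the query string.
query = urlsplit(url).query

# parse_qs maps each parameter name to a list of its values.
params = parse_qs(query)

print(query)
print(params)
```

These are the same names you should see listed under the query string parameters in the Headers tab.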

As you can see, the query string parameters match the variables in our URL. A little bit below, you can see Form Data with pageNum: 2 beneath it. This is the key.

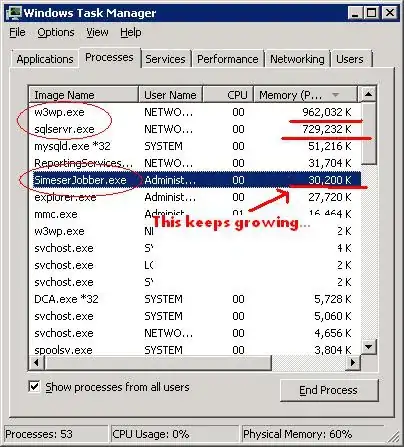

POST requests are more commonly known as form requests because these are the kind of requests made when you submit forms, log in to websites, etc. Basically, pretty much anything where you have to submit information. What most people don't see is that POST requests are sent to a URL too. A good example of this is when you log in to a website and, very briefly, see your address bar morph into some sort of gibberish URL before settling on /index.html or somesuch.
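
To make the "POST requests have a URL too" point concrete, here is a rough sketch with Python 3's standard library. It only builds a request object and inspects it; nothing is actually sent. The `pageNum` value of 2 is the form data seen in the inspector, and encoding it this way assumes the site expects an ordinary URL-encoded form body.

```python
from urllib.parse import urlencode
from urllib.request import Request

url = "http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY"

# Form data is URL-encoded and carried in the request body, not in the URL.
body = urlencode({"pageNum": 2}).encode("ascii")

# Supplying a body makes urllib treat this as a POST request.
# Nothing is sent here; the Request object is only built and inspected.
req = Request(url, data=body)

print(req.get_method())
print(req.full_url)
print(req.data)
```

So the request still targets the same URL; the page number just travels in the body instead of the address bar.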

What the above paragraph basically means is that you can sometimes (but not always) append the form data to your URL as one more query-string parameter, and the site will treat it the same way it treats the data in the POST request. To know the exact string you have to append, click on "view source" next to Form Data.

Test if it works by adding it to the URL.
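
If you would rather build that URL in code than by hand, something like the following should do. `with_page` is a made-up helper name for this sketch, and it assumes the parameter is called `pageNum`, as in the form data above.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def with_page(url, page):
    """Return url with its pageNum query parameter set to page."""
    parts = urlsplit(url)
    # Drop any existing pageNum so the parameter is never duplicated.
    params = [(k, v) for k, v in parse_qsl(parts.query) if k != "pageNum"]
    params.append(("pageNum", str(page)))
    return urlunsplit(parts._replace(query=urlencode(params)))


base = "http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY"
print(with_page(base, 2))
```

Plain string concatenation works just as well here; going through `urlencode` only matters once the values contain characters that need escaping.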

Et voila, it works. Now, the real challenge: getting the last page automatically and scraping all of the pages. Your code is pretty much there. The only things remaining to be done are getting the number of pages, constructing a list of URLs to scrape, and iterating over them.

Modified code is below:

    from bs4 import BeautifulSoup as bsoup
    import requests as rq
    import re

    base_url = 'http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY'
    r = rq.get(base_url)
    # Name the parser explicitly so BeautifulSoup does not have to guess one.
    soup = bsoup(r.text, "html.parser")

    # Use regex to isolate only the links of the page numbers, the ones you click on.
    page_count_links = soup.find_all("a", href=re.compile(r".*javascript:goToPage.*"))
    try:
        # The last page-number link holds the number of pages.
        num_pages = int(page_count_links[-1].get_text())
    except IndexError:
        # No page-number links means there is only one page.
        num_pages = 1

    # Add 1 because range() excludes its upper bound.
    url_list = ["{}&pageNum={}".format(base_url, str(page)) for page in range(1, num_pages + 1)]

    # Open the text file. Use with to save self from grief.
    with open("results.txt", "w") as acct:
        for url_ in url_list:
            print("Processing {}...".format(url_))
            r_new = rq.get(url_)
            soup_new = bsoup(r_new.text, "html.parser")
            for tr in soup_new.find_all('tr', align='center'):
                # Collect the cleaned text of every cell in this row.
                stack = []
                for td in tr.find_all('td'):
                    stack.append(td.text.replace('\n', '').replace('\t', '').strip())
                # Write one comma-separated line per table row.
                acct.write(", ".join(stack) + '\n')

We use a regular expression to pick out the page-number links. Then, using a list comprehension, we build a list of URL strings. Finally, we iterate over them.
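
If you want to poke at the page-counting logic without hitting the site, here is a rough standard-library sketch of the same idea. The HTML snippet is made up for illustration (the real markup may differ), `count_pages` and `page_urls` are names invented for this sketch, and it uses a plain regex on the raw HTML instead of BeautifulSoup.

```python
import re

# Hypothetical pagination markup; the real page's HTML may differ.
sample_html = """
<a href="javascript:goToPage(1)">1</a>
<a href="javascript:goToPage(2)">2</a>
<a href="javascript:goToPage(3)">3</a>
"""

# Capture the visible number of every link whose href calls goToPage.
PAGE_LINK = re.compile(r'<a[^>]*href="javascript:goToPage[^"]*"[^>]*>\s*(\d+)\s*</a>')


def count_pages(html):
    """Return the number shown in the last page link, or 1 if there are none."""
    numbers = PAGE_LINK.findall(html)
    return int(numbers[-1]) if numbers else 1


def page_urls(base_url, num_pages):
    """Build one URL per page, numbered from 1 to num_pages inclusive."""
    return ["{}&pageNum={}".format(base_url, page) for page in range(1, num_pages + 1)]


print(count_pages(sample_html))
print(page_urls("http://example.com/courses.cfm?subjId=ACCY", count_pages(sample_html)))
```

For real pages BeautifulSoup is the more robust choice, since a regex like this breaks easily when the markup changes.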

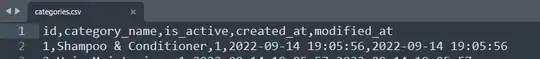

Results:

    Processing http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY&pageNum=1...
    Processing http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY&pageNum=2...
    Processing http://my.gwu.edu/mod/pws/courses.cfm?campId=1&termId=201501&subjId=ACCY&pageNum=3...
    [Finished in 6.8s]

Hope that helps.

EDIT:

Out of sheer boredom, I think I just created a scraper for the entire class directory. Also, I updated both the code above and the code below so they don't error out when there is only a single page available.

    from bs4 import BeautifulSoup as bsoup
    import requests as rq
    import re

    spring_2015 = "http://my.gwu.edu/mod/pws/subjects.cfm?campId=1&termId=201501"
    r = rq.get(spring_2015)
    # Name the parser explicitly so BeautifulSoup does not have to guess one.
    soup = bsoup(r.text, "html.parser")

    # Collect the link of every subject listed in the directory.
    classes_url_list = [c["href"] for c in soup.find_all("a", href=re.compile(r".*courses.cfm\?campId=1&termId=201501&subjId=.*"))]
    print(classes_url_list)

    # Open the text file once and write every subject into it.
    with open("results.txt", "w") as acct:
        for class_url in classes_url_list:
            base_url = "http://my.gwu.edu/mod/pws/{}".format(class_url)
            r = rq.get(base_url)
            soup = bsoup(r.text, "html.parser")

            # Use regex to isolate only the links of the page numbers, the ones you click on.
            page_count_links = soup.find_all("a", href=re.compile(r".*javascript:goToPage.*"))
            try:
                num_pages = int(page_count_links[-1].get_text())
            except IndexError:
                # No page-number links means there is only one page.
                num_pages = 1

            # Add 1 because range() excludes its upper bound.
            url_list = ["{}&pageNum={}".format(base_url, str(page)) for page in range(1, num_pages + 1)]

            # Scrape every page of this subject.
            for url_ in url_list:
                print("Processing {}...".format(url_))
                r_new = rq.get(url_)
                soup_new = bsoup(r_new.text, "html.parser")
                for tr in soup_new.find_all('tr', align='center'):
                    # Collect the cleaned text of every cell in this row.
                    stack = []
                    for td in tr.find_all('td'):
                        stack.append(td.text.replace('\n', '').replace('\t', '').strip())
                    # Write one comma-separated line per table row.
                    acct.write(", ".join(stack) + '\n')
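
One optional tweak: the string formatting used to build `base_url` assumes every scraped `href` is relative to `/mod/pws/`. If that ever turns out not to be the case, `urljoin` from Python 3's standard library resolves the link against the directory page's own URL instead. A small sketch, where the `href` value is only an assumed example of what the page returns:

```python
from urllib.parse import urljoin

index_url = "http://my.gwu.edu/mod/pws/subjects.cfm?campId=1&termId=201501"

# Assumed form of a scraped href; the real attribute values may differ.
href = "courses.cfm?campId=1&termId=201501&subjId=ACCY"

# Resolve the link relative to the page it was found on.
subject_url = urljoin(index_url, href)
print(subject_url)
```

An `href` that is already absolute passes through `urljoin` unchanged, so the same line covers both cases.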