Detecting outliers could be done with help of DBSCAN R package, well-known algorithm used for cluster identification (see WIKIPEDIA for more details ).

This function has three important inputs:

- x: your data (only numeric value)

- eps: the target maximal distance

- minPts: number of minimal points to consider them as a cluster

Evaluating eps can be done with help of knndist(...) and knndistplot(...) functions:

- knndistplot will plot the eps values on your data set for a given k (i.e. minPts) ==> you could visually select an effective eps value (generally in the knee curve part)

- knndist will evaluate eps values and return them in matrix from. the k input will generate 1:1:k valuations and you could use the result for determining programatically the accurate eps & k values

Next you have just to use dbscan(yourdata, eps, k) for getting a dbscan object with following components:

- eps: the eps used for calculations

- minPts: the minimal number of points for identifying a cluster

- cluster: an integer vector identifying points which belongs to a cluster (=1) or not (=0). The last ones correspond to the outliers you want to eliminate.

Please note the following limitations on dbscan:

- dbscan use euclidean distance and so it is submitted to "Curse of Dimensions". This can be avoided with use of PCA

- dbscan eliminates superposed points which might generate unidentified points. This can be solved either by merging the results with your data with a left outer join or by using jitter(...) function which adds noise to your data. According to the data you displayed, I think it could be the case for your data

knowing this limitations, dbscan package offers two alternative methods : LOF and OPTICS (an extension of DBSCAN)

Edit on 25 Jabuary 2016

Following @rawr answer, I'm giving an example based on mtcars dataset to show how to use dbscan for identifying outliers. Please note that my example will use the excellent data.table package instead of the classical data.frame.

First, I start to replicate rawr's approach for illustrating the use of data.table

require(data.table)

require(ggplot2)

require(dbscan)

data(mtcars)

dt_mtcars <- as.data.table(mtcars)

# based on rawr's approach

plot(wt~mpg, data=dt_mtcars)

lo <- loess.smooth(dt_mtcars[,mpg], dt_mtcars[,wt])

lines(lo$x,lo$y, lwd=3)

lines(lo$x,lo$y * 1.2, lwd=3 , col=2 )

lines(lo$x,lo$y / 1.2, lwd=3 , col=2 )

Thereby, we can assess that we get the same results independently of underlying support.

Second, the following code illustrate the DBSCAN approach which starts with determining eps and k, the necessary number of points to identifying a cluster:

res_knn = kNNdist( dt_mtcars[, .(wt, mpg)] , k = 10)

dim_knn = dim(res_knn)

x_knn = seq(1, dim_knn[1])

ggplot() +

geom_line( aes( x = x_knn , y = sort(res_knn[, 1]) , col = 1 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 2]) , col = 2 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 3]) , col = 3 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 4]) , col = 4 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 5]) , col = 5 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 6]) , col = 6 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 7]) , col = 7 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 8]) , col = 8 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 9]) , col = 9 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 10]) , col = 10 ) ) +

xlab('sorted results') +

ylab('kNN distance')

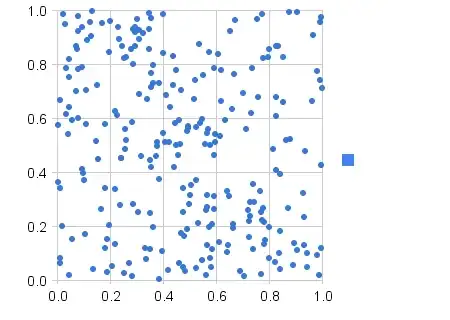

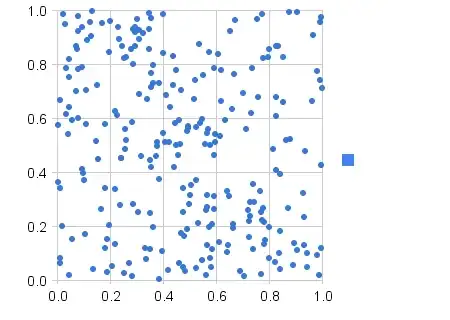

The results are plotted in following graphic:

It shows that the calculated kNN distance is sensitive to the factor k however the accurate eps value for segregating outliers is located in the knee part of the curves ==> suitable eps is located between 2 and 4.

This is a visual assessment which could be automatized with appropriate search algorithms (for instance, see this link).

Regarding k, a tradeoff must be defined, knowing the lower k is, the less stringent results are.

In next part, we will parametrize dbscan with eps = 3 (based on visual estimation) and k = 4 for having slight stringent results. We will plot these results with help of rawr's code:

eps = 3

k = 4

res_dbscan = dbscan( dt_mtcars[, .(wt, mpg)] , eps , k )

plot(wt~mpg, data=dt_mtcars, col = res_dbscan$cluster)

lo <- loess.smooth(dt_mtcars[res_dbscan$cluster>0,mpg], dt_mtcars[res_dbscan$cluster>0,wt])

lines(lo$x,lo$y, lwd=3)

lines(lo$x,lo$y * 1.2, lwd=3 , col=2 )

lines(lo$x,lo$y / 1.2, lwd=3 , col=2 )

we got this figure where we can assess that we got different results from rawr's approach, where the points located in mpg = [10,13] are considered as outliers.

These results could considered as odd comparing to rawr's solution, which works under the assumptions of having bivariate data (Y ~ X). However mtcars is a multidimensional dataset where relationship between variables might be (or not) linear... To assess this point, we could scatterplot this dataset, filtered on numerical values for instance

pairs(dt_mtcars[, .(mpg, disp, hp, drat, wt, qsec)])

If we focus only on the result wt ~ mpg, we might think it is an anti-linear relationship at first look. But with other plotted relationships, this might be not the case and finding outliers in N-Dim environment is a bit trickier. Indeed one point might be considered as outlier when projected in particular 2D comparison... but inversely if we add a new comparison dimension. Indeed we might have collinearity which could be identified and so strengthen the cluster relationship or not.

My friends, I agree it's a lot of if and in order to illustrate this situation, we will proceed to a dbscan analysis on numeric values of mtcars.

So I will replicate the process presented earlier and let's start with the kNN distance analysis:

res_knn = kNNdist( dt_mtcars[, .(mpg, disp, hp, drat, wt, qsec)] , k = 10)

dim_knn = dim(res_knn)

x_knn = seq(1, dim_knn[1])

ggplot() +

geom_line( aes( x = x_knn , y = sort(res_knn[, 1]) , col = 1 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 2]) , col = 2 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 3]) , col = 3 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 4]) , col = 4 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 5]) , col = 5 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 6]) , col = 6 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 7]) , col = 7 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 8]) , col = 8 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 9]) , col = 9 ) ) +

geom_line( aes( x = x_knn , y = sort(res_knn[, 10]) , col = 10 ) ) +

xlab('sorted results') +

ylab('kNN distance')

Comparatively to the analysis produced on wt ~ mpg, we can see that kNNdist(...) produce far more important kNN distance (until 200 with k = 10 for instance). However we still have the knee part which help us to estimate a suitable eps value.

In next part, we will use eps = 75 and k = 5 and

# optimal eps value is between 40 (k=1) and 130 (k=10)

eps = 75

k = 5

res_dbscan = dbscan( dt_mtcars[, .(mpg, disp, hp, drat, wt, qsec)] , eps , k )

pairs(dt_mtcars[, .(mpg, disp, hp, drat, wt, qsec)] , col = res_dbscan$cluster+2L)

Thereby the scatterplot of this analysis highlights that identifying outliers could be tricky in N-Dim environment, due to complex relationships between variables. But please note that in most of cases, the outliers are located in a corner part of the 2D projection, which strengthen the results obtained with wt ~ mpg