In terms of RDD persistence, what are the differences between cache() and persist() in Spark?


6 Answers

With cache(), you use only the default storage level:

- `MEMORY_ONLY` for RDD
- `MEMORY_AND_DISK` for Dataset

With persist(), you can specify which storage level you want for both RDD and Dataset.

From the official docs:

- You can mark an `RDD` to be persisted using the `persist()` or `cache()` methods on it.
- Each persisted `RDD` can be stored using a different storage level.
- The `cache()` method is a shorthand for using the default storage level, which is `StorageLevel.MEMORY_ONLY` (store deserialized objects in memory).

Use persist() if you want to assign a storage level other than:

- `MEMORY_ONLY` for an RDD, or
- `MEMORY_AND_DISK` for a Dataset

Interesting link for the official documentation : which storage level to choose
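To make the defaults above concrete, here is a minimal sketch, assuming a local Spark installation with the Scala API on the classpath. The object name `CacheVsPersist`, the helper names, and the sample ranges are made up for illustration. It reads back the storage level that Spark reports after `cache()` and after `persist(level)`, using `RDD.getStorageLevel` and `Dataset.storageLevel`.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

// Hypothetical helper object, for illustration only.
object CacheVsPersist {

  /** Storage levels reported after cache() on an RDD and on a Dataset. */
  def defaultLevels(spark: SparkSession): (StorageLevel, StorageLevel) = {
    val rdd = spark.sparkContext.parallelize(1 to 100).cache()
    val ds  = spark.range(0, 100).cache()
    val levels = (rdd.getStorageLevel, ds.storageLevel)
    // Release the cached data so later calls start from a clean state.
    rdd.unpersist()
    ds.unpersist()
    levels
  }

  /** Storage levels reported after persist(level) with explicitly chosen levels. */
  def explicitLevels(spark: SparkSession): (StorageLevel, StorageLevel) = {
    val rdd = spark.sparkContext.parallelize(1 to 100)
      .persist(StorageLevel.MEMORY_AND_DISK_SER)
    // A different range than above, so this Dataset has its own query plan.
    val ds = spark.range(100, 200).persist(StorageLevel.DISK_ONLY)
    val levels = (rdd.getStorageLevel, ds.storageLevel)
    rdd.unpersist()
    ds.unpersist()
    levels
  }

  def main(args: Array[String]): Unit = {
    // Local master and app name are assumptions for a quick experiment.
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("cache-vs-persist")
      .getOrCreate()
    println(s"cache():        ${defaultLevels(spark)}")
    println(s"persist(level): ${explicitLevels(spark)}")
    spark.stop()
  }
}
```

With the Scala API, the first pair should correspond to `MEMORY_ONLY` for the RDD and `MEMORY_AND_DISK` for the Dataset, and the second pair to the levels passed in. Defaults in other language bindings may differ, so it is worth checking on the version you run.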

- Note that `cache()` now uses [MEMORY_AND_DISK](http://spark.apache.org/docs/2.3.0/api/python/pyspark.sql.html#pyspark.sql.DataFrame.cache) – ximiki Jun 21 '18 at 21:56

- I don't think the above comment is correct. Reading the latest official documentation, using the link ahars provides aligns with the last bullet point... The cache() method is a shorthand for using the default storage level, which is StorageLevel.MEMORY_ONLY (store deserialized objects in memory). – user2596560 Sep 18 '19 at 18:32

- @ximiki, `MEMORY_AND_DISK` is the default value only for Datasets. `MEMORY_ONLY` is still the default value for RDD – ahars Oct 28 '19 at 14:30

- @user2596560 the comment is correct for the default cache value of Datasets. You are right for the RDD, which still keeps the `MEMORY_ONLY` default value – ahars Oct 28 '19 at 14:32

The difference between `cache` and `persist` operations is purely syntactic. `cache` is a synonym of `persist` or `persist(MEMORY_ONLY)`, i.e. `cache` is merely `persist` with the default storage level `MEMORY_ONLY`.

But with `persist()`, we can save the intermediate results in 5 storage levels:

- MEMORY_ONLY

- MEMORY_AND_DISK

- MEMORY_ONLY_SER

- MEMORY_AND_DISK_SER

- DISK_ONLY

    /** Persist this RDD with the default storage level (`MEMORY_ONLY`). */
    def persist(): this.type = persist(StorageLevel.MEMORY_ONLY)

    /** Persist this RDD with the default storage level (`MEMORY_ONLY`). */
    def cache(): this.type = persist()

see more details here...

Caching and persistence are optimization techniques for (iterative and interactive) Spark computations. They help save interim partial results so they can be reused in subsequent stages. These interim results, as RDDs, are thus kept in memory (the default) or in more durable storage such as disk, and/or replicated.

RDDs can be cached using the `cache` operation. They can also be persisted using the `persist` operation.

# persist, cache

These functions can be used to adjust the storage level of an `RDD`. When freeing up memory, Spark will use the storage level identifier to decide which partitions should be kept. The parameterless variants `persist()` and `cache()` are just abbreviations for `persist(StorageLevel.MEMORY_ONLY)`.

Warning: Once the storage level has been changed, it cannot be changed again!
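The warning above can be checked with a small experiment. This is a minimal sketch, assuming a local Spark installation; the object name `StorageLevelChange` and the helper `changeLevel` are hypothetical. It relies on `RDD.persist` rejecting a new level while a different one is already assigned, and on `unpersist()` clearing the assigned level so that a new one can be set afterwards.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel
import scala.util.Try

// Hypothetical helper object, for illustration only.
object StorageLevelChange {

  /** Returns (did the direct level change fail?, level after unpersist + persist). */
  def changeLevel(sc: SparkContext): (Boolean, StorageLevel) = {
    // cache() assigns MEMORY_ONLY to this RDD.
    val rdd = sc.parallelize(Seq("Gnu", "Cat", "Rat", "Dog"), 2).cache()

    // Requesting a different level while one is already assigned is rejected
    // with an exception, which Try captures here.
    val directChangeFailed = Try(rdd.persist(StorageLevel.DISK_ONLY)).isFailure

    // Clearing the assigned level first allows a new one to be set.
    rdd.unpersist()
    rdd.persist(StorageLevel.DISK_ONLY)
    val finalLevel = rdd.getStorageLevel

    rdd.unpersist()
    (directChangeFailed, finalLevel)
  }

  def main(args: Array[String]): Unit = {
    // Local master and app name are assumptions for a quick experiment.
    val conf = new SparkConf().setMaster("local[*]").setAppName("storage-level-change")
    val sc = new SparkContext(conf)
    println(changeLevel(sc))
    sc.stop()
  }
}
```

So the level is fixed only for as long as the RDD stays persisted: to switch levels, unpersist first and then persist again, accepting that the data will be recomputed on the next action.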

Warning: cache judiciously... see ((Why) do we need to call cache or persist on a RDD)

Just because you can cache a RDD in memory doesn’t mean you should blindly do so. Depending on how many times the dataset is accessed and the amount of work involved in doing so, recomputation can be faster than the price paid by the increased memory pressure.

It should go without saying that if you only read a dataset once, there is no point in caching it; doing so will actually make your job slower. The size of cached datasets can be seen from the Spark shell.

Listing Variants...

def cache(): RDD[T]

def persist(): RDD[T]

def persist(newLevel: StorageLevel): RDD[T]

See the example below:

val c = sc.parallelize(List("Gnu", "Cat", "Rat", "Dog", "Gnu", "Rat"), 2)

c.getStorageLevel

res0: org.apache.spark.storage.StorageLevel = StorageLevel(false, false, false, false, 1)

c.cache

c.getStorageLevel

res2: org.apache.spark.storage.StorageLevel = StorageLevel(false, true, false, true, 1)

Note :

Due to the very small and purely syntactic difference between caching and persistence of RDDs the two terms are often used interchangeably.

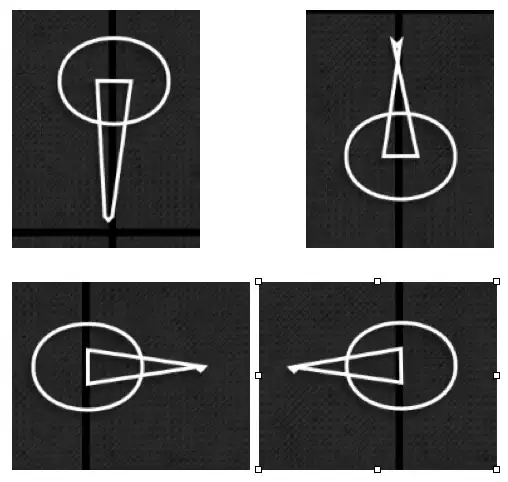

See more visually here....

Persist in memory and disk:

Cache:

Caching can improve the performance of your application to a great extent.

In general, it is recommended to use persist with a specific storage level to have more control over caching behavior, while cache can be used as a quick and convenient way to cache data in memory.


There is no difference. From RDD.scala.

    /** Persist this RDD with the default storage level (`MEMORY_ONLY`). */
    def persist(): this.type = persist(StorageLevel.MEMORY_ONLY)

    /** Persist this RDD with the default storage level (`MEMORY_ONLY`). */
    def cache(): this.type = persist()


Spark gives 5 types of storage level:

- MEMORY_ONLY
- MEMORY_ONLY_SER
- MEMORY_AND_DISK
- MEMORY_AND_DISK_SER
- DISK_ONLY

cache() will use MEMORY_ONLY. If you want to use something else, use persist(StorageLevel.<*type*>).

By default, persist() will store the data in the JVM heap as unserialized objects.

Both cache() and persist() are used to improve the performance of Spark computations. These methods help to save intermediate results so they can be reused in subsequent stages.

The only difference between cache() and persist() is that cache() saves intermediate results at the default storage level only (MEMORY_ONLY for an RDD), while persist() lets us save the intermediate results in any of 5 storage levels (MEMORY_ONLY, MEMORY_AND_DISK, MEMORY_ONLY_SER, MEMORY_AND_DISK_SER, DISK_ONLY).


For the impatient:

Same

Without passing an argument, persist() and cache() are the same, with default settings:

- when `RDD`: MEMORY_ONLY
- when `Dataset`: MEMORY_AND_DISK

Difference:

Unlike cache(), persist() allows you to pass an argument inside the parentheses in order to specify the level:

- `persist(MEMORY_ONLY)`
- `persist(MEMORY_ONLY_SER)`
- `persist(MEMORY_AND_DISK)`
- `persist(MEMORY_AND_DISK_SER)`
- `persist(DISK_ONLY)`

Voilà!
