I am using Moment.js to compare dates, but I am getting an odd result. My code:

    var extraStackData = function (data, from_date1, end_date1) {
        var result = {};
        for (var i in data) {
            var row = data[i];
            if (typeof result[row['know_source']] == 'undefined') {
                result[row['know_source']] = {};
            }
            result[row['know_source']][row['create_date']] = parseInt(row['sum']);
        }
        // console.log(result);
        console.log(from_date1);
        console.log(end_date1);
        console.log(from_date1 > end_date1);
        var cur_date = from_date1;
        console.log(cur_date);
        console.log(cur_date.isAfter(end_date1));
        for (var source in result) {
            for (var cur_date = from_date; cur_date.isBefore(end_date); cur_date.add("days", 1)) {
                console.log(cur_date);
                if (typeof result[source][cur_date] == 'undefined') {
                    result[source][cur_date] = 0;
                }
            }
            // console.log(result[source])
        }
    };

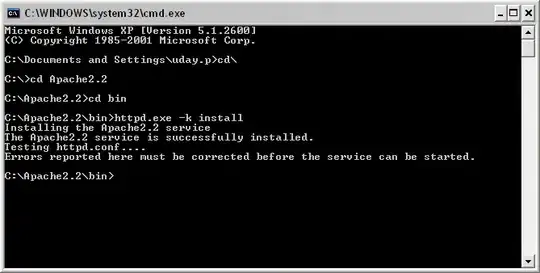

The result is as follows:

According to the output, the variable from_date='2014-10-1' is treated as later than end_date='2014-11-18'. Can someone help me out?

Update: I have found an even weirder thing. The following piece of code:

    console.log(from_date);
    console.log(end_date);
    var days = from_date.diff(end_date, 'days');
    console.log(days);

in which I use diff to get the number of days between the two dates, gives the following output: