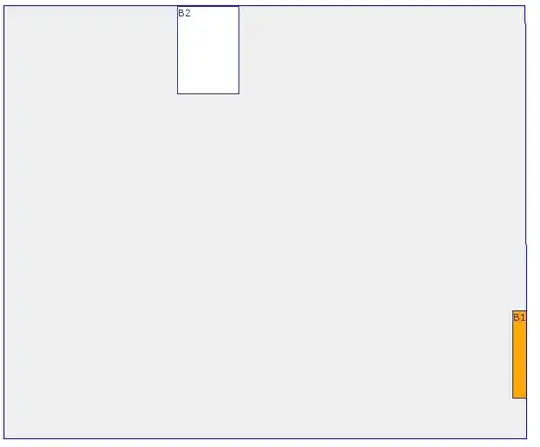

I am trying to implement the following kind of pipeline on the GPU with CUDA:

I have four streams, each with a host-to-device copy, a kernel launch, and a device-to-host copy. However, the kernel in each stream has to wait until the host-to-device copy of the next stream has finished.

I intended to use cudaStreamWaitEvent for synchronization. However, according to the documentation, this only works if cudaEventRecord has already been called for the corresponding event, and that is not the case in this scenario.
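To make the documented behavior concrete, here is a minimal sketch of what I understand it to mean: an event that has never been recorded represents an empty set of work, so querying it reports completion and a wait on it gives the stream nothing to wait for. The helper name `unrecorded_event_is_complete` is made up for illustration, and the check assumes a CUDA-capable device is present.

```cuda
#include <cuda_runtime.h>

// Hypothetical helper (not from my real code): returns true if an event that
// was never recorded already reports completion and a wait on it does not
// hold back the stream.
bool unrecorded_event_is_complete() {
    cudaEvent_t ev = nullptr;
    cudaStream_t s = nullptr;
    if (cudaEventCreateWithFlags(&ev, cudaEventDisableTiming) != cudaSuccess) {
        return false;
    }
    if (cudaStreamCreate(&s) != cudaSuccess) {
        cudaEventDestroy(ev);
        return false;
    }

    // No cudaEventRecord has been issued for ev at this point.
    cudaError_t query = cudaEventQuery(ev);             // empty set of work
    cudaError_t wait  = cudaStreamWaitEvent(s, ev, 0);  // nothing to wait for
    cudaError_t sync  = cudaStreamSynchronize(s);       // stream is not blocked

    cudaStreamDestroy(s);
    cudaEventDestroy(ev);
    return query == cudaSuccess && wait == cudaSuccess && sync == cudaSuccess;
}
```

Calling this from `main` and printing the result should be enough to see whether a later cudaEventRecord on the same event could still affect a wait that was issued earlier; as far as I can tell from the documentation, it cannot.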

The streams are managed by separate CPU threads which basically look as follows:

Do some work ...

cudaMemcpyAsync H2D

cudaEventRecord (event_copy_complete[current_stream])

cudaStreamWaitEvent (event_copy_complete[next_stream])

call kernel on current stream

cudaMemcpyAsync D2H

Do some work ...

The CPU threads are coordinated so that the streams are started in the correct order. As a result, cudaStreamWaitEvent for the copy-complete event of stream 1 is called (in stream 0) before cudaEventRecord is called for that very event (in stream 1), so the wait is effectively a no-op.

I have the feeling that events can't be used this way. Is there another way to achieve the desired synchronization?

Btw, I can't just reverse the stream order because there are some more dependencies.

API call order

As requested, here is the order in which CUDA calls are issued:

//all on stream 0

cpy H2D

cudaEventRecord (event_copy_complete[0])

cudaStreamWaitEvent (event_copy_complete[1])

K<<< >>>

cpy D2H

//all on stream 1

cpy H2D

cudaEventRecord (event_copy_complete[1])

cudaStreamWaitEvent (event_copy_complete[2])

K<<< >>>

cpy D2H

//all on stream 2

cpy H2D

cudaEventRecord (event_copy_complete[2])

cudaStreamWaitEvent (event_copy_complete[3])

K<<< >>>

cpy D2H

...

As can be seen, each call to cudaStreamWaitEvent is issued before the cudaEventRecord call for the event it waits on.
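For anyone who wants to reproduce this, the call order listed above can be sketched as a single function. This is a simplified reconstruction, not my actual code: it issues everything from one host thread instead of one thread per stream, the kernel body, the buffer size `kN` and the function name `run_listed_order` are placeholders, and I assume the last stream has no following stream to wait on.

```cuda
#include <cuda_runtime.h>

constexpr int kStreams = 4;    // four streams, as described above
constexpr int kN = 1 << 20;    // assumed number of floats per stream

// Placeholder kernel; the real kernel is not shown in this question.
__global__ void K(float* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1.0f;
}

// Issues the calls in the listed order. Returns true if every CUDA call
// succeeded and each buffer came back incremented by one.
bool run_listed_order() {
    const size_t bytes = kN * sizeof(float);
    float* h[kStreams] = {};
    float* d[kStreams] = {};
    cudaStream_t s[kStreams] = {};
    cudaEvent_t copy_complete[kStreams] = {};
    bool ok = true;
    auto check = [&ok](cudaError_t e) { if (e != cudaSuccess) ok = false; };

    for (int i = 0; i < kStreams; ++i) {
        check(cudaMallocHost((void**)&h[i], bytes));  // pinned host memory
        check(cudaMalloc((void**)&d[i], bytes));
        check(cudaStreamCreate(&s[i]));
        check(cudaEventCreateWithFlags(&copy_complete[i], cudaEventDisableTiming));
    }

    if (ok) {
        for (int i = 0; i < kStreams; ++i)
            for (int j = 0; j < kN; ++j) h[i][j] = float(i);

        for (int cur = 0; cur < kStreams; ++cur) {
            check(cudaMemcpyAsync(d[cur], h[cur], bytes, cudaMemcpyHostToDevice, s[cur]));
            check(cudaEventRecord(copy_complete[cur], s[cur]));
            if (cur + 1 < kStreams) {
                // copy_complete[cur + 1] has not been recorded yet, so this
                // wait does not create the dependency I am after.
                check(cudaStreamWaitEvent(s[cur], copy_complete[cur + 1], 0));
            }
            K<<<(kN + 255) / 256, 256, 0, s[cur]>>>(d[cur], kN);
            check(cudaGetLastError());
            check(cudaMemcpyAsync(h[cur], d[cur], bytes, cudaMemcpyDeviceToHost, s[cur]));
        }
        check(cudaDeviceSynchronize());

        for (int i = 0; ok && i < kStreams; ++i)
            ok = (h[i][0] == float(i) + 1.0f) && (h[i][kN - 1] == float(i) + 1.0f);
    }

    for (int i = 0; i < kStreams; ++i) {
        if (copy_complete[i]) cudaEventDestroy(copy_complete[i]);
        if (s[i]) cudaStreamDestroy(s[i]);
        if (d[i]) cudaFree(d[i]);
        if (h[i]) cudaFreeHost(h[i]);
    }
    return ok;
}
```

Note that every call in this sketch succeeds and the per-stream results are correct, because each placeholder kernel only touches its own buffer. What is missing is exactly the cross-stream ordering: nothing here forces the kernel in stream 0 to start after the host-to-device copy in stream 1.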