I am doing a little proof of concept profile and optimize type of example. However, I ran into something that I can't quite explain, and I'm hoping that someone here can clear this up.

I wrote a very short snippet of code:

int main (void)

{

for (int j = 0; j < 1000; j++)

{

a = 1;

b = 2;

c = 3;

for (int i = 0; i < 100000; i++)

{

callbackWasterOne();

callbackWasterTwo();

}

printf("Two a: %d, b: %d, c: %d.", a, b, c);

}

return 0;

}

void callbackWasterOne(void)

{

a = b * c;

}

void callbackWasterTwo(void)

{

b = a * c;

}

All it does is call two very basic functions that just multiply numbers together. Since the code is identical, I expect the profiler (oprofile) to return roughly the same number.

I run this code 10 times per profile, and I got the following values for how long time is spent on each function:

- main: average = 5.60%, stdev = 0.10%

- callbackWasterOne = 43.78%, stdev = 1.04%

- callbackWasterTwo = 50.24%, stdev = 0.98%

- rest is in miscellaneous things like printf and no-vmlinux

The difference between the time for callbackWasterOne and callbackWasterTwo is significant enough (to me at least) given that they have the same code, that I switched their order in my code and reran the profiler with the following results now:

- main: average = 5.45%, stdev = 0.40%

- callbackWasterOne = 50.69%, stdev = 0.49%

- callbackWasterTwo = 43.54%, stdev = 0.18%

- rest is in miscellaneous things like printf and no-vmlinux

So evidently the profiler samples one more than the other based on the execution order. Not good. Disregarding this, I decided to see the effects of removing some code and I got this for execution times (averages):

- Nothing removed: 0.5295s

- call to callbackWasterOne() removed from for loop: 0.2075s

- call to callbackWasterTwo() removed from for loop: 0.2042s

- remove both calls from for loop: 0.1903s

- remove both calls and the for loop: 0.0025s

- remove contents of callbackWasterOne: 0.379s

- remove contents of callbackWasterTwo: 0.378s

- remove contents of both: 0.382s

So here is what I'm having trouble understanding:

- When I remove just one of the calls from the for loop, the execution time drops by ~60%, which is greater than the time spent by that one function + the main in the first place! How is this possible?

- why is the effect of removing both calls from the loop so little compared to removing just one? I can't figure out this non-linearity. I understand that the for loop is expensive, but in that case (if most of the remaining time can be attributed to the for loop that performs the function calls), why would removing one of the calls cause such a large improvement in the first place?

I looked at the disassembly and the two functions are the same in code. The calls to them are the same, and removing the call simply deletes the one call line.

Other info that might be relevant

- I'm using Ubuntu 14.04LTS

- The code is complied by Eclipse with no optimization (O0)

- I time the code by running it in terminal using "time"

- I use OProfile with count = 10000 and 10 repetitions.

Here are the results from when I do this with -O1 optimization:

- main: avg = 5.89%, stdev = 0.14%

- callbackWasterOne: avg = 44.28%, stdev = 2.64%

- callbackWasterTwo: avg = 49.66%, stdev = 2.54% (greater than before)

- Rest is miscellaneous

Results of removing various bits (execution time averages):

- Nothing removed: 0.522s

- Remove callbackWasterOne call: 0.149s (71.47% decrease)

- Remove callbackWasterTwo call: 0.123% (76.45% decrease)

- Remove both calls: 0.0365s (93.01% decrease) (what I would expect given the profile data just above)

So removing one call now is much better than before, and removing both still carries a benefit (probably because the optimizer understands that nothing happens in the loop). Still, removing one is much more beneficial than I would have anticipated.

Results of the two functions using different variables: I defined 3 more variables for callbackWasterTwo() to use instead of reusing same ones. Now the results are what I would have expected.

- main: avg = 10.87%, stdev = 0.19% (average is greater, but maybe due to those new variables)

- callbackWasterOne: avg = 46.08%, stdev = 0.53%

- callbackWasterTwo: avg = 42.82%, stdev = 0.62%

- Rest is miscellaneous

Results of removing various bits (execution time averages):

- Nothing removed: 0.520s

- Remove callbackWasterOne call: 0.292s (43.83% decrease)

- Remove callbackWasterTwo call: 0.291% (44.07% decrease)

- Remove both calls: 0.065s (87.55% decrease)

So now removing both calls is pretty much equivalent (within stdev) to removing one call + the other. Since the result of removing either function is pretty much the same (43.83% vs 44.07%), I am going to go out on a limb and say that perhaps the profiler data (46% vs 42%) is still skewed. Perhaps it is the way it samples (going to vary the counter value next and see what happens).

It appears that the success of optimization relates pretty strongly to the code reuse fraction. The only way to achieve "exactly" (you know what I mean) the speedup noted by the profiler is to optimize on completely independent code. Anyway this is all interesting.

I am still looking for some explanation ideas for the 70% decrease in the -O1 case though...

I did this with 10 functions (different formulas in each, but using some combination of 6 different variables, 3 at a time, all multiplication):

These results are disappointing to say the least. I know the functions are identical, and yet, the profiler indicates that some take significantly longer. No matter which one I remove ("fast" or "slow" one), the results are the same ;) So this leaves me to wonder, how many people are incorrectly relying on the profiler to indicate the incorrect areas of code to fix? If I unknowingly saw these results, what could possible tell me to go fix the 5% function rather than the 20% (even though they are exactly the same)? What if the 5% one was much easier to fix, with a large potential benefit? And of course, this profiler might just not be very good, but it is popular! People use it!

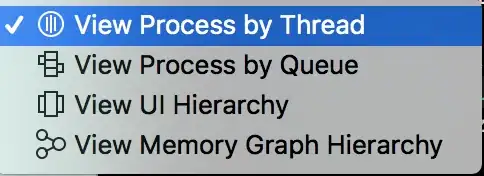

Here is a screenshot. I don't feel like typing it in again:

My conclusion: I am overall rather disappointed with Oprofile. I decided to try out callgrind (valgrind) through command line on the same function and it gave me far more reasonable results. In fact, the results were very reasonable (all functions spent ~ the same amount of time executing). I think Callgrind samples far more than Oprofile ever did.

Callgrind will still not explain the difference in improvement when a function is removed, but at least it gives the correct baseline information...