Visibility into Google Cloud Storage usage is pretty poor.

The fastest way is actually to pull the Stackdriver metrics and look at the total size in bytes (the `storage.googleapis.com/storage/total_bytes` metric).
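If you would rather pull the number programmatically than click through the UI, here is a rough sketch. It assumes you call the Cloud Monitoring `timeSeries.list` REST endpoint yourself (auth is not shown) and that the JSON response has the usual `timeSeries` / `points` shape with the newest point first. The helper names `build_list_url` and `latest_bytes_per_bucket` are made up for this example. Since the metric is split by storage class, a bucket can come back as several series, so the sketch sums them.

```python
from collections import defaultdict
from urllib.parse import urlencode

METRIC_TYPE = "storage.googleapis.com/storage/total_bytes"


def build_list_url(project_id, start_time, end_time, bucket_name=None):
    """Build a timeSeries.list URL for the bucket-size metric.

    start_time / end_time are RFC 3339 strings, e.g. "2024-01-01T00:00:00Z".
    bucket_name is an optional exact bucket name to filter on.
    """
    metric_filter = f'metric.type = "{METRIC_TYPE}"'
    if bucket_name is not None:
        metric_filter += f' AND resource.labels.bucket_name = "{bucket_name}"'
    query = urlencode({
        "filter": metric_filter,
        "interval.startTime": start_time,
        "interval.endTime": end_time,
    })
    return (
        "https://monitoring.googleapis.com/v3/"
        f"projects/{project_id}/timeSeries?{query}"
    )


def latest_bytes_per_bucket(response):
    """Sum the newest point of every series, grouped by bucket name.

    `response` is the parsed JSON body of a timeSeries.list call.
    Assumes points are ordered newest first.
    """
    totals = defaultdict(float)
    for series in response.get("timeSeries", []):
        points = series.get("points", [])
        if not points:
            continue  # no data written for this series in the interval
        bucket = series["resource"]["labels"]["bucket_name"]
        totals[bucket] += float(points[0]["value"]["doubleValue"])
    return dict(totals)
```

Use an interval of at least a couple of days, otherwise you may get no points back at all because the metric is only written about once a day.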

Unfortunately, there is practically no filtering you can do in Stackdriver. You can't wildcard the bucket name, and the almost useless bucket resource labels are NOT aggregatable in Stackdriver metrics.

Also, this is bucket level only, not per prefix.

The Stackdriver metrics are updated daily, so unless you can wait a day you can't use this to get the current size right now.

UPDATE: Stackdriver metrics now support user metadata labels, so you can label your GCS buckets and aggregate those metrics by the custom labels you apply.
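As a sketch of what aggregating by a custom label could look like against the `timeSeries.list` REST endpoint: the query below sums the bucket-size metric grouped by one label. The label key `team` and the helper name `build_grouped_query` are hypothetical, and I am assuming bucket labels are exposed to filters and group-by as `metadata.user_labels.<key>`, so check that against your own project before relying on it.

```python
from urllib.parse import urlencode


def build_grouped_query(label_key, start_time, end_time):
    """Build the query string for a timeSeries.list call that sums
    bucket sizes per value of one user label.

    Assumption: user labels are addressable as metadata.user_labels.<key>.
    """
    params = [
        ("filter", 'metric.type = "storage.googleapis.com/storage/total_bytes"'),
        ("interval.startTime", start_time),
        ("interval.endTime", end_time),
        # The metric is written roughly daily, so align on one-day windows.
        ("aggregation.alignmentPeriod", "86400s"),
        ("aggregation.perSeriesAligner", "ALIGN_MEAN"),
        # Add up all buckets that share the same label value.
        ("aggregation.crossSeriesReducer", "REDUCE_SUM"),
        ("aggregation.groupByFields", f"metadata.user_labels.{label_key}"),
    ]
    return urlencode(params)


# Hypothetical label key "team"
query = build_grouped_query("team", "2024-01-01T00:00:00Z", "2024-01-03T00:00:00Z")
```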

Edit

I want to add a word of warning if you are creating monitors off of this metric. There is a really crappy bug with this metric right now.

GCP occasionally has platform issues that cause this metric to stop being written. I think it's tenant specific (maybe?), so you also won't see it on their public health status pages. It also seems poorly documented for their internal support staff, because every time we open a ticket to complain they seem to think we are lying, and it takes some back and forth before they even acknowledge it's broken.

I think this happens if you have many buckets and something crashes on their end and stops writing metrics to your projects. While it does not happen all the time, we see it several times a year.
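If you do build monitors on this metric, it may be worth treating "no recent data" as its own condition rather than as a size of zero. A minimal sketch of that check, assuming you already have the `endTime` of the newest point as an RFC 3339 UTC string ending in `Z`; the 48 hour threshold is my own guess (twice the daily update period), not anything GCP documents, and the function names are made up:

```python
from datetime import datetime, timedelta, timezone


def parse_rfc3339_utc(timestamp):
    """Parse a UTC timestamp like "2024-01-04T12:00:00.123Z".

    Fractional seconds are dropped. Assumes the "Z" (UTC) form only.
    """
    base = timestamp.rstrip("Z").split(".")[0]
    parsed = datetime.strptime(base, "%Y-%m-%dT%H:%M:%S")
    return parsed.replace(tzinfo=timezone.utc)


def is_metric_stale(newest_end_time, now, max_age=timedelta(hours=48)):
    """Return True if the newest point is older than max_age.

    newest_end_time: RFC 3339 UTC string of the most recent point.
    now: timezone-aware datetime to compare against.
    """
    return now - parse_rfc3339_utc(newest_end_time) > max_age


now = datetime.now(timezone.utc)
if is_metric_stale("2024-01-01T00:00:00Z", now):
    print("total_bytes has not been written recently; size data is unreliable")
```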

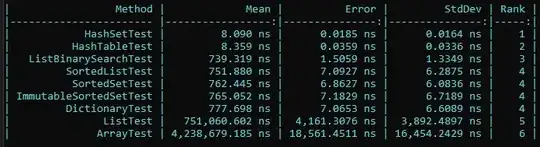

For example it just happened to us again. This is what I'm seeing in stack driver right now across all our projects:

Response from GCP support

Just adding the last response we got from GCP support during this most recent metric outage. I'll add that all our buckets were accessible; it was just that this metric was not being written:

> The product team concluded their investigation stating that this was indeed a widespread issue, not tied to your projects only. This internal issue caused unavailability for some GCS buckets, which was affecting the metering systems directly, thus the reason why the "GCS Bucket Total Bytes" metric was not available.