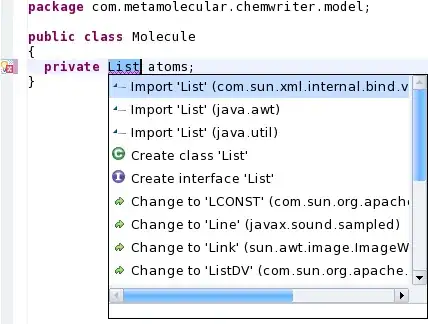

I'm trying to grab an image from a video and then use that image to generate a still movie. The first step works well, but the second step generates a malformed video after I set `appliesPreferredTrackTransform = true`.

Normal image extracted from the video:

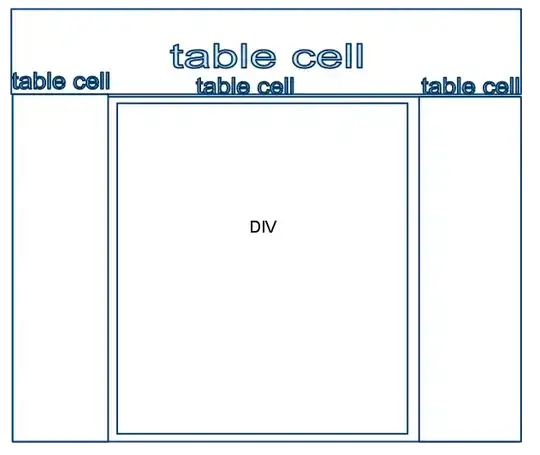

Malformed video generated from the image:


How can this happen? How does a normal image produce a malformed video? Besides, if I move the `GenerateMovieFromImage.generateMovieWithImage` call into the `// #2` completion block, the app crashes at `CGContextDrawImage(context, CGRectMake(0, 0, frameSize.width, frameSize.height), image);`

Here is what I did (in Swift):

```swift
var asset: AVAsset = AVAsset.assetWithURL(self.tmpMovieURL!) as AVAsset
var imageGen: AVAssetImageGenerator = AVAssetImageGenerator(asset: asset)
var time: CMTime = CMTimeMake(0, 60)
imageGen.appliesPreferredTrackTransform = true

imageGen.generateCGImagesAsynchronouslyForTimes([NSValue(CMTime: time)], completionHandler: {
    (requestTime, image, actualTime, result, error) -> Void in
    if result == AVAssetImageGeneratorResult.Succeeded {
        ALAssetsLibrary().writeImageToSavedPhotosAlbum(image, metadata: nil, completionBlock: {
            (nsurl, error) in
            // #2
        })

        GenerateMovieFromImage.generateMovieWithImage(image, completionBlock: {
            (genMovieURL) in
            handler(genMovieURL)
        })
    }
})
```
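With `appliesPreferredTrackTransform = true`, the generator applies the video track's `preferredTransform`, so for a video recorded in portrait the extracted `CGImage` comes back with width and height swapped relative to the encoded frames. That changes the size that is later passed to the writer and the pixel buffer. The arithmetic can be sketched in plain C (valid in an Objective-C file); `LinearTransform`, `Size2D` and `transformed_size` are hypothetical helpers, not Core Graphics API, and the 1920×1080 track with an exact 90° rotation is an assumed example.

```c
#include <assert.h>

/* Stand-in for the linear part of a CGAffineTransform (a, b, c, d).
   Translation (tx, ty) moves a rectangle but never changes its size,
   so it is omitted here. */
typedef struct { double a, b, c, d; } LinearTransform;

typedef struct { double width, height; } Size2D;

static double abs_d(double v) { return v < 0.0 ? -v : v; }

/* Bounding-box size of a width x height rectangle after applying
   x' = a*x + c*y, y' = b*x + d*y (the CGAffineTransform convention). */
static Size2D transformed_size(Size2D s, LinearTransform t) {
    assert(s.width >= 0.0 && s.height >= 0.0);
    Size2D out;
    out.width  = abs_d(t.a) * s.width + abs_d(t.c) * s.height;
    out.height = abs_d(t.b) * s.width + abs_d(t.d) * s.height;
    return out;
}
```

With a 90° rotation (`a = 0, b = 1, c = -1, d = 0`), a 1920×1080 track maps to a 1080×1920 image, while the identity transform leaves it at 1920×1080.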

The `GenerateMovieFromImage.generateMovieWithImage` method was taken from this answer:

```objc
+ (void)generateMovieWithImage:(CGImageRef)image completionBlock:(GenerateMovieWithImageCompletionBlock)handler
{
    NSLog(@"%@", image);
    NSString *path = [NSTemporaryDirectory() stringByAppendingPathComponent:[@"tmpgen" stringByAppendingPathExtension:@"mov"]];
    NSURL *videoUrl = [NSURL fileURLWithPath:path];
    if ([[NSFileManager defaultManager] fileExistsAtPath:path]) {
        NSError *error;
        if ([[NSFileManager defaultManager] removeItemAtPath:path error:&error] == NO) {
            NSLog(@"removeItemAtPath %@ error: %@", path, error);
        }
    }

    // TODO: rotate the image programmatically instead of by hand
    int width = (int)CGImageGetWidth(image);
    int height = (int)CGImageGetHeight(image);

    NSError *error = nil;
    AVAssetWriter *videoWriter = [[AVAssetWriter alloc] initWithURL:videoUrl
                                                           fileType:AVFileTypeQuickTimeMovie
                                                              error:&error];
    NSParameterAssert(videoWriter);

    NSDictionary *videoSettings = [NSDictionary dictionaryWithObjectsAndKeys:
                                   AVVideoCodecH264, AVVideoCodecKey,
                                   [NSNumber numberWithInt:width], AVVideoWidthKey,
                                   [NSNumber numberWithInt:height], AVVideoHeightKey,
                                   nil];

    AVAssetWriterInput *writerInput = [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeVideo
                                                                          outputSettings:videoSettings];
    NSParameterAssert(writerInput);
    NSParameterAssert([videoWriter canAddInput:writerInput]);
    [videoWriter addInput:writerInput];

    AVAssetWriterInputPixelBufferAdaptor *adaptor = [AVAssetWriterInputPixelBufferAdaptor
        assetWriterInputPixelBufferAdaptorWithAssetWriterInput:writerInput
                                   sourcePixelBufferAttributes:nil];

    // 2) Start a session:
    NSLog(@"start session");
    [videoWriter startWriting];
    [videoWriter startSessionAtSourceTime:kCMTimeZero]; // use kCMTimeZero if unsure

    dispatch_queue_t mediaInputQueue = dispatch_queue_create("mediaInputQueue", NULL);
    [writerInput requestMediaDataWhenReadyOnQueue:mediaInputQueue usingBlock:^{
        if ([writerInput isReadyForMoreMediaData]) {
            // 3) Write some samples:
            // Or you can use AVAssetWriterInputPixelBufferAdaptor.
            // That lets you feed the writer input data from a CVPixelBuffer
            // that's quite easy to create from a CGImage.
            CVPixelBufferRef sampleBuffer = [self newPixelBufferFromCGImage:image];
            if (sampleBuffer) {
                CMTime frameTime = CMTimeMake(150, 30);
                [adaptor appendPixelBuffer:sampleBuffer withPresentationTime:kCMTimeZero];
                [adaptor appendPixelBuffer:sampleBuffer withPresentationTime:frameTime];
                CFRelease(sampleBuffer);
            }
        }

        // 4) Finish the session:
        [writerInput markAsFinished];
        [videoWriter endSessionAtSourceTime:CMTimeMakeWithSeconds(5, 30.0)]; // optional; can call finishWriting without specifying an end time
        // [videoWriter finishWriting]; // deprecated in iOS 6
        NSLog(@"to finish writing");
        [videoWriter finishWritingWithCompletionHandler:^{
            NSLog(@"%@", videoWriter);
            NSLog(@"finishWriting..");
            handler(videoUrl);
            ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];
            [library writeVideoAtPathToSavedPhotosAlbum:[NSURL fileURLWithPath:path] completionBlock:^(NSURL *assetURL, NSError *error) {
                if (error != nil) {
                    NSLog(@"writeVideoAtPathToSavedPhotosAlbum error: %@", error);
                }
            }];
        }]; // iOS 6.0+
    }];
}
```
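`generateMovieWithImage` passes the image's own width and height straight into `AVVideoWidthKey` and `AVVideoHeightKey`. A workaround commonly suggested for skewed `AVAssetWriter` output is to keep the width a multiple of 16 (the H.264 macroblock size). With 4-byte ARGB pixels that also makes every row a multiple of 64 bytes, so a row-alignment rule of up to 64 bytes adds no padding. The 64-byte figure is an assumption for illustration, and `round_down_to_multiple` and `row_is_aligned` are hypothetical helpers, sketched in plain C.

```c
#include <assert.h>
#include <stddef.h>

/* Round a dimension down to the nearest multiple of `block`
   (e.g. 16, the H.264 macroblock size). */
static size_t round_down_to_multiple(size_t value, size_t block) {
    assert(block > 0);
    return value / block * block;
}

/* Nonzero when a row of `width` pixels at `bytes_per_pixel` is already
   a whole number of `alignment`-byte units, i.e. needs no padding. */
static int row_is_aligned(size_t width, size_t bytes_per_pixel, size_t alignment) {
    assert(alignment > 0);
    return (width * bytes_per_pixel) % alignment == 0;
}
```

Rounding 1080 down gives 1072, and 1072 × 4 = 4288 bytes is an exact multiple of 64, whereas 1080 × 4 = 4320 is not. Whether to crop, scale or pad the image to the rounded size is left open here.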

```objc
+ (CVPixelBufferRef)newPixelBufferFromCGImage:(CGImageRef)image
{
    NSDictionary *options = [NSDictionary dictionaryWithObjectsAndKeys:
                             [NSNumber numberWithBool:YES], kCVPixelBufferCGImageCompatibilityKey,
                             [NSNumber numberWithBool:YES], kCVPixelBufferCGBitmapContextCompatibilityKey,
                             nil];
    CVPixelBufferRef pxbuffer = NULL;
    CGSize frameSize = CGSizeMake(CGImageGetWidth(image), CGImageGetHeight(image));
    NSLog(@"width:%f", frameSize.width);
    NSLog(@"height:%f", frameSize.height);

    CVReturn status = CVPixelBufferCreate(kCFAllocatorDefault, frameSize.width,
                                          frameSize.height, kCVPixelFormatType_32ARGB,
                                          (__bridge CFDictionaryRef)options,
                                          &pxbuffer);
    NSParameterAssert(status == kCVReturnSuccess && pxbuffer != NULL);

    CVPixelBufferLockBaseAddress(pxbuffer, 0);
    void *pxdata = CVPixelBufferGetBaseAddress(pxbuffer);
    NSParameterAssert(pxdata != NULL);

    CGColorSpaceRef rgbColorSpace = CGColorSpaceCreateDeviceRGB();
    CGContextRef context = CGBitmapContextCreate(pxdata, frameSize.width,
                                                 frameSize.height, 8, 4 * frameSize.width,
                                                 rgbColorSpace,
                                                 (CGBitmapInfo)kCGImageAlphaNoneSkipFirst);
    NSParameterAssert(context);

    CGContextConcatCTM(context, CGAffineTransformIdentity);
    CGContextDrawImage(context, CGRectMake(0, 0, frameSize.width, frameSize.height), image);

    CGColorSpaceRelease(rgbColorSpace);
    CGContextRelease(context);
    CVPixelBufferUnlockBaseAddress(pxbuffer, 0);
    return pxbuffer;
}
```
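`newPixelBufferFromCGImage` tells `CGBitmapContextCreate` that each row is `4*frameSize.width` bytes long, but `CVPixelBufferCreate` is free to pad rows for alignment; the actual row length is what `CVPixelBufferGetBytesPerRow(pxbuffer)` reports. If the two disagree, each row is drawn slightly off from where the encoder reads it, which shears the picture. That would be consistent with the problem appearing only once `appliesPreferredTrackTransform` changed the image width, though this is a hypothesis rather than a confirmed diagnosis. The plain C model below simulates the mismatch; `padded_bytes_per_row` and `line_column_seen_in_row` are hypothetical helpers, and a 64-byte row alignment is assumed.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical model of an allocator that pads each row up to
   `alignment` bytes (64 is assumed; Core Video picks the real value,
   which CVPixelBufferGetBytesPerRow reports). */
static size_t padded_bytes_per_row(size_t width, size_t alignment) {
    size_t packed = 4 * width; /* 4 bytes per 32ARGB pixel */
    return (packed + alignment - 1) / alignment * alignment;
}

/* Draw a vertical line at column 0 assuming the packed stride 4*width
   (what CGBitmapContextCreate is told in the question), then read the
   buffer back with the padded stride and return the column at which
   the line appears in `row`, or -1 if it is not visible there. */
static long line_column_seen_in_row(size_t width, size_t height,
                                    size_t alignment, size_t row) {
    assert(row < height);
    size_t real_stride = padded_bytes_per_row(width, alignment);
    size_t packed_stride = 4 * width;
    uint8_t *buf = calloc(height, real_stride);
    assert(buf != NULL);

    /* Writer: assumes rows are packed back to back. */
    for (size_t r = 0; r < height; r++)
        buf[r * packed_stride] = 0xFF;

    /* Reader: walks rows using the real, padded stride. */
    long found = -1;
    for (size_t c = 0; c < width; c++) {
        if (buf[row * real_stride + 4 * c] == 0xFF) {
            found = (long)c;
            break;
        }
    }
    free(buf);
    return found;
}
```

For a 1080-pixel-wide frame the padded row is 4352 bytes instead of 4320, so the line shows up at column 0 in row 0, column 1072 in row 1 and column 1000 in row 10, drifting 8 pixels per row into a diagonal. At 1920 pixels wide there is no padding and the line stays at column 0. In the Objective-C code, the usual change is to pass `CVPixelBufferGetBytesPerRow(pxbuffer)` as the `bytesPerRow` argument of `CGBitmapContextCreate` instead of `4*frameSize.width`.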