I'm writing a program to run svn up in parallel and it is causing the machine to freeze. The server is not experiencing any load issues when this happens.

The commands are run by using ThreadPool.map() to apply a function that wraps subprocess.Popen() to each command:

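    import multiprocessing.pool
    import shlex
    import subprocess
    import sys

    # curdir, argv and _commands are defined elsewhere in the script.

    def cmd2args(cmd):
        # On Windows the string is passed to the shell as-is;
        # elsewhere it is split into an argument list.
        if isinstance(cmd, basestring):
            return cmd if sys.platform == 'win32' else shlex.split(cmd)
        return cmd

    def logrun(cmd):
        popen = subprocess.Popen(cmd2args(cmd),
                                 stdout=subprocess.PIPE,
                                 stderr=subprocess.STDOUT,
                                 cwd=curdir,
                                 shell=sys.platform == 'win32')
        # Echo the child's combined stdout/stderr line by line.
        for line in iter(popen.stdout.readline, ""):
            sys.stdout.write(line)
            sys.stdout.flush()

    ...

    pool = multiprocessing.pool.ThreadPool(argv.jobcount)
    pool.map(logrun, _commands)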

argv.jobcount is the lesser of multiprocessing.cpu_count() and the number of jobs to run (4 in this case). _commands is a list of strings containing the commands listed below. shell is set to True on Windows so that the shell can locate the executables: Windows has no which command, and finding an executable is more involved there. (The commands used to have the form cd directory&&svn up .., which also required shell=True, but the directory change is now handled by the cwd parameter instead.)
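To make the setup concrete, here is a minimal, self-contained sketch of the same pattern, written for Python 3 (the code above is Python 2). It substitutes a harmless `python -c` child for `svn up` so it can be run anywhere. The names `pick_jobcount`, `run_and_log`, `run_all` and `demo_commands` are made up for this illustration and are not part of the real script. Unlike the original, it waits on each child and serializes writes to stdout; those are assumptions about what a cleaned-up version might do, not a known fix for the freeze.

```python
import multiprocessing
import multiprocessing.pool
import subprocess
import sys
import threading

# Hypothetical helper: keeps lines from different workers from interleaving.
_print_lock = threading.Lock()


def pick_jobcount(commands):
    """Lesser of the CPU count and the number of jobs, but at least 1."""
    return max(1, min(multiprocessing.cpu_count(), len(commands)))


def run_and_log(args):
    """Run one command (an argument list), echo its output, return its exit code."""
    popen = subprocess.Popen(args,
                             stdout=subprocess.PIPE,
                             stderr=subprocess.STDOUT,
                             universal_newlines=True)
    # In text mode readline() returns "" at end of file.
    for line in iter(popen.stdout.readline, ""):
        with _print_lock:
            sys.stdout.write(line)
            sys.stdout.flush()
    popen.stdout.close()
    return popen.wait()


def run_all(commands):
    """Run all commands on a thread pool and return their exit codes in order."""
    pool = multiprocessing.pool.ThreadPool(pick_jobcount(commands))
    try:
        return pool.map(run_and_log, commands)
    finally:
        pool.close()
        pool.join()


# Stand-in commands: each child just prints one line and exits.
demo_commands = [[sys.executable, "-c", "print('job %d done')" % i]
                 for i in range(4)]
```

Calling `run_all(demo_commands)` returns one exit code per command. If this harness runs cleanly on the affected machine, that would suggest the problem lies with the svn processes rather than with the ThreadPool/Popen combination itself.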

The commands being run are:

    svn up w:/srv/lib/dktabular
    svn up w:/srv/lib/dkmath
    svn up w:/srv/lib/dkforms
    svn up w:/srv/lib/dkorm

where each folder is a separate project/repository, but all of them are hosted on the same Subversion server. The svn executable is the one packaged with TortoiseSVN 1.8.8 (build 25755 - 64 Bit). The working copies are already up to date (i.e. svn up is a no-op).

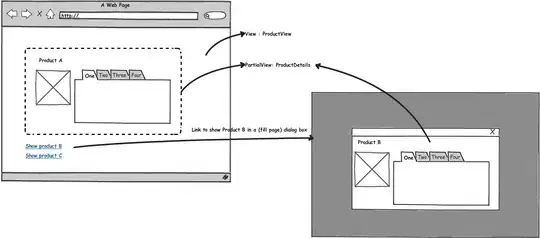

When the client freezes, the memory bar in Task Manager first goes blank:

and sometimes everything goes dark

If I wait for a while (several minutes) the machine eventually comes back.

Q1: Is it copacetic to invoke svn in parallel?

Q2: Are there any issues with how I'm using ThreadPool.map() and subprocess.Popen()?

Q3: Are there any tools/strategies for debugging these kinds of issues?