My goal is to create an interactive web visualization of data from motion tracking experiments.

The trajectories of the moving objects are rendered as points connected by lines. The visualization allows the user to pan and zoom the data.

My current prototype uses Processing.js because I am familiar with Processing, but I have run into performance problems when drawing more than 10,000 vertices or lines. I tried a couple of strategies for implementing pan and zoom. The current one, which I think is the best, saves the data as an SVG image and uses Processing.js's PShape type to load, draw, scale and translate it. A cleaned-up version of the code:

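```processing
/* @pjs preload="nanoparticle_trajs.svg"; */

PShape trajs;

// Pan offset and displayed size; updated by the mouse-event handlers (not shown)
float centerX = 0;
float centerY = 0;
float imgW, imgH;

void setup()
{
  size(900, 600);
  trajs = loadShape("nanoparticle_trajs.svg");
  // Start at the SVG's native size
  imgW = trajs.width;
  imgH = trajs.height;
}

// Called once per frame; redraws the whole shape at the current pan/zoom
void draw()
{
  background(255); // clear the previous frame so panning leaves no trails
  shape(trajs, centerX, centerY, imgW, imgH);
}

// ...additional functions that handle mouse events
```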
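The omitted mouse handlers essentially maintain a zoom factor and a pan offset. The sketch below is for illustration only. It shows that bookkeeping as a standalone Java class (Processing is built on Java), assuming a uniform zoom and a pan offset in screen pixels. `ViewTransform` and its members are hypothetical names, not part of Processing.js.

```java
/** Hypothetical pan/zoom state: screen = data * zoom + offset. */
final class ViewTransform {
    double zoom = 1.0;    // screen pixels per data unit
    double offsetX = 0.0; // pan, in screen pixels
    double offsetY = 0.0;

    double toScreenX(double dataX) { return dataX * zoom + offsetX; }
    double toScreenY(double dataY) { return dataY * zoom + offsetY; }

    double toDataX(double screenX) { return (screenX - offsetX) / zoom; }
    double toDataY(double screenY) { return (screenY - offsetY) / zoom; }

    /** Drag panning: shift the view by the mouse movement in pixels. */
    void pan(double dx, double dy) {
        offsetX += dx;
        offsetY += dy;
    }

    /**
     * Multiply the zoom by `factor` while keeping the data point under the
     * cursor (screen position sx, sy) fixed on screen.
     */
    void zoomAt(double sx, double sy, double factor) {
        double dataX = toDataX(sx);
        double dataY = toDataY(sy);
        zoom *= factor;
        offsetX = sx - dataX * zoom;
        offsetY = sy - dataY * zoom;
    }
}
```

This assumes the handlers work this way. In terms of the sketch's variables, `centerX`/`centerY` would play the role of `offsetX`/`offsetY`, and `imgW`/`imgH` would be `trajs.width * zoom` and `trajs.height * zoom`. Zooming about the cursor is the usual choice for scroll-wheel zoom because the point being inspected stays put.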

Perhaps I shouldn't expect snappy performance with so many data points, but are there general strategies for optimizing the display of complex SVG elements with Processing.js? What would I do if I wanted to display 100,000 vertices and lines? Should I abandon Processing altogether?
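One general strategy is viewport culling: draw only the segments that can fall inside the visible region, so zooming in reduces the number of draw calls. The prototype above does not do this. The sketch below assumes the trajectories are available as plain coordinate pairs rather than as a single SVG. `Segment` and `ViewportCuller` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical segment between two consecutive trajectory points, in data coordinates. */
final class Segment {
    final double x0, y0, x1, y1;

    Segment(double x0, double y0, double x1, double y1) {
        this.x0 = x0; this.y0 = y0; this.x1 = x1; this.y1 = y1;
    }
}

/** Sketch of viewport culling: keep only segments that might be visible. */
final class ViewportCuller {
    /**
     * Returns the segments whose bounding box overlaps the visible rectangle
     * [minX, maxX] x [minY, maxY]. The test is conservative: a segment near a
     * corner may pass without actually crossing the view, but no visible
     * segment is ever dropped.
     */
    static List<Segment> visible(List<Segment> all,
                                 double minX, double minY,
                                 double maxX, double maxY) {
        List<Segment> out = new ArrayList<>();
        for (Segment s : all) {
            boolean overlapsX = Math.max(s.x0, s.x1) >= minX && Math.min(s.x0, s.x1) <= maxX;
            boolean overlapsY = Math.max(s.y0, s.y1) >= minY && Math.min(s.y0, s.y1) <= maxY;
            if (overlapsX && overlapsY) {
                out.add(s);
            }
        }
        return out;
    }
}
```

The visible rectangle is the canvas corners mapped back into data coordinates. This loop still touches every segment each frame. At 100,000 segments, a spatial index such as a uniform grid would be the natural next step. Culling also does not help when the whole data set is in view.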

Thanks

EDIT:

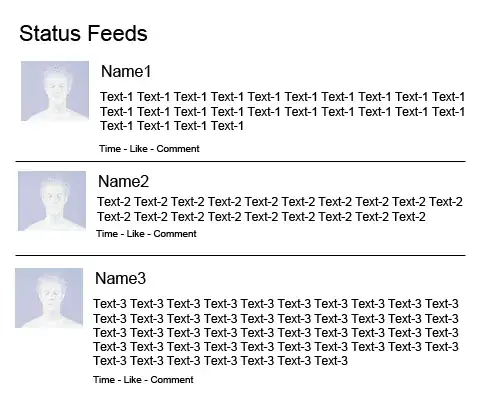

Upon reading the following answer, I thought an image would help convey the essence of the visualization:

It is essentially a scatter plot with >10,000 points and connecting lines. The user can pan and zoom the data and the scale bar in the upper-left dynamically updates according to the current zoom level.
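The dynamic scale bar can be driven by the zoom factor alone. This is a minimal sketch under some assumptions: zoom is expressed as screen pixels per data unit, and the bar should show a round length of 1, 2 or 5 times a power of ten. `ScaleBar.niceLength` is a hypothetical helper.

```java
/** Hypothetical helper: choose a round scale-bar length for the current zoom. */
final class ScaleBar {
    /**
     * Returns the largest length of the form {1, 2, 5} x 10^k data units whose
     * on-screen width does not exceed maxPixels. Assumes pixelsPerUnit > 0
     * and maxPixels > 0.
     */
    static double niceLength(double pixelsPerUnit, double maxPixels) {
        double maxUnits = maxPixels / pixelsPerUnit; // longest bar that fits
        double base = Math.pow(10, Math.floor(Math.log10(maxUnits)));
        double[] steps = {5, 2, 1};
        for (double s : steps) {
            if (s * base <= maxUnits) {
                return s * base;
            }
        }
        return base; // not reached: base <= maxUnits by construction
    }
}
```

The bar would then be drawn `niceLength(zoom, maxPixels) * zoom` pixels wide and labeled with `niceLength` in the data's units. For example, at 2 pixels per unit with a 150-pixel limit it picks 50 units, drawn 100 pixels wide.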