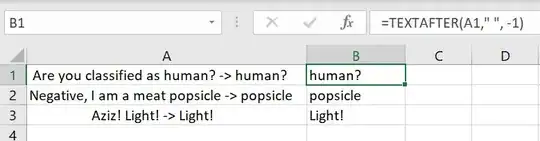

Hello, I have a depth image and I want to extract the silhouette of the person (human) from it. I used pixel thresholding, keeping only pixels whose depth lies between 1900 and 2400, like this:

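```matlab
% b: depth image (240x320); keep only pixels with depth in [1900, 2400]
c = zeros(size(b));              % preallocate the output image
for i = 1:size(b, 1)
    for j = 1:size(b, 2)
        if b(i,j) > 2400 || b(i,j) < 1900
            c(i,j) = 5000;       % out of range: set to background value
        else
            c(i,j) = b(i,j);     % in range: keep the original depth
        end
    end
end
```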

but some unwanted regions are still left in the result. Is there any way to remove them?
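For reference, the same thresholding can be written without loops using logical indexing. This is a minimal sketch with the same 1900 to 2400 range and fill value 5000; the small matrix `b` below is made up and only stands in for the real depth image.

```matlab
% Toy depth map standing in for the real 240x320 image b (values are made up)
b = [1800 2000 2500;
     2100 2400 1900];

inRange = (b >= 1900) & (b <= 2400);  % logical mask of pixels to keep
c = b;                                % start from the original depths
c(~inRange) = 5000;                   % push out-of-range pixels to 5000
```

This produces the same `c` as the nested loops, and is usually much faster in MATLAB.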

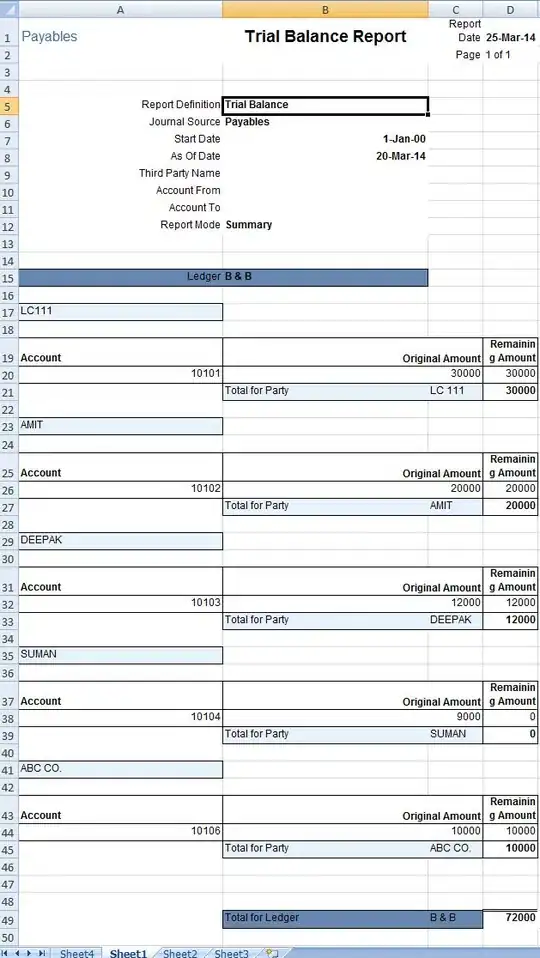

The first image above is the original depth image; the second is the extracted silhouette.
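One common way to drop leftover blobs after thresholding is connected-component labeling: keep only the largest connected region of in-range pixels, under the assumption that this region is the person. The sketch below uses `bwlabel` from the Image Processing Toolbox; the toy matrix is made up. Note that if a leftover region (for example the floor) touches the person, it ends up in the same component and this step alone will not remove it.

```matlab
% Toy depth map (made up): a 2x2 "person" blob plus one stray in-range pixel
b = [2000 2000 5000 5000;
     2000 2000 5000 2100;
     5000 5000 5000 5000];

mask = (b >= 1900) & (b <= 2400);     % same depth range as the loop above
[L, n] = bwlabel(mask, 8);            % label 8-connected regions (toolbox function)
if n > 0
    counts = accumarray(L(L > 0), 1); % number of pixels in each labeled region
    [~, biggest] = max(counts);       % assumption: the person is the largest region
    mask = (L == biggest);            % keep only that region
end

c = b;
c(~mask) = 5000;                      % everything else becomes background
```

Depending on the data, `imfill(mask, 'holes')` before the last step can also close small gaps inside the silhouette.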