This may be more of a bug report than a question, but: why does calling `predict` with `newdata` set to the training data sometimes give different predictions from calling `predict` without `newdata`, which uses the training data implicitly?

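```r
library(lme4)
packageVersion("lme4")  # 1.1.8

# myformula and X are my model formula and training data
m1 <- glmer(myformula, data = X, family = "binomial")

p1 <- predict(m1, type = "response")               # implicit: no newdata
p2 <- predict(m1, type = "response", newdata = X)  # explicit: same data as training

all(p1 == p2)  # FALSE
```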

This isn't just a floating-point rounding error: `cor(p1, p2)` returns about 0.8.

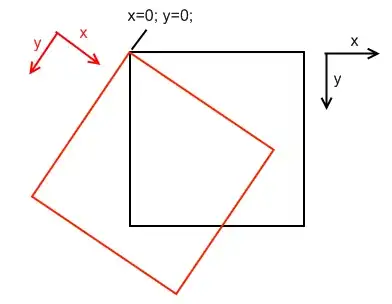

This seems to be limited to models with random slopes. In the following plot, "implicit" means `predict(..., type="response")` without `newdata`, and "explicit" means `predict(..., type="response", newdata=X)`, where `X` is the training data. The only difference between model 1 and the other models is that model 1 contains only random intercepts, while the other models have both random intercepts and random slopes.
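Since `myformula` and `X` aren't shown, here is a sketch of a self-contained check under stated assumptions: it substitutes the `cbpp` data that ships with lme4 and a hypothetical random-slope model on a numeric copy of `period` (`period_num` is an illustrative column, not part of the original data). It compares the implicit and explicit predictions with a tolerance rather than exact `==`. The fit may emit convergence or singularity warnings; the comparison still runs, and whether the two vectors agree may depend on the installed lme4 version.

```r
library(lme4)

# Hypothetical stand-in for the unspecified myformula and X:
# lme4's built-in cbpp data, with a numeric copy of period so the
# model can include a random slope per herd.
X <- cbpp
X$period_num <- as.numeric(X$period)

m2 <- glmer(cbind(incidence, size - incidence) ~ period_num + (1 + period_num | herd),
            data = X, family = binomial)

p_implicit <- predict(m2, type = "response")               # no newdata
p_explicit <- predict(m2, type = "response", newdata = X)  # training data passed explicitly

# Tolerance-based comparison instead of exact equality
print(all.equal(unname(p_implicit), unname(p_explicit)))
print(cor(p_implicit, p_explicit))
print(max(abs(p_implicit - p_explicit)))
```

If the same comparison on a random-intercept-only version of the model agrees while this one does not, that would match the pattern described above.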