I have been trying to implement a back-propagation neural network for a while now, and I keep running into issues. So far, my neural network works fine for XOR, AND and OR.

The following image shows the training of my neural network for XOR over 100000 iterations, and it seems to converge well. For this I had 2 input neurons and a single output neuron, with one hidden layer of 2 neurons (though I think 1 might have been sufficient).
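To make the 2-2-1 structure concrete, here is a minimal sketch of the forward pass in Python. It is not my actual code: the weights are hand-picked for illustration rather than learned by back-propagation, and the names `sigmoid`, `forward`, `HIDDEN` and `OUTPUT` are hypothetical. It only shows that a network of this shape can represent XOR.

```python
import math

def sigmoid(x):
    """Logistic activation, assumed here for every neuron."""
    return 1.0 / (1.0 + math.exp(-x))

# Hand-picked (NOT trained) weights, each row is (w_x1, w_x2, bias).
# Hidden neuron 0 behaves roughly like OR, hidden neuron 1 like NAND.
HIDDEN = [(20.0, 20.0, -10.0), (-20.0, -20.0, 30.0)]
# The output neuron behaves roughly like AND of the two hidden neurons.
OUTPUT = (20.0, 20.0, -30.0)

def forward(x1, x2):
    """Forward pass through the 2-2-1 network for one input pair."""
    h = [sigmoid(w1 * x1 + w2 * x2 + b) for (w1, w2, b) in HIDDEN]
    w1, w2, b = OUTPUT
    return sigmoid(w1 * h[0] + w2 * h[1] + b)

if __name__ == "__main__":
    for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(x1, x2, round(forward(x1, x2)))
```

Rounding the output to 0 or 1 recovers the XOR truth table for these particular weights; a trained network would end up with different values.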

Moving on, I trained the same network to distinguish coordinates in the XY plane into two classes, with the same structure of 2 input neurons, 1 output neuron and a single hidden layer with two neurons:

Next, I trained it for two classes only, but with 2 output neurons and the rest of the structure kept the same. It took longer to converge this time, but it did.

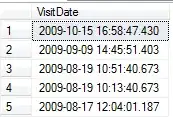

But then I increased to three classes: class A is encoded as 100, class B as 010 and class C as 001. Now when I train it, it never converges, and it gives me the following result for the data shown below:

It never seems to converge. I have also observed a pattern: if I increase the number of neurons in my output layer, the error rate increases significantly. Can anyone point out where I am going wrong?
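For reference, the three-class target encoding (A as 100, B as 010, C as 001) can be sketched as below. This is an illustration only, not my actual code: the helper names `one_hot` and `decode` are hypothetical, and reading the predicted class off the largest output is an assumption about how the three outputs would be interpreted.

```python
CLASSES = ["A", "B", "C"]

def one_hot(label, classes=CLASSES):
    """Target vector for one sample: 1 at the class position, 0 elsewhere."""
    if label not in classes:
        raise ValueError(f"unknown class: {label!r}")
    return [1 if label == c else 0 for c in classes]

def decode(outputs, classes=CLASSES):
    """Map the output activations back to a class by taking the largest one.

    Assumption: the predicted class is the output neuron with the highest
    activation, rather than an exact match against the 0/1 target pattern.
    """
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return classes[best]

if __name__ == "__main__":
    for label in CLASSES:
        print(label, one_hot(label))
    print(decode([0.1, 0.7, 0.2]))
```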