Unless I'm missing something, it seems that none of the APIs I've looked at will tell you how many objects are in an <S3 bucket>/<folder>. Is there any way to get a count?

-

This question might be helpful: http://stackoverflow.com/questions/701545/how-do-i-delete-count-objects-in-a-s3-bucket – Brendan Long May 19 '10 at 03:20

-

A solution does exist now (2015): http://stackoverflow.com/a/32908591/578989 – Mayank Jaiswal Nov 27 '15 at 17:04

-

See my answer below: http://stackoverflow.com/a/39111698/996926 – advncd Aug 23 '16 at 22:39

-

2017 Answer: https://stackoverflow.com/a/42927268/4875295 – cameck Jun 14 '17 at 18:06

-

2020 answer: https://stackoverflow.com/a/64486330/8718377 – veben Nov 02 '20 at 09:18

-

How about S3 storage class analytics - You get APIs as well as on console - https://docs.aws.amazon.com/AmazonS3/latest/dev/analytics-storage-class.html – Prabhat Jun 12 '18 at 00:35

34 Answers

Using AWS CLI

aws s3 ls s3://mybucket/ --recursive | wc -l

or

aws cloudwatch get-metric-statistics \

--namespace AWS/S3 --metric-name NumberOfObjects \

--dimensions Name=BucketName,Value=BUCKETNAME \

Name=StorageType,Value=AllStorageTypes \

--start-time 2016-11-05T00:00 --end-time 2016-11-05T00:10 \

--period 60 --statistics Average

Note: The above cloudwatch command seems to work for some while not for others. Discussed here: https://forums.aws.amazon.com/thread.jspa?threadID=217050

Using AWS Web Console

You can look at CloudWatch's metrics section to get the approximate number of objects stored.

I have approximately 50 million products, and counting them with aws s3 ls took more than an hour.

-

@JosephMCasey I agree. This also works to give the number of objects in a directory within a bucket, like this: `aws s3 ls s3://mybucket/mydirectory/ --recursive | wc -l` – tim peterson May 16 '16 at 12:38

-

I get this error when I run the above in cmd prompt: 'wc' is not recognized as an internal or external command, operable program or batch file. I'm pretty new to this, so can someone give a solution? – Sandun Dec 18 '18 at 16:02

-

A warning that CloudWatch does not seem very reliable. I have an mp4 file in one bucket that CloudWatch (NumberOfObjects metric) counts as 2,300 separate objects. I got the correct number with AWS CLI. – AlexK Mar 04 '19 at 22:14

-

All CloudWatch statistics are retained for a period of 15 months only. https://docs.aws.amazon.com/AmazonS3/latest/dev/cloudwatch-monitoring.html – Jugal Panchal Jun 19 '20 at 20:52

-

This is really useful for counting the number of objects in a directory as well: `ls dir | wc -l` – George Ogden Mar 20 '21 at 15:36

-

Aren't all these methods using listObjectsV2 under the hood? listObjectsV2 returns 1000 keys per query. Even at 10 queries/second, 50M objects would take ~1 hr 23 min with this approach (similar to what Mayank got). – Rahul Kadukar Jun 10 '22 at 13:08

-

If you have thousands of millions of objects, this is the only way. Forget about `ls`, which would take forever. – istepaniuk Aug 09 '22 at 12:53

There is a --summarize switch that shows bucket summary information (i.e. number of objects, total size).

Here's the correct answer using AWS cli:

aws s3 ls s3://bucketName/path/ --recursive --summarize | grep "Total Objects:"

Total Objects: 194273

See the documentation

-

This is great: `Total Objects: 7235` `Total Size: 475566411749` -- so easy. – bonh Aug 16 '17 at 04:35

-

Still has the major overhead of listing the thousands of objects, unfortunately (currently at 600,000 plus, so this is quite time-consuming) – MichaelChirico Jan 22 '18 at 06:50

-

This answer is extremely inefficient, potentially very slow and costly. It iterates over the entire bucket to find the answer. – weaver Oct 07 '19 at 23:17

-

And might I add that iterating over 1.2 billion objects stored in standard storage can cost about $6000. – C.J. Jan 24 '20 at 21:50

-

Er, it's $0.005 per 1000 LIST requests, and each page of results is 1 request; the CLI tool (and API) defaults to, and is limited to, 1000 results per request. So (1200000000/1000/1000)*0.005 = $6. Other storage tiers cost more per 1k requests, of course. AFAIK the charge isn't per object searched/returned, but per actual API request. – keen Dec 22 '20 at 20:56

-

@CJohnson can you please provide the calculation on which the given $6000 is based? – rexford Apr 22 '22 at 08:59
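
The per-request arithmetic discussed in these comments can be sketched in a few lines of Python. This is only a rough estimate under stated assumptions: the function name is hypothetical, the $0.005 per 1000 LIST requests price and the 1000-keys-per-request page size are the figures quoted in the comments (actual pricing varies by region and storage class), and the 10 requests/second rate is the illustrative figure from an earlier comment, not a measured value.

```python
import math


def list_request_estimate(num_objects, keys_per_request=1000,
                          usd_per_1000_requests=0.005,
                          requests_per_second=10.0):
    """Return (requests, cost_usd, seconds) for listing num_objects keys.

    All defaults are assumptions taken from the discussion, not official values.
    """
    # Each LIST call returns at most keys_per_request keys.
    requests = math.ceil(num_objects / keys_per_request)
    # Billing is per request, priced per block of 1000 requests.
    cost_usd = requests / 1000 * usd_per_1000_requests
    # Wall-clock time if requests are issued sequentially at a fixed rate.
    seconds = requests / requests_per_second
    return requests, cost_usd, seconds


if __name__ == "__main__":
    requests, cost_usd, seconds = list_request_estimate(1_200_000_000)
    print(requests, "requests,", round(cost_usd, 2), "USD,",
          round(seconds / 3600, 1), "hours")
```

The point of the sketch is that cost scales with the number of pages requested, not with the number of objects returned, while elapsed time scales with how fast pages can be fetched.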

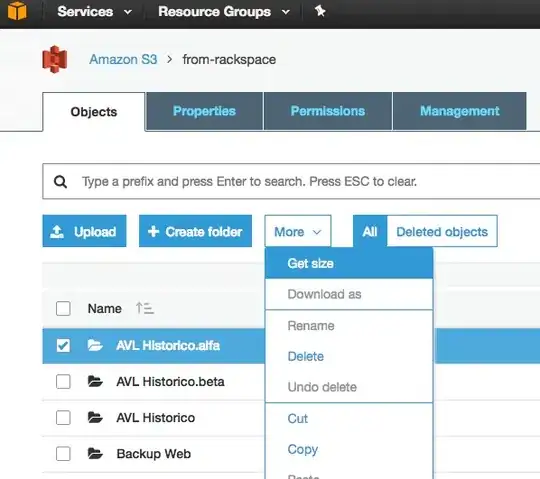

Although this is an old question, and feedback was provided in 2015, right now it's much simpler, as S3 Web Console has enabled a "Get Size" option:

Which provides the following:

-

Yes, the new AWS Console, although it hurts my eyes, does make calculating the number of objects and total size available at a button's click. – Dev1ce Apr 07 '17 at 08:52

-

This appears to only work at the folder level. "Get size" is not available at the bucket level – G-. Jun 21 '17 at 09:29

-

@gvasquez, yes, that works. Thanks. One can use the select all box next to "Name" above the list of files / folders – G-. Mar 01 '18 at 11:13

-

@G-. However, the "select all" box only selects the folders and objects that are shown on the page, not all the folders/objects of the bucket. – gparis Jun 01 '18 at 11:41

-

@gparis good point. Well worth noting. Thanks. So it appears that in the console, we don't have an option if there are more files or folders than can be displayed on a single page – G-. Jun 18 '18 at 12:02

-

@G-. Yup..seems like we cannot count the number of files in a bucket. – Niklas Rosencrantz Sep 03 '18 at 12:50

-

It doesn't give separate counts for files and folders, which is very important – Vishal Zanzrukia Sep 20 '18 at 07:11

-

There is an easy solution with the S3 API now (available in the AWS cli):

aws s3api list-objects --bucket BUCKETNAME --output json --query "[length(Contents[])]"

or for a specific folder:

aws s3api list-objects --bucket BUCKETNAME --prefix "folder/subfolder/" --output json --query "[length(Contents[])]"
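
The same count can be sketched in Python. This is a minimal sketch, not the answer's method: the helper name `count_objects` is hypothetical, and it assumes the pages come from boto3's `list_objects_v2` paginator, where a page for an empty or nonexistent prefix simply has no `Contents` entry. Treating a missing `Contents` as an empty list makes the count come out as 0 instead of failing.

```python
from typing import Any, Iterable, Mapping


def count_objects(pages: Iterable[Mapping[str, Any]]) -> int:
    """Sum the number of keys across list-objects result pages."""
    # "Contents" is absent when nothing matches the prefix,
    # so default to an empty list rather than raising.
    return sum(len(page.get("Contents", [])) for page in pages)


# Usage with boto3 (needs credentials; bucket and prefix are placeholders):
#   import boto3
#   paginator = boto3.client("s3").get_paginator("list_objects_v2")
#   pages = paginator.paginate(Bucket="BUCKETNAME", Prefix="folder/subfolder/")
#   print(count_objects(pages))
```

Every page is still one LIST request, so this is no cheaper than the CLI commands above; it only avoids holding the whole listing in memory.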

-

Raphael, your folder query works great, except when the folder is empty or doesn't exist; then you get: **In function length(), invalid type for value: None, expected one of: ['string', 'array', 'object'], received: "null"** Is there a way to just make it output 0 if the folder is empty or doesn't exist? – user3591836 Nov 23 '15 at 10:49

-

I get `Illegal token value '(Contents[])]'` (version 1.2.9 of aws-cli), when just using `--bucket my-bucket` and `A client error (NoSuchBucket) occurred when calling the ListObjects operation: The specified bucket does not exist` when using `--bucket s3://my-bucket`. (It definitely exists, and has 1000+ files.) – Darren Cook Feb 01 '16 at 15:55

-

@DarrenCook remove s3:// from the bucket name. The client doesn't seem to support the protocol. – Sriranjan Manjunath Mar 30 '16 at 21:55

-

This is much faster than the wc -l examples. With my buckets it would count roughly 3-4k objects/sec. So ~5mins per million objects. "Get Size" in the S3 web GUI likely uses something similar under the hood as it takes roughly the same time. – notpeter May 20 '17 at 00:16

-

For __VERY__ large buckets, this was the only solution that worked for me within a reasonable time frame (less than 20 minutes) – Nick Sarafa Jun 26 '17 at 06:54

-

This is interesting, and it's worth noting that even though the `s3api list-objects` command should limit itself to a page size of 1000, this limit is not in effect when you perform this query. Multiple calls are issued and the total number of objects is returned. – dz902 Jul 27 '21 at 02:25

-

If you use the s3cmd command-line tool, you can get a recursive listing of a particular bucket, outputting it to a text file.

s3cmd ls -r s3://logs.mybucket/subfolder/ > listing.txt

Then in linux you can run a wc -l on the file to count the lines (1 line per object).

wc -l listing.txt

-

The `-r` in the command is for `--recursive`, so it should work for sub-folders as well. – Deepak Joy Cheenath Sep 28 '15 at 05:36

-

3 notes on this. a.) You should use `aws s3 ls` rather than s3cmd because it's faster. b.) For large buckets it can take a long time. Took about 5 min for 1 million files. c.) See my answer below about using cloudwatch. – mastaBlasta Feb 04 '16 at 17:49

-

There is no way, unless you:

- list them all in batches of 1000 (which can be slow and consume bandwidth, since Amazon never seems to compress the XML responses), or

- log into your account on S3 and go to Account - Usage. It seems the billing department knows exactly how many objects you have stored!

Simply downloading the list of all your objects will actually take some time and cost some money if you have 50 million objects stored.

Also see this thread about StorageObjectCount - which is in the usage data.

An S3 API to get at least the basics, even if it was hours old, would be great.

-

Sorry: http://developer.amazonwebservices.com/connect/thread.jspa?messageID=164220&#164220 – Tom Andersen Jun 02 '10 at 15:27

-

If you're lazy like me, [Newvem](http://www.newvem.com/) basically does this on your behalf and aggregates/tracks the results on a per-bucket level across your S3 account. – rcoup Oct 10 '12 at 20:32

-

Could you update your response to include @MayankJaiswal's response? – Joseph Casey Feb 18 '16 at 22:57

-

The billing dept knows all! No wonder they have the power to take down S3 east coast by accident – ski_squaw Aug 14 '17 at 19:16

-

The only real answer, without doing something ridiculous like listing 1m+ keys. I forgot it was there. – Andrew Jul 29 '16 at 10:38

-

This needs more upvotes. Every other solution scales poorly in terms of cost and time. – Aaron R. May 10 '19 at 20:32

-

However, please note that "This value is calculated by counting all objects in the bucket (both current and noncurrent objects) and the total number of parts for all incomplete multipart uploads to the bucket." So it will count old versions as well. – dan May 26 '21 at 19:28

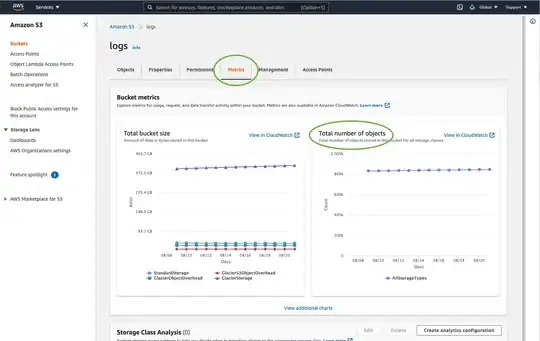

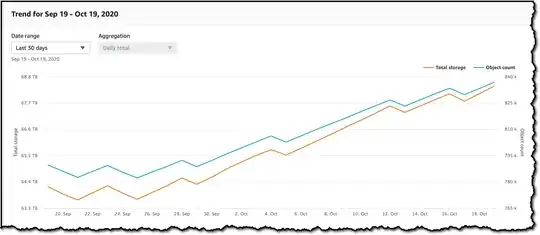

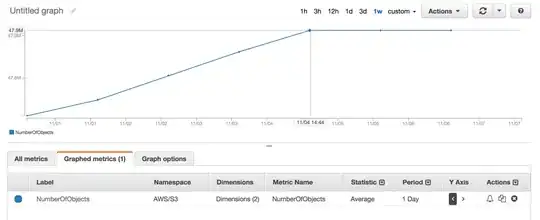

2020/10/22

With AWS Console

Look at Metrics tab on your bucket

or:

Look at AWS Cloudwatch's metrics

With AWS CLI

Number of objects:

or:

aws s3api list-objects --bucket <BUCKET_NAME> --prefix "<FOLDER_NAME>" | wc -l

or:

aws s3 ls s3://<BUCKET_NAME>/<FOLDER_NAME>/ --recursive --summarize --human-readable | grep "Total Objects"

or with s4cmd:

s4cmd ls -r s3://<BUCKET_NAME>/<FOLDER_NAME>/ | wc -l

Objects size:

aws s3api list-objects --bucket <BUCKET_NAME> --output json --query "[sum(Contents[].Size), length(Contents[])]" | awk 'NR!=2 {print $0;next} NR==2 {print $0/1024/1024/1024" GB"}'

or:

aws s3 ls s3://<BUCKET_NAME>/<FOLDER_NAME>/ --recursive --summarize --human-readable | grep "Total Size"

or with s4cmd:

s4cmd du s3://<BUCKET_NAME>

or with CloudWatch metrics:

aws cloudwatch get-metric-statistics --metric-name BucketSizeBytes --namespace AWS/S3 --start-time 2020-10-20T16:00:00Z --end-time 2020-10-22T17:00:00Z --period 3600 --statistics Average --unit Bytes --dimensions Name=BucketName,Value=<BUCKET_NAME> Name=StorageType,Value=StandardStorage --output json | grep "Average"

-

For the number of objects, the `aws s3 ls` solution worked great for me, but the previous solution returned a much higher number, because each object returned by `aws s3api list-objects` is represented as a JSON object spanning 10 lines. For the record, I'm using aws-cli 2.3.2 – TanguyP Nov 17 '21 at 16:00

2021 Answer

This information is now surfaced in the AWS dashboard. Simply navigate to the bucket and click the Metrics tab.

-

This info might not be up-to-date. Metrics have significant delay – Viktor Molokostov Feb 27 '23 at 10:52

If you are using AWS CLI on Windows, you can use the Measure-Object from PowerShell to get the total counts of files, just like wc -l on *nix.

PS C:\> aws s3 ls s3://mybucket/ --recursive | Measure-Object

Count : 25

Average :

Sum :

Maximum :

Minimum :

Property :

Hope it helps.

Go to AWS Billing, then reports, then AWS Usage reports. Select Amazon Simple Storage Service, then Operation StandardStorage. Then you can download a CSV file that includes a UsageType of StorageObjectCount that lists the item count for each bucket.

From the command line in AWS CLI, use ls plus --summarize. It will give you the list of all of your items and the total number of documents in a particular bucket. I have not tried this with buckets containing sub-folders:

aws s3 ls "s3://MyBucket" --summarize

It may take a while (listing my 16K+ documents took about 4 minutes), but it's faster than counting 1K at a time.

You can easily get the total count and the history if you go to the s3 console "Management" tab and then click on "Metrics"... Screen shot of the tab

-

Could you update the picture to show the `NumberOfObjects (count/day)` chart? It would be better since it's directly related to the question. In your screenshot, you are showing the `BucketSizeBytes (bytes/day)` which, while useful, is not directly related to the issue. – guzmonne Jul 24 '19 at 19:21

-

As of 2019, this should now be the accepted answer. All the rest are outdated or slow. – C.J. Jan 21 '20 at 20:09

One of the simplest ways to count number of objects in s3 is:

Step 1: Select root folder

Step 2: Click on Actions -> Delete (obviously, be careful - don't delete it)

Step 3: Wait a few minutes; AWS will show you the number of objects and their total size.

-

Nice hack, but there is now an action in the console called "Get Size" which also gives you the number of objects. – Eli Algranti Jul 30 '20 at 06:15

-

@EliAlgranti where is this option exactly? Does it show the number of files, or the total size in kbs? – nclsvh Apr 08 '21 at 10:46

As of November 18, 2020 there is now an easier way to get this information without taxing your API requests:

The default, built-in, free dashboard allows you to see the count for all buckets, or individual buckets under the "Buckets" tab. There are many drop downs to filter and sort almost any reasonable metric you would look for.

With s3cmd, simply run the following command (on an Ubuntu system):

s3cmd ls -r s3://mybucket | wc -l

-

Why did you resurrect a 5-year-old question to post a poorly formatted copy of [an existing answer](http://stackoverflow.com/a/14026892/2588818)? – Two-Bit Alchemist Feb 24 '15 at 21:29

-

IMO this should be a comment on that answer, then. This is a really trivial difference. – Two-Bit Alchemist Feb 25 '15 at 23:22

-

Seems like a worthy answer, especially since the selected answer for this question starts with 'There is no way...' and @mjsa has provided a one-line answer. – Nan Sep 09 '15 at 17:39

-

This is not a good answer because it doesn't take into account versioned objects. – 3h4x Apr 17 '19 at 18:14

None of the APIs will give you a count because there really isn't any Amazon specific API to do that. You have to just run a list-contents and count the number of results that are returned.

-

For some reason, the ruby libs (right_aws/appoxy_aws) won't list more than the first 1000 objects in a bucket. Are there others that will list all of the objects? – fields May 19 '10 at 12:39

-

When you request the list, they provide a "NextToken" field, which you can use to send the request again with the token, and it will list more. – Mitch Dempsey May 19 '10 at 17:58

The api will return the list in increments of 1000. Check the IsTruncated property to see if there are still more. If there are, you need to make another call and pass the last key that you got as the Marker property on the next call. You would then continue to loop like this until IsTruncated is false.

See this Amazon doc for more info: Iterating Through Multi-Page Results
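
The loop described above can be sketched in Python as follows. It is a sketch under assumptions: the function name is hypothetical, and `client` is expected to behave like a boto3 S3 client, whose `list_objects` call accepts `Bucket` and `Marker` and returns a dict with `Contents` and `IsTruncated`.

```python
def count_with_marker(client, bucket):
    """Count keys by following IsTruncated and passing the last key as Marker."""
    count = 0
    marker = None
    while True:
        kwargs = {"Bucket": bucket}
        if marker is not None:
            # Resume the listing just after the last key already seen.
            kwargs["Marker"] = marker
        response = client.list_objects(**kwargs)
        contents = response.get("Contents", [])
        count += len(contents)
        # Stop when the service reports no more pages (or a page is empty).
        if not response.get("IsTruncated") or not contents:
            return count
        marker = contents[-1]["Key"]


# Usage with boto3 (needs credentials; the bucket name is a placeholder):
#   import boto3
#   print(count_with_marker(boto3.client("s3"), "BUCKET_NAME"))
```

Higher-level helpers such as SDK paginators wrap this same loop, so the number of requests is unchanged.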

You can just execute this cli command to get the total file count in the bucket or a specific folder

Scan whole bucket

aws s3api list-objects-v2 --bucket testbucket | grep "Key" | wc -l

aws s3api list-objects-v2 --bucket BUCKET_NAME | grep "Key" | wc -l

You can use this command to see the full details:

aws s3api list-objects-v2 --bucket BUCKET_NAME

Scan a specific folder

aws s3api list-objects-v2 --bucket testbucket --prefix testfolder --start-after testfolder/ | grep "Key" | wc -l

aws s3api list-objects-v2 --bucket BUCKET_NAME --prefix FOLDER_NAME --start-after FOLDER_NAME/ | grep "Key" | wc -l

Select the bucket/Folder-> Click on actions -> Click on Calculate Total Size

Old thread, but still relevant as I was looking for the answer until I just figured this out. I wanted a file count using a GUI-based tool (i.e. no code). I happen to already use a tool called 3Hub for drag & drop transfers to and from S3. I wanted to know how many files I had in a particular bucket (I don't think billing breaks it down by buckets).

So, using 3Hub,

- list the contents of the bucket (looks basically like a finder or explorer window)

- go to the bottom of the list, click 'show all'

- select all (ctrl+a)

- choose copy URLs from right-click menu

- paste the list into a text file (I use TextWrangler for Mac)

- look at the line count

I had 20521 files in the bucket and did the file count in less than a minute.

I used the python script from scalablelogic.com (adding in the count logging). Worked great.

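#!/usr/local/bin/python
# Requires the legacy boto (v2) library, not boto3.
import sys

from boto.s3.connection import S3Connection

# The bucket name is the first command-line argument.
s3bucket = S3Connection().get_bucket(sys.argv[1])
size = 0
totalCount = 0

# list() pages through every key in the bucket.
for key in s3bucket.list():
    totalCount += 1
    size += key.size

print('total size:')
print("%.3f GB" % (size*1.0/1024/1024/1024))
print('total count:')
print(totalCount)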

-

Just so you're aware, this doesn't work with boto3. I contributed a suggestion below as a different answer. – fuzzygroup Oct 24 '19 at 11:18

The issue @Mayank Jaiswal mentioned about using cloudwatch metrics should not actually be an issue. If you aren't getting results, your range just might not be wide enough. It's currently Nov 3, and I wasn't getting results no matter what I tried. I went to the s3 bucket and looked at the counts and the last record for the "Total number of objects" count was Nov 1.

So here is what the CloudWatch solution looks like using the JavaScript aws-sdk:

import aws from 'aws-sdk';
import { startOfMonth } from 'date-fns';

const region = 'us-east-1';
const profile = 'default';
const credentials = new aws.SharedIniFileCredentials({ profile });
aws.config.update({ region, credentials });

export const main = async () => {
  const cw = new aws.CloudWatch();
  const bucket_name = 'MY_BUCKET_NAME';
  const end = new Date();
  const start = startOfMonth(end);

  const results = await cw
    .getMetricStatistics({
      // @ts-ignore
      Namespace: 'AWS/S3',
      MetricName: 'NumberOfObjects',
      Period: 3600 * 24,
      StartTime: start.toISOString(),
      EndTime: end.toISOString(),
      Statistics: ['Average'],
      Dimensions: [
        { Name: 'BucketName', Value: bucket_name },
        { Name: 'StorageType', Value: 'AllStorageTypes' },
      ],
      Unit: 'Count',
    })
    .promise();

  console.log({ results });
};

main()
  .then(() => console.log('Done.'))
  .catch((err) => console.error(err));

Notice two things:

- The start of the range is set to the beginning of the month

- The period is set to a day. Any less and you might get an error saying that you have requested too many data points.
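
For comparison, the same request parameters can be built in Python. This is a hedged sketch: the function name is hypothetical, and it assumes the resulting dict is passed to boto3's CloudWatch `get_metric_statistics` call, which takes these keyword arguments. It mirrors the two points above: the range starts at the beginning of the month and the period is one day.

```python
from datetime import datetime, timezone


def number_of_objects_params(bucket_name, now=None):
    """Build get_metric_statistics arguments for the S3 NumberOfObjects metric."""
    end = now or datetime.now(timezone.utc)
    # Start the range at the beginning of the current month.
    start = end.replace(day=1, hour=0, minute=0, second=0, microsecond=0)
    return {
        "Namespace": "AWS/S3",
        "MetricName": "NumberOfObjects",
        "Dimensions": [
            {"Name": "BucketName", "Value": bucket_name},
            {"Name": "StorageType", "Value": "AllStorageTypes"},
        ],
        "StartTime": start,
        "EndTime": end,
        "Period": 24 * 3600,  # one data point per day
        "Statistics": ["Average"],
        "Unit": "Count",
    }


# Usage with boto3 (needs credentials; the bucket name is a placeholder):
#   import boto3
#   cw = boto3.client("cloudwatch", region_name="us-east-1")
#   print(cw.get_metric_statistics(**number_of_objects_params("MY_BUCKET_NAME")))
```

Early in a month the range may contain few or no data points, so widening the start date is a reasonable adjustment if the result comes back empty.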

Here's the boto3 version of the python script embedded above.

import sys

import boto3

s3 = boto3.resource("s3")

# The bucket name is the first command-line argument.
s3bucket = s3.Bucket(sys.argv[1])
size = 0
totalCount = 0

# objects.all() pages through every object in the bucket.
for key in s3bucket.objects.all():
    totalCount += 1
    size += key.size

print("total size:")
print("%.3f GB" % (size * 1.0 / 1024 / 1024 / 1024))
print("total count:")
print(totalCount)

aws s3 ls s3://bucket-name/folder-prefix-if-any --recursive | wc -l

-

The lines don't directly correspond to number of files. Because they also have an entire line just for the date and directory. – CMCDragonkai Jun 15 '20 at 01:43

-

The command works for a limited number of files. In my case, the files count is more than a million and it never gives any result. But it is a good option for limited files. – Jugal Panchal Jun 19 '20 at 19:19

You can use the command below on the command line, replacing the bucket path placeholders (it uses the default profile; add --profile {aws_profile} to use another):

aws s3 ls s3://{bucket}/{folder} --recursive --no-paginate --summarize

The point is that you need the --summarize option so that it prints both the total size and the number of objects at the end. Also, don't forget to disable pagination with --no-paginate, since you want this calculation for the whole bucket/folder.

3Hub is discontinued. There's a better solution, you can use Transmit (Mac only), then you just connect to your bucket and choose Show Item Count from the View menu.

-

Transmit unfortunately only shows up to 1000 items (and the Item Count therefore also maxes out at 1000) – Tino Oct 11 '16 at 07:52

You can download and install s3 browser from http://s3browser.com/. When you select a bucket in the center right corner you can see the number of files in the bucket. But, the size it shows is incorrect in the current version.

Gubs

You can potentially use Amazon S3 inventory that will give you list of objects in a csv file

Can also be done with gsutil du (Yes, a Google Cloud tool)

gsutil du s3://mybucket/ | wc -l

If you're looking for specific files, let's say .jpg images, you can do the following:

aws s3 ls s3://your_bucket | grep jpg | wc -l
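
Note that `grep jpg` matches the text anywhere in the line, so a key such as `notes/jpg-list.txt` would also be counted. A stricter filter on the key's suffix can be sketched in Python. The function name is hypothetical, and the sketch assumes the usual `aws s3 ls` line layout of date, time, size, then key, with `PRE` lines for prefixes.

```python
def count_keys_with_suffix(listing_lines, suffix):
    """Count `aws s3 ls` output lines whose key ends with suffix (case-insensitive)."""
    suffix = suffix.lower()
    count = 0
    for line in listing_lines:
        # Object lines have four fields: date, time, size, key.
        # Keys may contain spaces, so split at most three times.
        parts = line.split(None, 3)
        if len(parts) != 4:
            continue  # skips "PRE folder/" lines and blank lines
        key = parts[3].rstrip("\r\n")
        if key.lower().endswith(suffix):
            count += 1
    return count


# Usage, reading the listing from standard input:
#   aws s3 ls s3://your_bucket --recursive | python count_jpg.py
# where count_jpg.py (a hypothetical script name) ends with:
#   import sys
#   print(count_keys_with_suffix(sys.stdin, ".jpg"))
```

Without `--recursive`, the listing only covers the top level of the bucket, so the count would exclude objects under prefixes.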

Following is how you can do it using java client.

<dependency>
    <groupId>com.amazonaws</groupId>
    <artifactId>aws-java-sdk-s3</artifactId>
    <version>1.11.519</version>
</dependency>

import com.amazonaws.ClientConfiguration;
import com.amazonaws.Protocol;
import com.amazonaws.auth.AWSStaticCredentialsProvider;
import com.amazonaws.auth.BasicAWSCredentials;
import com.amazonaws.services.s3.AmazonS3;
import com.amazonaws.services.s3.AmazonS3ClientBuilder;
import com.amazonaws.services.s3.model.ObjectListing;

public class AmazonS3Service {

    private static final String S3_ACCESS_KEY_ID = "ACCESS_KEY";
    private static final String S3_SECRET_KEY = "SECRET_KEY";
    private static final String S3_ENDPOINT = "S3_URL";

    private AmazonS3 amazonS3;

    public AmazonS3Service() {
        ClientConfiguration clientConfiguration = new ClientConfiguration();
        clientConfiguration.setProtocol(Protocol.HTTPS);
        clientConfiguration.setSignerOverride("S3SignerType");
        BasicAWSCredentials credentials = new BasicAWSCredentials(S3_ACCESS_KEY_ID, S3_SECRET_KEY);
        AWSStaticCredentialsProvider credentialsProvider = new AWSStaticCredentialsProvider(credentials);
        AmazonS3ClientBuilder.EndpointConfiguration endpointConfiguration = new AmazonS3ClientBuilder.EndpointConfiguration(S3_ENDPOINT, null);
        amazonS3 = AmazonS3ClientBuilder.standard().withCredentials(credentialsProvider).withClientConfiguration(clientConfiguration)
                .withPathStyleAccessEnabled(true).withEndpointConfiguration(endpointConfiguration).build();
    }

    public int countObjects(String bucketName) {
        int count = 0;
        ObjectListing objectListing = amazonS3.listObjects(bucketName);
        int currentBatchCount = objectListing.getObjectSummaries().size();
        while (currentBatchCount != 0) {
            count += currentBatchCount;
            objectListing = amazonS3.listNextBatchOfObjects(objectListing);
            currentBatchCount = objectListing.getObjectSummaries().size();
        }
        return count;
    }
}

With AWS CLI updates and CloudWatch changes the CLI syntax that works for me (as of April 2023) is:

aws --profile <PROFILE> cloudwatch get-metric-statistics --namespace AWS/S3 \
  --metric-name NumberOfObjects \
  --dimensions Name=BucketName,Value=<BUCKET_NAME> Name=StorageType,Value=AllStorageTypes \
  --start-time <START_TIME> --end-time <END_TIME> --period 86400 --statistics Average

Since the S3 stats are 24-hour data points, you have to use start and end times that are days apart, and the period is 86400. You can pull a series of data, but CloudWatch returns it in no particular order, so add

--query 'sort_by(Datapoints, &Timestamp)'

to the end of the command to get the results sorted by time.

aws --profile <PROFILE> cloudwatch get-metric-statistics --namespace AWS/S3 --metric-name NumberOfObjects \
  --dimensions Name=BucketName,Value=<BUCKET_NAME> Name=StorageType,Value=AllStorageTypes \
  --start-time <START_TIME> --end-time <END_TIME> --period 86400 --statistics Average --query 'sort_by(Datapoints, &Timestamp)'
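
If the output is processed in Python rather than with `--query`, the same ordering step can be sketched as below. The helper name is hypothetical; it assumes a list of datapoints shaped like the `Datapoints` field of the response, each with a `Timestamp` and an `Average`.

```python
def latest_object_count(datapoints):
    """Return the Average of the most recent datapoint, or None if there are none."""
    if not datapoints:
        return None
    # Datapoints arrive in no guaranteed order, so sort by timestamp first.
    ordered = sorted(datapoints, key=lambda point: point["Timestamp"])
    return ordered[-1]["Average"]


# Usage with boto3 (needs credentials; `params` is a placeholder for the
# request arguments):
#   import boto3
#   response = boto3.client("cloudwatch").get_metric_statistics(**params)
#   print(latest_object_count(response["Datapoints"]))
```

Returning None for an empty list makes the "no data in this time range" case explicit instead of raising an error.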

The easiest way is to use the developer console. For example, if you are on Chrome, choose Developer Tools and you can see the following; you can either find and count, or do some math, like 280 - 279 + 1 = 2

...

-

Could you please add some more detail on how you have used the developer tools to figure out the total number of objects in a bucket? – Jugal Panchal Jun 19 '20 at 20:56