I am trying to use deferred shading to implement SSAO, and I am having trouble accessing my textures in the deferred fragment shader. The code is in C++/Qt5 and uses Coin3D to generate the rest of the UI (but this shouldn't really matter here).

The fragment shader of the deferred pass is:

    #version 150 compatibility

    uniform sampler2D color;
    uniform sampler2D position;
    uniform sampler2D normal;

    uniform vec3 dim;
    uniform vec3 camPos;
    uniform vec3 camDir;

    void main()
    {
        // screen position
        vec2 t = gl_TexCoord[0].st;

        // the color
        vec4 c = texture2D(color, t);

        gl_FragColor = c + vec4(1.0, t.x, t.y, 1.0);
    }

The code for running the deferred pass is:

    _geometryBuffer.Unbind();

    // push state
    {
        glMatrixMode(GL_MODELVIEW);
        glPushMatrix();
        glLoadIdentity();

        glMatrixMode(GL_PROJECTION);
        glPushMatrix();
        glLoadIdentity();

        glPushAttrib(GL_DEPTH_BUFFER_BIT |
                     GL_COLOR_BUFFER_BIT |
                     GL_LIGHTING_BIT |
                     GL_SCISSOR_BIT |
                     GL_POLYGON_BIT |
                     GL_CURRENT_BIT);

        glDisable(GL_DEPTH_TEST);
        glDisable(GL_ALPHA_TEST);
        glDisable(GL_LIGHTING);
        glDisable(GL_COLOR_MATERIAL);
        glDisable(GL_SCISSOR_TEST);
        glDisable(GL_CULL_FACE);
    }

    // bind shader
    // /!\ IMPORTANT to do before specifying locations
    _deferredShader->bind();
    _CheckGLErrors("deferred");

    // specify positions
    _deferredShader->setUniformValue("camPos", ...);
    _deferredShader->setUniformValue("camDir", ...);

    _geometryBuffer.Bind(GBuffer::TEXTURE_TYPE_NORMAL, 2);
    _deferredShader->setUniformValue("normal", GLint(2));

    _geometryBuffer.Bind(GBuffer::TEXTURE_TYPE_POSITION, 1);
    _deferredShader->setUniformValue("position", GLint(1));

    _geometryBuffer.Bind(GBuffer::TEXTURE_TYPE_DIFFUSE, 0);
    _deferredShader->setUniformValue("color", GLint(0));

    _CheckGLErrors("bind");

    // draw screen quad
    {
        glBegin(GL_QUADS);

        glTexCoord2f(0, 0);
        glColor3f(0, 0, 0);
        glVertex2f(-1, -1);

        glTexCoord2f(1, 0);
        glColor3f(0, 0, 0);
        glVertex2f( 1, -1);

        glTexCoord2f(1, 1);
        glColor3f(0, 0, 0);
        glVertex2f( 1, 1);

        glTexCoord2f(0, 1);
        glColor3f(0, 0, 0);
        glVertex2f(-1, 1);

        glEnd();
    }

    _deferredShader->release();

    // for debug
    _geometryBuffer.Unbind(2);
    _geometryBuffer.Unbind(1);
    _geometryBuffer.Unbind(0);

    _geometryBuffer.DeferredPassBegin();
    _geometryBuffer.DeferredPassDebug();

    // pop state
    {
        glPopAttrib();

        glMatrixMode(GL_PROJECTION);
        glPopMatrix();

        glMatrixMode(GL_MODELVIEW);
        glPopMatrix();
    }

I know that the textures are filled correctly during geometry buffer creation, because I can dump them to files and get the expected result.

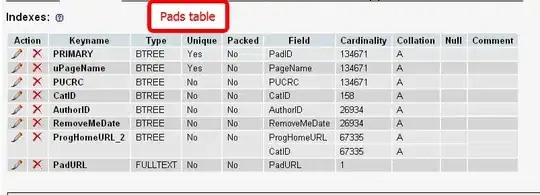

The deferred pass doesn't work. The shader compiled correctly and I get the following result on screen:

The last part of my code (DeferredPassBegin/Debug) draws the FBO to the screen (as shown in the screenshot) as proof that the GBuffer is correct.

The current result suggests that the textures are not correctly bound to their respective uniforms, but I know that the content is valid, as I dumped the textures to files and got the same results as shown above.

My binding functions in GBuffer are:

    void GBuffer::Unbind()
    {
        glBindFramebuffer(GL_FRAMEBUFFER, 0);
        glBindFramebuffer(GL_DRAW_FRAMEBUFFER, 0);
        glBindFramebuffer(GL_READ_FRAMEBUFFER, 0);
    }

    void GBuffer::Bind(TextureType type, uint32_t idx)
    {
        glActiveTexture(GL_TEXTURE0 + idx);
        glEnable(GL_TEXTURE_2D);
        glBindTexture(GL_TEXTURE_2D, _textures[static_cast<uint32_t>(type)]);
    }

    void GBuffer::Unbind(uint32_t idx)
    {
        glActiveTexture(GL_TEXTURE0 + idx);
        glDisable(GL_TEXTURE_2D);
        glBindTexture(GL_TEXTURE_2D, 0);
    }

Finally, the textures are 512×512, and I created them in my GBuffer with:

    WindowWidth = WindowHeight = 512;

    // Create the FBO
    glGenFramebuffers(1, &_fbo);
    glBindFramebuffer(GL_FRAMEBUFFER, _fbo);

    const uint32_t NUM = static_cast<uint32_t>(NUM_TEXTURES);

    // Create the gbuffer textures
    glGenTextures(NUM, _textures);
    glGenTextures(1, &_depthTexture);

    for (unsigned int i = 0 ; i < NUM; i++) {
        glBindTexture(GL_TEXTURE_2D, _textures[i]);
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA32F, WindowWidth, WindowHeight, 0, GL_RGBA, GL_FLOAT, NULL);
        glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0 + i + _firstIndex, GL_TEXTURE_2D, _textures[i], 0);
    }

    // depth
    glBindTexture(GL_TEXTURE_2D, _depthTexture);
    glTexImage2D(GL_TEXTURE_2D, 0, GL_DEPTH_COMPONENT32F, WindowWidth, WindowHeight, 0, GL_DEPTH_COMPONENT, GL_FLOAT, NULL);
    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D, _depthTexture, 0);

    GLenum buffers[NUM];
    for(uint32_t i = 0; i < NUM; ++i){
        buffers[i] = GLenum(GL_COLOR_ATTACHMENT0 + i + _firstIndex);
    }
    glDrawBuffers(NUM, buffers);

    GLenum status = glCheckFramebufferStatus(GL_FRAMEBUFFER);
    if (status != GL_FRAMEBUFFER_COMPLETE) {
        printf("FB error, status: 0x%x\n", status);
        return _valid = false;
    }

    // unbind textures
    glBindTexture(GL_TEXTURE_2D, 0);

    // restore default FBO
    glBindFramebuffer(GL_FRAMEBUFFER, 0);

How can I debug further at this stage? I know that the texture data is valid, but I can't seem to bind it to the shader correctly (even though I have other shaders that use textures loaded from files and work fine).

--- Edit 1 ---

As asked, here is the code for DeferredPassBegin/Debug (mostly taken from this tutorial):

    void GBuffer::DeferredPassBegin() {
        glBindFramebuffer(GL_FRAMEBUFFER, 0);
        glBindFramebuffer(GL_READ_FRAMEBUFFER, _fbo);
    }

    void GBuffer::DeferredPassDebug() {
        GLsizei HalfWidth = GLsizei(_texWidth / 2.0f);
        GLsizei HalfHeight = GLsizei(_texHeight / 2.0f);

        SetReadBuffer(TEXTURE_TYPE_POSITION);
        glBlitFramebuffer(0, 0, _texWidth, _texHeight,
                          0, 0, HalfWidth, HalfHeight, GL_COLOR_BUFFER_BIT, GL_LINEAR);

        SetReadBuffer(TEXTURE_TYPE_DIFFUSE);
        glBlitFramebuffer(0, 0, _texWidth, _texHeight,
                          0, HalfHeight, HalfWidth, _texHeight, GL_COLOR_BUFFER_BIT, GL_LINEAR);

        SetReadBuffer(TEXTURE_TYPE_NORMAL);
        glBlitFramebuffer(0, 0, _texWidth, _texHeight,
                          HalfWidth, HalfHeight, _texWidth, _texHeight, GL_COLOR_BUFFER_BIT, GL_LINEAR);
    }
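
For reference, the layout that DeferredPassDebug produces can be written out without a GL context. The sketch below only mirrors the destination rectangles passed to glBlitFramebuffer above; `Rect`, `Quadrant` and `debugQuadrant` are hypothetical names used for illustration and are not part of my GBuffer class. It assumes positive texture sizes, for which integer division gives the same halves as the `GLsizei(x / 2.0f)` casts.

```cpp
// Destination rectangle of a blit, in window pixels (origin at bottom-left,
// as in OpenGL window coordinates).
struct Rect {
    int x0, y0, x1, y1;
};

// Hypothetical labels for the three quadrants used by the debug pass.
enum class Quadrant {
    BottomLeft,  // TEXTURE_TYPE_POSITION
    TopLeft,     // TEXTURE_TYPE_DIFFUSE
    TopRight     // TEXTURE_TYPE_NORMAL
};

// Mirrors the dstX0/dstY0/dstX1/dstY1 arguments of the three
// glBlitFramebuffer calls in DeferredPassDebug.
Rect debugQuadrant(Quadrant q, int texWidth, int texHeight) {
    const int halfW = texWidth / 2;
    const int halfH = texHeight / 2;
    switch (q) {
        case Quadrant::BottomLeft: return {0, 0, halfW, halfH};
        case Quadrant::TopLeft:    return {0, halfH, halfW, texHeight};
        case Quadrant::TopRight:   return {halfW, halfH, texWidth, texHeight};
    }
    return {0, 0, 0, 0};  // unreachable for valid enum values
}
```

With the 512×512 textures used here, the position buffer lands in the bottom-left quadrant, the diffuse buffer in the top-left, and the normal buffer in the top-right; the bottom-right quadrant is left untouched by the debug pass.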