I am currently exporting my data (from a destination table in BigQuery) to a bucket in GCS. I am doing this programmatically using the BigQuery API.

There is a constraint when exporting data from BigQuery to GCS: a single exported file cannot be larger than 1 GB.

- Since the data in my destination table is more than 1 GB, I split the export into multiple files.

- The number of parts naturally depends on how much data is in the destination table.
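
To make the splitting concrete: with a single `*` in the destination URI, BigQuery numbers the output files with a zero-padded 12-digit sequence starting at 0. A minimal sketch of that naming follows. The helper `expected_shard_uris` and the bucket path are hypothetical, for illustration only; the real shard count is chosen by BigQuery, not by the caller.

```python
def expected_shard_uris(uri_pattern, shard_count):
    """Expand a single-wildcard URI into the names a sharded export would use.

    Hypothetical helper: it only mirrors the naming scheme, it does not
    call BigQuery or decide how many shards there are.
    """
    if uri_pattern.count('*') != 1:
        raise ValueError('expected exactly one "*" in the URI pattern')
    # Each shard replaces "*" with a 12-digit, zero-padded index.
    return [uri_pattern.replace('*', '%012d' % i) for i in range(shard_count)]


# Assumed example path; not the real bucket.
uris = expected_shard_uris('gs://my-bucket/20140101/20140101-*.gz', 3)
for uri in uris:
    print(uri)
```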

Here is the code snippet for the function exportDataToGCS() where this is happening:

    http = authorize()
    bigquery_service = build('bigquery', 'v2', http=http)
    query_request = bigquery_service.jobs()

    DESTINATION_PATH = constants.GCS_BUCKET_PATH + canonicalDate + '/'

    query_data = {
        'projectId': 'ga-cnqr',
        'configuration': {
            'extract': {
                'sourceTable': {
                    'projectId': constants.PROJECT_ID,
                    'datasetId': constants.DEST_TABLES_DATASET_ID,
                    'tableId': canonicalDate,
                },
                'destinationUris': [DESTINATION_PATH + canonicalDate + '-*.gz'],
                'destinationFormat': 'CSV',
                'printHeader': 'false',
                'compression': 'GZIP'
            }
        }
    }

    query_response = query_request.insert(projectId=constants.PROJECT_NUMBER,
                                          body=query_data).execute()

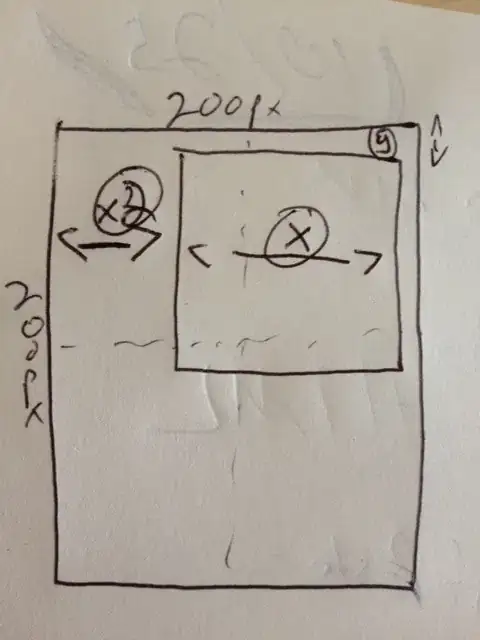

After this function is executed, in my GCS bucket, my files show up in the following manner:

However, I am curious whether there can be a scenario in which the export was supposed to be split into 10 parts, but only 3 parts made it to the bucket because the above function failed.

That is, could there be a partial export?

Could something like a network drop, or the process running the function being killed, lead to this? Is the export a blocking call, or is it asynchronous?
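
One way I could detect a partial export after the fact is to compare what the finished job reports against what is actually in the bucket. The sketch below assumes the extract job resource exposes `statistics.extract.destinationUriFileCounts` (one count per destination URI, serialized as strings) and that `object_names` comes from listing the bucket prefix. `missing_shards` and the sample data are hypothetical.

```python
import re


def missing_shards(job, object_names, prefix, suffix='.gz'):
    """Return shard indices the job reports writing but the listing lacks.

    Assumes a single destination URI, so only the first file count is used.
    """
    counts = (job.get('statistics', {})
                 .get('extract', {})
                 .get('destinationUriFileCounts', []))
    expected = int(counts[0]) if counts else 0

    # Shard names look like <prefix><12 digits><suffix>.
    pattern = re.compile(re.escape(prefix) + r'(\d{12})' + re.escape(suffix) + r'$')
    found = set()
    for name in object_names:
        match = pattern.search(name)
        if match:
            found.add(int(match.group(1)))
    return sorted(set(range(expected)) - found)


# Fabricated job resource and listing, for illustration only.
sample_job = {
    'status': {'state': 'DONE'},
    'statistics': {'extract': {'destinationUriFileCounts': ['3']}},
}
sample_names = [
    '20140101/20140101-000000000000.gz',
    '20140101/20140101-000000000002.gz',
]
print(missing_shards(sample_job, sample_names, '20140101-'))
```

An empty list would mean every shard the job claims to have written is present in the listing.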

Thanks in advance.

Update 1: Status parameter in query response

This is how I am checking for the DONE status.

    while True:
        status = query_request.get(projectId=constants.PROJECT_NUMBER,
                                   jobId=query_response['jobReference']['jobId']).execute()
        if 'DONE' == status['status']['state']:
            logging.info("Finished exporting for the date : " + stringCanonicalDate)
            return
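
A slightly more defensive variant of this loop, as a sketch rather than a definitive implementation: it sleeps between polls, gives up after a timeout, and treats `DONE` as success only when the job status carries no `errorResult`, since `DONE` by itself only says the job stopped running. The names `wait_for_job` and `fetch_job` are hypothetical; in my case `fetch_job` would wrap the `query_request.get(...).execute()` call shown above.

```python
import time


def wait_for_job(fetch_job, poll_seconds=5.0, timeout_seconds=3600.0,
                 sleep=time.sleep):
    """Poll until the job is DONE; raise if it failed or timed out.

    fetch_job is a zero-argument callable returning the job resource dict.
    sleep is injectable so the loop can be exercised without real waiting.
    """
    waited = 0.0
    while True:
        job = fetch_job()
        status = job['status']
        if status['state'] == 'DONE':
            # DONE covers both success and failure; check for an error.
            if 'errorResult' in status:
                raise RuntimeError(
                    'export failed: %s' % status['errorResult'].get('message'))
            return job
        if waited >= timeout_seconds:
            raise RuntimeError('timed out waiting for export job')
        sleep(poll_seconds)
        waited += poll_seconds


# Dry run with canned responses instead of real API calls.
canned = iter([{'status': {'state': 'RUNNING'}},
               {'status': {'state': 'DONE'}}])
finished = wait_for_job(lambda: next(canned), sleep=lambda seconds: None)
print(finished['status']['state'])
```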