I need to do computer visions tasks in order to detect watter bottles or soda cans. I will obtain 'frontal' images of bottles, soda cans or any other random objects (one by one) and my algorithm should determine whether it's a bottle, a can or any of them.

Some details about object detecting scenario:

- As mentioned, I will test one single object per image/video frame.

- Not all watter bottles are the same. There could be color in plastic, lid or label variation. Maybe some could not get label or lid.

- Same about variation goes for soda cans. No wrinkled soda cans are gonna be tested though.

- There could be small size variation between objects.

- I could have a green (or any custom color) background.

- I will do any needed filters on image.

- This will be run on a Raspberry Pi.

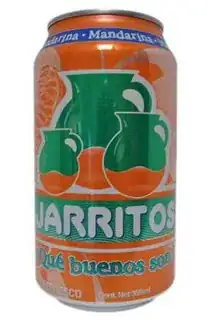

Just in case, an example of each:

I've tested a couple times OpenCV face detection algorithms and I know it works pretty good but I'd need to obtain an special Haar Cascades features XML file for detecting each custom object on this approach.

So, the distinct alternatives I have in mind are:

- Creating a custom Haar Classifier.

- Considering shapes.

- Considering outlines.

I'd like to get a simple algorithm and I think creating a custom Haar classifier could be even not needed. What would you suggest?

Update

I strongly considered the shape/aspect ratio approach.

However I guess I'm facing some issues as bottles come in distinct sizes or even shapes each. But this made me think or set following considerations:

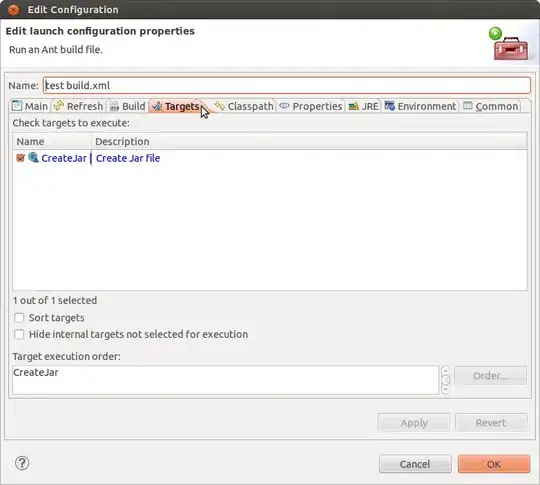

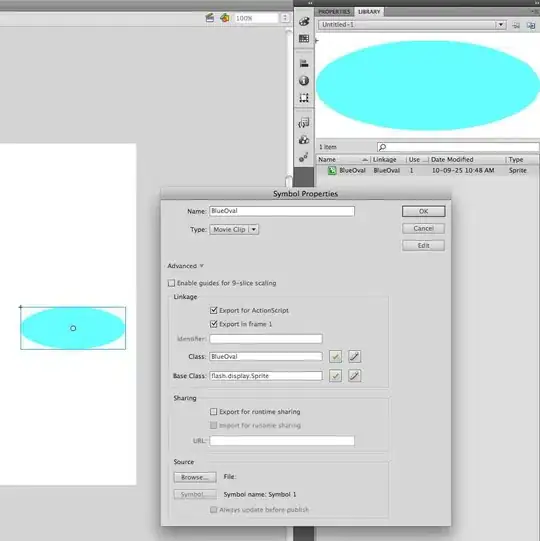

- I'm applying a threshold with THRESH_BINARY method. (Thanks to the answers).

- I will use a white background on detection.

- Soda cans are all same size.

- So, a bounding box for soda cans with high accuracy might distinguish a can.

What I've achieved:

Threshold really helped me, I could notice that on white background tests I would obtain for cans:

And this is what it's obtained for bottles:

So, darker areas left dominancy is noticeable. There are some cases in cans where this might turn into false negatives. And for bottles, light and angle may lead to not consistent results but I really really think this could be a shorter approach.

So, I'm quite confused now how I should evaluate that darkness dominancy, I've read that findContours leads to it but I'm quite lost on how to seize such function. For example, in case of soda cans, it may find several contours, so I get lost on what to evaluate.

Note: I'm open to test any other algorithms or libraries distinct to Open CV.