I'm trying to get the company name, sector, and industry for stocks. I download the HTML for 'https://finance.yahoo.com/q/in?s={}+Industry'.format(sign), and then attempt to parse it with .xpath() from lxml.html.

To get the XPath for the data I'm trying to scrape, I go to the site in Chrome, right-click on the item, click Inspect Element, right-click on the highlighted area, and click Copy XPath. This has always worked for me in the past.

This problem can be reproduced with the following code (I'm using Apple as an example):

    import requests
    from lxml import html

    page_p = 'https://finance.yahoo.com/q/in?s=AAPL+Industry'
    name_p = '//*[@id="yfi_rt_quote_summary"]/div[1]/div/h2/text()'
    sect_p = '//*[@id="yfncsumtab"]/tbody/tr[2]/td[1]/table[2]/tbody/tr/td/table/tbody/tr[1]/td/a/text()'
    indu_p = '//*[@id="yfncsumtab"]/tbody/tr[2]/td[1]/table[2]/tbody/tr/td/table/tbody/tr[2]/td/a/text()'

    page = requests.get(page_p)
    tree = html.fromstring(page.text)

    name = tree.xpath(name_p)
    sect = tree.xpath(sect_p)
    indu = tree.xpath(indu_p)

    print('Name: {}\nSector: {}\nIndustry: {}'.format(name, sect, indu))

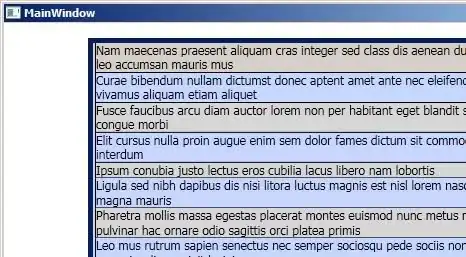

Which gives this output:

    Name: ['Apple Inc. (AAPL)']
    Sector: []
    Industry: []

The download itself is clearly working, since name is retrieved, but the other two come back empty. If I replace their paths with //tr[1]/td/a/text() and //tr[2]/td/a/text(), respectively, it returns this:

    Name: ['Apple Inc. (AAPL)']
    Sector: ['Consumer Goods', 'Industry Summary', 'Company List', 'Appliances', 'Recreational Goods, Other']
    Industry: ['Electronic Equipment', 'Apple Inc.', 'AAPL', 'News', 'Industry Calendar', 'Home Furnishings & Fixtures', 'Sporting Goods']

Obviously I could just slice out the 1st item in each list to get the data I need.
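For reference, this is roughly what that workaround would look like. It is only a sketch: first_or_none is a hypothetical helper name of my own, and the lists are hard-coded copies of the output above rather than live results.

```python
def first_or_none(results):
    """Return the first XPath result, stripped of whitespace, or None if the list is empty."""
    return results[0].strip() if results else None


# Hard-coded copies of the lists returned by the shortened paths above.
sect_all = ['Consumer Goods', 'Industry Summary', 'Company List',
            'Appliances', 'Recreational Goods, Other']
indu_all = ['Electronic Equipment', 'Apple Inc.', 'AAPL', 'News',
            'Industry Calendar', 'Home Furnishings & Fixtures', 'Sporting Goods']

sect = first_or_none(sect_all)
indu = first_or_none(indu_all)

print('Sector: {}\nIndustry: {}'.format(sect, indu))
```

This works, but it depends on the value I want always being the first match on the page, which is why I would rather use the exact path.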

What I don't understand is that when I add tbody/ to the start (//tbody/tr[#]/td/a/text()) it fails again, even though the console in Chrome clearly shows both trs as being children of a tbody element.
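The same behaviour can be reproduced offline with a small hand-written table, so it does not seem to be specific to Yahoo. This is a minimal sketch: the markup below is made up for illustration (only the id is borrowed from the real page), and it assumes the HTML that requests receives contains no tbody tags in its source, which I have not confirmed for the Yahoo page itself.

```python
from lxml import html

# Hypothetical markup: a table written without any <tbody> tags in the source.
raw = """
<html><body>
<table id="yfncsumtab">
  <tr><td><a href="#">Consumer Goods</a></td></tr>
  <tr><td><a href="#">Electronic Equipment</a></td></tr>
</table>
</body></html>
"""

tree = html.fromstring(raw)

# Path with a tbody step, as a browser's Copy XPath would produce it.
with_tbody = tree.xpath('//*[@id="yfncsumtab"]/tbody/tr[1]/td/a/text()')

# The same path with the tbody step removed.
without_tbody = tree.xpath('//*[@id="yfncsumtab"]/tr[1]/td/a/text()')

# Number of tbody elements present in the tree that lxml built.
tbody_count = len(tree.xpath('//tbody'))

print(with_tbody)
print(without_tbody)
print(tbody_count)
```

Here the path containing tbody returns an empty list, the path without it returns the link text, and the tree lxml builds contains no tbody elements at all, even though Chrome shows one when I open the same markup in the inspector.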

Why does this happen?