I struggled for a while to get the tess-two OCR project working. Now that it works, it recognizes text well when the text is clear, including when there are multiple lines.

The whole point of this is that I need OCR to extract the credit card number when the user takes a photo of the card.

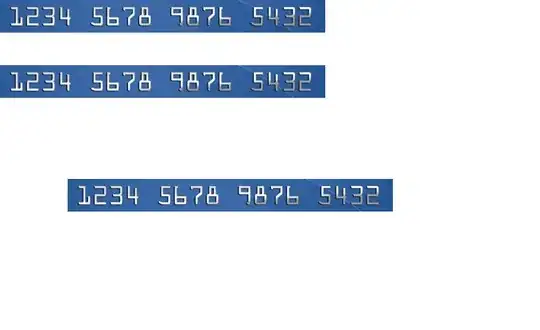

Here is an example of a credit card number:

This is just one example; I have tried many pictures. For instance, with this image I got the following text:

```
1238 5578 8875 5877
```
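Because the recognized text can also contain other digits (for example an expiry date, which is presumably why `/` is whitelisted), one possible post-processing step is to pull out a 4-4-4-4 digit group and drop the spaces. This is a minimal sketch, not something tess-two provides; it assumes the common 16-digit, four-group layout shown in the example, and `CardNumberParser` / `extractCandidate` are hypothetical names.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public final class CardNumberParser {
    // Hypothetical pattern: four groups of four digits with optional whitespace between them.
    // Assumes the 4-4-4-4 layout; other card number lengths/layouts are not handled.
    private static final Pattern GROUPED_16 =
            Pattern.compile("(\\d{4})\\s*(\\d{4})\\s*(\\d{4})\\s*(\\d{4})");

    private CardNumberParser() {}

    /** Returns the first 4-4-4-4 candidate found in the OCR text, with separators removed. */
    public static Optional<String> extractCandidate(String ocrText) {
        Matcher m = GROUPED_16.matcher(ocrText);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(m.group(1) + m.group(2) + m.group(3) + m.group(4));
    }

    public static void main(String[] args) {
        // Prints 1238557888755877 for the example output above
        System.out.println(extractCandidate("1238 5578 8875 5877").orElse("none"));
    }
}
```

An expiry date such as `12/25` does not match the pattern, so it is ignored.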

Here is the code I use for this:

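```java
import com.googlecode.tesseract.android.TessBaseAPI;

TessBaseAPI baseApi = new TessBaseAPI();
// The data path must be the parent directory of the "tessdata" folder
baseApi.init("/mnt/sdcard/tesseract-ocr", "eng");
baseApi.setImage(bm); // bm: Bitmap of the photographed card
// Mode 6: treat the image as a single uniform block of text
baseApi.setPageSegMode(TessBaseAPI.PageSegMode.PSM_SINGLE_BLOCK);
// Restrict recognition to digits and '/'
String whiteList = "/1234567890";
baseApi.setVariable(TessBaseAPI.VAR_CHAR_WHITELIST, whiteList);
String recognizedText = baseApi.getUTF8Text();
baseApi.end(); // release the native Tesseract resources
```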
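Even with a digit whitelist, OCR can misread a single digit. One common way to catch many such errors (a technique added here, not part of tess-two) is to run the candidate number through the Luhn mod-10 checksum that payment card numbers use, and ask the user to retake the photo if it fails. A minimal sketch, assuming the input is the digit string with spaces already removed; the class name `Luhn` is hypothetical.

```java
public final class Luhn {
    private Luhn() {}

    /** Returns true if the digit string passes the Luhn (mod 10) checksum. */
    public static boolean isValid(String digits) {
        if (digits == null || digits.isEmpty()) {
            return false;
        }
        int sum = 0;
        boolean doubleIt = false; // the rightmost digit is not doubled
        for (int i = digits.length() - 1; i >= 0; i--) {
            char c = digits.charAt(i);
            if (c < '0' || c > '9') {
                return false; // reject anything that is not a plain digit
            }
            int d = c - '0';
            if (doubleIt) {
                d *= 2;
                if (d > 9) {
                    d -= 9; // same as adding the two digits of the product
                }
            }
            sum += d;
            doubleIt = !doubleIt;
        }
        return sum % 10 == 0;
    }

    public static void main(String[] args) {
        // 4111111111111111 is a widely used test card number that passes the check
        System.out.println(isValid("4111111111111111"));
    }
}
```

For example, `Luhn.isValid("4111111111111111")` returns `true`, while changing the last digit to `2` makes it return `false`. The check catches every single-digit misread, but it cannot tell which digit is wrong.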

Any help would be much appreciated.

Thanks!