I am currently working on implementing a thresholding algorithm called Bradley Adaptive Thresholding.

I have been following mainly two links in order to work out how to implement this algorithm. I have also successfully been able to implement two other thresholding algorithms, mainly, Otsu's Method and Balanced Histogram Thresholding.

Here are the two links that I have been following in order to create the Bradley Adaptive Thresholding algorithm.

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.420.7883&rep=rep1&type=pdf

Bradley Adaptive Thresholding Github Example

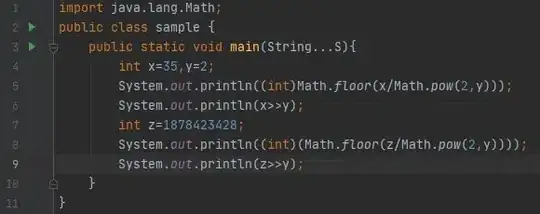

Here is the section of my source code in Python where I am running the algorithm and saving the image. I use the Python Imaging Library and no other tools to accomplish what I want to do.

def get_bradley_binary(inp_im):

w, h = inp_im.size

s, t = (w / 8, 0.15)

int_im = Image.new('L', (w, h))

out_im = Image.new('L', (w, h))

for i in range(w):

summ = 0

for j in range(h):

index = j * w + i

summ += get_pixel_offs(inp_im, index)

if i == 0:

set_pixel_offs(int_im, index, summ)

else:

temp = get_pixel_offs(int_im, index - 1) + summ

set_pixel_offs(int_im, index, temp)

for i in range(w):

for j in range(h):

index = j * w + i

x1,x2,y1,y2 = (i-s/2, i+s/2, j-s/2, j+s/2)

x1 = 0 if x1 < 0 else x1

x2 = w - 1 if x2 >= w else x2

y1 = 0 if y1 < 0 else y1

y2 = h - 1 if y2 >= h else y2

count = (x2 - x1) * (y2 - y1)

a1 = get_pixel_offs(int_im, y2 * w + x2)

a2 = get_pixel_offs(int_im, y1 * w + x2)

a3 = get_pixel_offs(int_im, y2 * w + x1)

a4 = get_pixel_offs(int_im, y1 * w + x1)

summ = a1 - a2 - a3 + a4

temp = get_pixel_offs(inp_im, index)

if temp * count < summ * (1.0 - t):

set_pixel_offs(out_im, index, 0)

else:

set_pixel_offs(out_im, index, 255)

return out_im

Here is the section of my code that illustrates the implementation of these set and get methods that you have not seen before.

def get_offs(image, x, y):

return y * image.size[0] + x

def get_xy(image, offs):

return (offs % image.size[0], int(offs / image.size[0]))

def set_pixel_xy(image, x, y, data):

image.load()[x, y] = data

def set_pixel_offs(image, offs, data):

x, y = get_xy(image, offs)

image.load()[x, y] = data

def get_pixel_offs(image, offs):

return image.getdata()[offs]

def get_pixel_xy(image, x, y):

return image.getdata()[get_offs(image, x, y)]

And finally, here are the input and output images. These are the same images that are used in the original research paper in the first link that I provided you. Note: The output image is almost completely white and it may be hard to see, but I provided it anyway in case anyone really wanted to have it for reference.