Note: I am placing this question in both the MATLAB and Python tags as I am the most proficient in these languages. However, I welcome solutions in any language.

Question Preamble

I have taken an image with a fisheye lens. This image consists of a pattern with a bunch of square objects. What I want to do with this image is detect the centroid of each of these squares, then use these points to perform an undistortion of the image - specifically, I am seeking the right distortion model parameters. It should be noted that not all of the squares need to be detected. As long as a good majority of them are, then that's totally fine.... but that isn't the point of this post. The parameter estimation algorithm I have already written, but the problem is that it requires points that appear collinear in the image.

The base question I want to ask is given these points, what is the best way to group them together so that each group consists of a horizontal line or vertical line?

Background to my problem

This isn't really important with regards to the question I'm asking, but if you'd like to know where I got my data from and to further understand the question I'm asking, please read. If you're not interested, then you can skip right to the Problem setup section below.

An example of an image I am dealing with is shown below:

It is a 960 x 960 image. The image was originally higher resolution, but I subsample the image to facilitate faster processing time. As you can see, there are a bunch of square patterns that are dispersed in the image. Also, the centroids I have calculated are with respect to the above subsampled image.

The pipeline I have set up to retrieve the centroids is the following:

- Perform a Canny Edge Detection

- Focus on a region of interest that minimizes false positives. This region of interest is basically the squares without any of the black tape that covers one of their sides.

- Find all distinct closed contours

For each distinct closed contour...

a. Perform a Harris Corner Detection

b. Determine if the result has 4 corner points

c. If this does, then this contour belonged to a square and find the centroid of this shape

d. If it doesn't, then skip this shape

Place all detected centroids from Step #4 into a matrix for further examination.

Here's an example result with the above image. Each detected square has the four points colour coded according to the location of where it is with respect to the square itself. For each centroid that I have detected, I write an ID right where that centroid is in the image itself.

With the above image, there are 37 detected squares.

Problem Setup

Suppose I have some image pixel points stored in a N x 3 matrix. The first two columns are the x (horizontal) and y (vertical) coordinates where in image coordinate space, the y coordinate is inverted, which means that positive y moves downwards. The third column is an ID associated with the point.

Here is some code written in MATLAB that takes these points, plots them onto a 2D grid and labels each point with the third column of the matrix. If you read the above background, these are the points that were detected by my algorithm outlined above.

data = [ 475. , 605.75, 1.;

571. , 586.5 , 2.;

233. , 558.5 , 3.;

669.5 , 562.75, 4.;

291.25, 546.25, 5.;

759. , 536.25, 6.;

362.5 , 531.5 , 7.;

448. , 513.5 , 8.;

834.5 , 510. , 9.;

897.25, 486. , 10.;

545.5 , 491.25, 11.;

214.5 , 481.25, 12.;

271.25, 463. , 13.;

646.5 , 466.75, 14.;

739. , 442.75, 15.;

340.5 , 441.5 , 16.;

817.75, 421.5 , 17.;

423.75, 417.75, 18.;

202.5 , 406. , 19.;

519.25, 392.25, 20.;

257.5 , 382. , 21.;

619.25, 368.5 , 22.;

148. , 359.75, 23.;

324.5 , 356. , 24.;

713. , 347.75, 25.;

195. , 335. , 26.;

793.5 , 332.5 , 27.;

403.75, 328. , 28.;

249.25, 308. , 29.;

495.5 , 300.75, 30.;

314. , 279. , 31.;

764.25, 249.5 , 32.;

389.5 , 249.5 , 33.;

475. , 221.5 , 34.;

565.75, 199. , 35.;

802.75, 173.75, 36.;

733. , 176.25, 37.];

figure; hold on;

axis ij;

scatter(data(:,1), data(:,2),40, 'r.');

text(data(:,1)+10, data(:,2)+10, num2str(data(:,3)));

Similarly in Python, using numpy and matplotlib, we have:

import numpy as np

import matplotlib.pyplot as plt

data = np.array([[ 475. , 605.75, 1. ],

[ 571. , 586.5 , 2. ],

[ 233. , 558.5 , 3. ],

[ 669.5 , 562.75, 4. ],

[ 291.25, 546.25, 5. ],

[ 759. , 536.25, 6. ],

[ 362.5 , 531.5 , 7. ],

[ 448. , 513.5 , 8. ],

[ 834.5 , 510. , 9. ],

[ 897.25, 486. , 10. ],

[ 545.5 , 491.25, 11. ],

[ 214.5 , 481.25, 12. ],

[ 271.25, 463. , 13. ],

[ 646.5 , 466.75, 14. ],

[ 739. , 442.75, 15. ],

[ 340.5 , 441.5 , 16. ],

[ 817.75, 421.5 , 17. ],

[ 423.75, 417.75, 18. ],

[ 202.5 , 406. , 19. ],

[ 519.25, 392.25, 20. ],

[ 257.5 , 382. , 21. ],

[ 619.25, 368.5 , 22. ],

[ 148. , 359.75, 23. ],

[ 324.5 , 356. , 24. ],

[ 713. , 347.75, 25. ],

[ 195. , 335. , 26. ],

[ 793.5 , 332.5 , 27. ],

[ 403.75, 328. , 28. ],

[ 249.25, 308. , 29. ],

[ 495.5 , 300.75, 30. ],

[ 314. , 279. , 31. ],

[ 764.25, 249.5 , 32. ],

[ 389.5 , 249.5 , 33. ],

[ 475. , 221.5 , 34. ],

[ 565.75, 199. , 35. ],

[ 802.75, 173.75, 36. ],

[ 733. , 176.25, 37. ]])

plt.figure()

plt.gca().invert_yaxis()

plt.plot(data[:,0], data[:,1], 'r.', markersize=14)

for idx in np.arange(data.shape[0]):

plt.text(data[idx,0]+10, data[idx,1]+10, str(int(data[idx,2])), size='large')

plt.show()

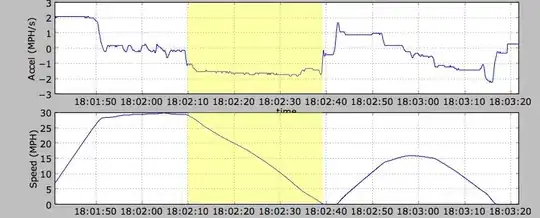

We get:

Back to the question

As you can see, these points are more or less in a grid pattern and you can see that we can form lines between the points. Specifically, you can see that there are lines that can be formed horizontally and vertically.

For example, if you reference the image in the background section of my problem, we can see that there are 5 groups of points that can be grouped in a horizontal manner. For example, points 23, 26, 29, 31, 33, 34, 35, 37 and 36 form one group. Points 19, 21, 24, 28, 30 and 32 form another group and so on and so forth. Similarly in a vertical sense, we can see that points 26, 19, 12 and 3 form one group, points 29, 21, 13 and 5 form another group and so on.

Question to ask

My question is this: What is a method that can successfully group points in horizontal groupings and vertical groupings separately, given that the points could be in any orientation?

Conditions

There must be at least three points per line. If there is anything less than that, then this does not qualify as a segment. Therefore, the points 36 and 10 don't qualify as a vertical line, and similarly the isolated point 23 shouldn't quality as a vertical line, but it is part of the first horizontal grouping.

The above calibration pattern can be in any orientation. However, for what I'm dealing with, the worst kind of orientation you can get is what you see above in the background section.

Expected Output

The output would be a pair of lists where the first list has elements where each element gives you a sequence of point IDs that form a horizontal line. Similarly, the second list has elements where each element gives you a sequence of point IDs that form a vertical line.

Therefore, the expected output for the horizontal sequences would look something like this:

MATLAB

horiz_list = {[23, 26, 29, 31, 33, 34, 35, 37, 36], [19, 21, 24, 28, 30, 32], ...};

vert_list = {[26, 19, 12, 3], [29, 21, 13, 5], ....};

Python

horiz_list = [[23, 26, 29, 31, 33, 34, 35, 37, 36], [19, 21, 24, 28, 30, 32], ....]

vert_list = [[26, 19, 12, 3], [29, 21, 13, 5], ...]

What I have tried

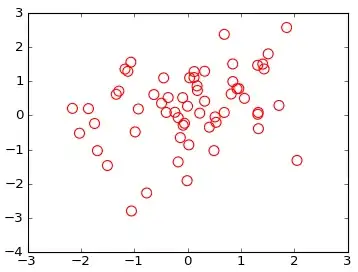

Algorithmically, what I have tried is to undo the rotation that is experienced at these points. I've performed Principal Components Analysis and I tried projecting the points with respect to the computed orthogonal basis vectors so that the points would more or less be on a straight rectangular grid.

Once I have that, it's just a simple matter of doing some scanline processing where you could group points based on a differential change on either the horizontal or vertical coordinates. You'd sort the coordinates by either the x or y values, then examine these sorted coordinates and look for a large change. Once you encounter this change, then you can group points in between the changes together to form your lines. Doing this with respect to each dimension would give you either the horizontal or vertical groupings.

With regards to PCA, here's what I did in MATLAB and Python:

MATLAB

%# Step #1 - Get just the data - no IDs

data_raw = data(:,1:2);

%# Decentralize mean

data_nomean = bsxfun(@minus, data_raw, mean(data_raw,1));

%# Step #2 - Determine covariance matrix

%# This already decentralizes the mean

cov_data = cov(data_raw);

%# Step #3 - Determine right singular vectors

[~,~,V] = svd(cov_data);

%# Step #4 - Transform data with respect to basis

F = V.'*data_nomean.';

%# Visualize both the original data points and transformed data

figure;

plot(F(1,:), F(2,:), 'b.', 'MarkerSize', 14);

axis ij;

hold on;

plot(data(:,1), data(:,2), 'r.', 'MarkerSize', 14);

Python

import numpy as np

import numpy.linalg as la

# Step #1 and Step #2 - Decentralize mean

centroids_raw = data[:,:2]

mean_data = np.mean(centroids_raw, axis=0)

# Transpose for covariance calculation

data_nomean = (centroids_raw - mean_data).T

# Step #3 - Determine covariance matrix

# Doesn't matter if you do this on the decentralized result

# or the normal result - cov subtracts the mean off anyway

cov_data = np.cov(data_nomean)

# Step #4 - Determine right singular vectors via SVD

# Note - This is already V^T, so there's no need to transpose

_,_,V = la.svd(cov_data)

# Step #5 - Transform data with respect to basis

data_transform = np.dot(V, data_nomean).T

plt.figure()

plt.gca().invert_yaxis()

plt.plot(data[:,0], data[:,1], 'b.', markersize=14)

plt.plot(data_transform[:,0], data_transform[:,1], 'r.', markersize=14)

plt.show()

The above code not only reprojects the data, but it also plots both the original points and the projected points together in a single figure. However, when I tried reprojecting my data, this is the plot I get:

The points in red are the original image coordinates while the points in blue are reprojected onto the basis vectors to try and remove the rotation. It still doesn't quite do the job. There is still some orientation with respect to the points so if I tried to do my scanline algorithm, points from the lines below for horizontal tracing or to the side for vertical tracing would be inadvertently grouped and this isn't correct.

Perhaps I'm overthinking the problem, but any insights you have regarding this would be greatly appreciated. If the answer is indeed superb, I would be inclined to award a high bounty as I've been stuck on this problem for quite some time.

I hope this question wasn't long winded. If you don't have an idea of how to solve this, then I thank you for your time in reading my question regardless.

Looking forward to any insights that you may have. Thanks very much!