I found this tutorial, http://codethink.no-ip.org/wordpress/archives/673#comment-118063, through the SO question "Screen capture video in iOS programmatically". It was a bit outdated for current iOS, so I updated it. I am very close to having it work, but assembling the UIImages into a video isn't working correctly.

Here is how I call the methods in viewDidLoad:

[captureView performSelector:@selector(startRecording) withObject:nil afterDelay:1.0];

[captureView performSelector:@selector(stopRecording) withObject:nil afterDelay:5.0];

captureView is an IBOutlet connected to my view.

The ScreenCaptureView class lives in ScreenCapture.h and ScreenCapture.m.

Here is the .h:

@protocol ScreenCaptureViewDelegate <NSObject>

- (void) recordingFinished:(NSString*)outputPathOrNil;

@end

@interface ScreenCaptureView : UIView {

//video writing

AVAssetWriter *videoWriter;

AVAssetWriterInput *videoWriterInput;

AVAssetWriterInputPixelBufferAdaptor *avAdaptor;

//recording state

BOOL _recording;

NSDate* startedAt;

void* bitmapData;

}

//for recording video

- (bool) startRecording;

- (void) stopRecording;

//for accessing the current screen and adjusting the capture rate, etc.

@property(retain) UIImage* currentScreen;

@property(assign) float frameRate;

@property(nonatomic, assign) id<ScreenCaptureViewDelegate> delegate;

@end

And here is the .m:

@interface ScreenCaptureView(Private)

- (void) writeVideoFrameAtTime:(CMTime)time;

@end

@implementation ScreenCaptureView

@synthesize currentScreen, frameRate, delegate;

- (void) initialize {

// Initialization code

self.clearsContextBeforeDrawing = YES;

self.currentScreen = nil;

self.frameRate = 10.0f; //10 frames per second

_recording = false;

videoWriter = nil;

videoWriterInput = nil;

avAdaptor = nil;

startedAt = nil;

bitmapData = NULL;

}

- (id) initWithCoder:(NSCoder *)aDecoder {

self = [super initWithCoder:aDecoder];

if (self) {

[self initialize];

}

return self;

}

- (id) init {

self = [super init];

if (self) {

[self initialize];

}

return self;

}

- (id)initWithFrame:(CGRect)frame {

self = [super initWithFrame:frame];

if (self) {

[self initialize];

}

return self;

}

- (CGContextRef) createBitmapContextOfSize:(CGSize) size {

CGContextRef context = NULL;

CGColorSpaceRef colorSpace;

int bitmapByteCount;

int bitmapBytesPerRow;

bitmapBytesPerRow = (size.width * 4);

bitmapByteCount = (bitmapBytesPerRow * size.height);

colorSpace = CGColorSpaceCreateDeviceRGB();

if (bitmapData != NULL) {

free(bitmapData);

}

bitmapData = malloc( bitmapByteCount );

if (bitmapData == NULL) {

fprintf (stderr, "Memory not allocated!");

return NULL;

}

context = CGBitmapContextCreate (bitmapData,

size.width,

size.height,

8, // bits per component

bitmapBytesPerRow,

colorSpace,

(CGBitmapInfo) kCGImageAlphaNoneSkipFirst);

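if (context == NULL) {

CGColorSpaceRelease( colorSpace );

free (bitmapData);

bitmapData = NULL; //avoid a second free in cleanupWriter

fprintf (stderr, "Context not created!");

return NULL;

}

//only configure the context once we know it was created

CGContextSetAllowsAntialiasing(context,NO);

CGColorSpaceRelease( colorSpace );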

return context;

}

static int frameCount = 0; //debugging

- (void) drawRect:(CGRect)rect {

NSDate* start = [NSDate date];

CGContextRef context = [self createBitmapContextOfSize:self.frame.size];

//not sure why this is necessary...image renders upside-down and mirrored

CGAffineTransform flipVertical = CGAffineTransformMake(1, 0, 0, -1, 0, self.frame.size.height);

CGContextConcatCTM(context, flipVertical);

[self.layer renderInContext:context];

CGImageRef cgImage = CGBitmapContextCreateImage(context);

UIImage* background = [UIImage imageWithCGImage: cgImage];

CGImageRelease(cgImage);

self.currentScreen = background;

//debugging

if (frameCount < 40) {

NSString* filename = [NSString stringWithFormat:@"Documents/frame_%d.png", frameCount];

NSString* pngPath = [NSHomeDirectory() stringByAppendingPathComponent:filename];

[UIImagePNGRepresentation(self.currentScreen) writeToFile: pngPath atomically: YES];

frameCount++;

}

//NOTE: to record a scrollview while it is scrolling you need to implement your UIScrollViewDelegate such that it calls

// 'setNeedsDisplay' on the ScreenCaptureView.

if (_recording) {

float millisElapsed = [[NSDate date] timeIntervalSinceDate:startedAt] * 1000.0;

[self writeVideoFrameAtTime:CMTimeMake((int)millisElapsed, 1000)];

}

float processingSeconds = [[NSDate date] timeIntervalSinceDate:start];

float delayRemaining = (1.0 / self.frameRate) - processingSeconds;

CGContextRelease(context);

//redraw at the specified framerate

[self performSelector:@selector(setNeedsDisplay) withObject:nil afterDelay:delayRemaining > 0.0 ? delayRemaining : 0.01];

}

- (void) cleanupWriter {

avAdaptor = nil;

videoWriterInput = nil;

videoWriter = nil;

startedAt = nil;

if (bitmapData != NULL) {

free(bitmapData);

bitmapData = NULL;

}

}

- (void)dealloc {

[self cleanupWriter];

}

- (NSURL*) tempFileURL {

NSString* outputPath = [[NSString alloc] initWithFormat:@"%@/%@", [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) objectAtIndex:0], @"output.mp4"];

NSURL* outputURL = [[NSURL alloc] initFileURLWithPath:outputPath];

NSFileManager* fileManager = [NSFileManager defaultManager];

if ([fileManager fileExistsAtPath:outputPath]) {

NSError* error = nil;

if ([fileManager removeItemAtPath:outputPath error:&error] == NO) {

NSLog(@"Could not delete old recording file at path: %@ (%@)", outputPath, error);

}

}

return outputURL;

}

-(BOOL) setUpWriter {

NSError* error = nil;

videoWriter = [[AVAssetWriter alloc] initWithURL:[self tempFileURL] fileType:AVFileTypeQuickTimeMovie error:&error];

NSParameterAssert(videoWriter);

//Configure video

NSDictionary* videoCompressionProps = [NSDictionary dictionaryWithObjectsAndKeys:

[NSNumber numberWithDouble:1024.0*1024.0], AVVideoAverageBitRateKey,

nil ];

NSDictionary* videoSettings = [NSDictionary dictionaryWithObjectsAndKeys:

AVVideoCodecH264, AVVideoCodecKey,

[NSNumber numberWithInt:self.frame.size.width], AVVideoWidthKey,

[NSNumber numberWithInt:self.frame.size.height], AVVideoHeightKey,

videoCompressionProps, AVVideoCompressionPropertiesKey,

nil];

videoWriterInput = [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeVideo outputSettings:videoSettings];

NSParameterAssert(videoWriterInput);

videoWriterInput.expectsMediaDataInRealTime = YES;

NSDictionary* bufferAttributes = [NSDictionary dictionaryWithObjectsAndKeys:

[NSNumber numberWithInt:kCVPixelFormatType_32ARGB], kCVPixelBufferPixelFormatTypeKey, nil];

avAdaptor = [AVAssetWriterInputPixelBufferAdaptor assetWriterInputPixelBufferAdaptorWithAssetWriterInput:videoWriterInput sourcePixelBufferAttributes:bufferAttributes];

//add input

[videoWriter addInput:videoWriterInput];

[videoWriter startWriting];

[videoWriter startSessionAtSourceTime:CMTimeMake(0, 1000)];

return YES;

}

- (void) completeRecordingSession {

[videoWriterInput markAsFinished];

// Wait for the video

int status = videoWriter.status;

while (status == AVAssetWriterStatusUnknown) {

NSLog(@"Waiting...");

[NSThread sleepForTimeInterval:0.5f];

status = videoWriter.status;

}

@synchronized(self) {

[videoWriter finishWritingWithCompletionHandler:^{

[self cleanupWriter];

BOOL success = YES;

id delegateObj = self.delegate;

NSString *outputPath = [[NSString alloc] initWithFormat:@"%@/%@", [NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) objectAtIndex:0], @"output.mp4"];

NSURL *outputURL = [[NSURL alloc] initFileURLWithPath:outputPath];

NSLog(@"Completed recording, file is stored at: %@", outputURL);

if ([delegateObj respondsToSelector:@selector(recordingFinished:)]) {

[delegateObj performSelectorOnMainThread:@selector(recordingFinished:) withObject:(success ? outputPath : nil) waitUntilDone:YES]; //recordingFinished: takes an NSString path

}

}];

}

}

- (bool) startRecording {

bool result = NO;

@synchronized(self) {

if (! _recording) {

result = [self setUpWriter];

startedAt = [NSDate date];

_recording = true;

}

}

return result;

}

- (void) stopRecording {

@synchronized(self) {

if (_recording) {

_recording = false;

[self completeRecordingSession];

}

}

}

-(void) writeVideoFrameAtTime:(CMTime)time {

if (![videoWriterInput isReadyForMoreMediaData]) {

NSLog(@"Not ready for video data");

}

else {

@synchronized (self) {

UIImage *newFrame = self.currentScreen;

CVPixelBufferRef pixelBuffer = NULL;

CGImageRef cgImage = CGImageCreateCopy([newFrame CGImage]);

CFDataRef image = CGDataProviderCopyData(CGImageGetDataProvider(cgImage));

int status = CVPixelBufferPoolCreatePixelBuffer(kCFAllocatorDefault, avAdaptor.pixelBufferPool, &pixelBuffer);

if(status != 0){

//could not get a buffer from the pool

NSLog(@"Error creating pixel buffer: status=%d", status);

}

// set image data into pixel buffer

CVPixelBufferLockBaseAddress( pixelBuffer, 0 );

uint8_t *destPixels = CVPixelBufferGetBaseAddress(pixelBuffer);

CFDataGetBytes(image, CFRangeMake(0, CFDataGetLength(image)), destPixels); //XXX: will work if the pixel buffer is contiguous and has the same bytesPerRow as the input data

if(status == 0){

BOOL success = [avAdaptor appendPixelBuffer:pixelBuffer withPresentationTime:time];

if (!success)

NSLog(@"Warning: Unable to write buffer to video");

}

//clean up

CVPixelBufferUnlockBaseAddress( pixelBuffer, 0 );

CVPixelBufferRelease( pixelBuffer );

CFRelease(image);

CGImageRelease(cgImage);

}

}

}

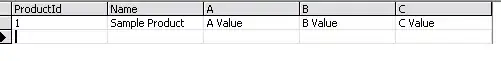

As you can see in the drawRect method, I save the captured frames as PNGs, and they look great. But when I try to make the video, it contains just a still image that looks like this, when the saved frames look like this.

Here is the output: it is a video, but it shows only this one frame, whereas the saved pictures look normal (not slanted and distorted).
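
One possibility I have not ruled out, going by the XXX comment in writeVideoFrameAtTime:, is that the pixel buffer from the pool uses a different bytes-per-row than the CGImage data. Pixel buffers may pad each row, and a single flat copy would then shift every row sideways, which could explain the slanted frame. Here is a minimal sketch of a stride-aware copy in plain C (which also compiles inside a .m file). The function copy_rows and its parameter names are hypothetical and not part of the tutorial:

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy `rows` rows of pixel data between two buffers whose row strides
   (bytes per row) may differ. Only the bytes that fit in both rows are
   copied; padding at the end of a destination row is left untouched. */
static void copy_rows(uint8_t *dst, size_t dst_bytes_per_row,
                      const uint8_t *src, size_t src_bytes_per_row,
                      size_t rows)
{
    size_t row_bytes = src_bytes_per_row < dst_bytes_per_row
                           ? src_bytes_per_row
                           : dst_bytes_per_row;

    for (size_t y = 0; y < rows; y++) {
        /* Each row starts at its own buffer's stride, not at a shared offset. */
        memcpy(dst + y * dst_bytes_per_row,
               src + y * src_bytes_per_row,
               row_bytes);
    }
}
```

In writeVideoFrameAtTime: the two strides would presumably come from CGImageGetBytesPerRow(cgImage) and CVPixelBufferGetBytesPerRow(pixelBuffer), with CFDataGetBytePtr(image) as the source, in place of the single CFDataGetBytes call. I have not verified that this fixes the output.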

My question is: what is going wrong when the video is being made?

Thanks for your help and your time. I know this is a long question.