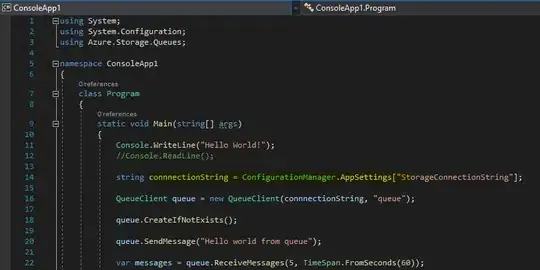

I am working on an application where file uploads happen often and the files can be quite large.

The files are uploaded to a Web API, which gets the Stream from the request and passes it on to my storage service, which in turn uploads it to Azure Blob Storage.

I need to make sure that:

- No temp files are written on the Web API instance

- The request stream is not fully read into memory before passing it on to the storage service (to prevent OutOfMemoryExceptions).

I've looked at this article, which describes how to disable input stream buffering, but because many file uploads from many different users happen simultaneously, it's important that it actually does what it says on the tin.
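
For context, here is a minimal sketch of what disabling input stream buffering usually looks like in a web-hosted (IIS) Web API. I am assuming the article takes the `IHostBufferPolicySelector` route; the class name `NoBufferPolicySelector` and the `WebApiConfig.Register` wiring are illustrative only, not copied from the article.

```csharp
using System.Web.Http;
using System.Web.Http.Hosting;
using System.Web.Http.WebHost;

// Illustrative name: a policy selector that never buffers the request body.
// Assumes web hosting (IIS); self-hosted Web API is configured differently.
public class NoBufferPolicySelector : WebHostBufferPolicySelector
{
    public override bool UseBufferedInputStream(object hostContext)
    {
        // Returning false asks the host to expose the request body as a
        // forward-only stream instead of buffering it first.
        return false;
    }
}

public static class WebApiConfig
{
    public static void Register(HttpConfiguration config)
    {
        // Swap the default buffer policy for the non-buffering one.
        config.Services.Replace(
            typeof(IHostBufferPolicySelector),
            new NoBufferPolicySelector());
    }
}
```

Returning `false` unconditionally applies to every request; a real policy would probably inspect `hostContext` and only disable buffering for the upload route.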

This is what I have in my controller at the moment:

    if (this.Request.Content.IsMimeMultipartContent())
    {
        var provider = new MultipartMemoryStreamProvider();
        await this.Request.Content.ReadAsMultipartAsync(provider);

        var fileContent = provider.Contents.SingleOrDefault();
        if (fileContent == null)
        {
            throw new ArgumentException("No filename.");
        }

        var fileName = fileContent.Headers.ContentDisposition.FileName.Replace("\"", string.Empty);

        // I need to make sure this stream is ready to be processed by
        // the Azure client lib, but not buffered fully, to prevent OoM.
        var stream = await fileContent.ReadAsStreamAsync();
    }

I don't know how I can reliably test this.

EDIT: I forgot to mention that uploading directly to Blob Storage (circumventing my API) won't work, as I am doing some size checking (e.g. can this user upload 500 MB? Has this user used up their quota?).
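
To illustrate the kind of size check I mean, here is a rough sketch of a wrapper that enforces a byte limit while the stream is being read, so nothing has to be buffered up front. `QuotaLimitedStream` is a hypothetical helper, not part of Web API or the Azure client library, and how the limit is looked up per user is left out.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: wraps a forward-only stream and throws as soon as
// more than maxBytes have been read from it.
public sealed class QuotaLimitedStream : Stream
{
    private readonly Stream inner;
    private readonly long maxBytes;
    private long bytesRead;

    public QuotaLimitedStream(Stream inner, long maxBytes)
    {
        if (inner == null) throw new ArgumentNullException("inner");
        if (maxBytes < 0) throw new ArgumentOutOfRangeException("maxBytes");
        this.inner = inner;
        this.maxBytes = maxBytes;
    }

    public long BytesRead { get { return bytesRead; } }

    // Adds the bytes just read to the running total and enforces the limit.
    private int Track(int read)
    {
        bytesRead += read;
        if (bytesRead > maxBytes)
            throw new InvalidOperationException("Upload exceeds the allowed size.");
        return read;
    }

    public override int Read(byte[] buffer, int offset, int count)
    {
        return Track(inner.Read(buffer, offset, count));
    }

    public override async Task<int> ReadAsync(
        byte[] buffer, int offset, int count, CancellationToken cancellationToken)
    {
        int read = await inner.ReadAsync(buffer, offset, count, cancellationToken)
                              .ConfigureAwait(false);
        return Track(read);
    }

    // Read-only, forward-only: everything else is unsupported.
    public override bool CanRead { get { return true; } }
    public override bool CanSeek { get { return false; } }
    public override bool CanWrite { get { return false; } }
    public override long Length { get { throw new NotSupportedException(); } }
    public override long Position
    {
        get { return bytesRead; }
        set { throw new NotSupportedException(); }
    }
    public override void Flush() { }
    public override long Seek(long offset, SeekOrigin origin) { throw new NotSupportedException(); }
    public override void SetLength(long value) { throw new NotSupportedException(); }
    public override void Write(byte[] buffer, int offset, int count) { throw new NotSupportedException(); }

    protected override void Dispose(bool disposing)
    {
        if (disposing) inner.Dispose();
        base.Dispose(disposing);
    }
}
```

The idea would be to hand `new QuotaLimitedStream(requestStream, remainingQuotaInBytes)` to the storage service instead of the raw stream. Because the limit is only hit partway through, the upload to Blob Storage would need to be aborted or cleaned up when the exception is thrown.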