I was wondering about the order in which bytes are sent to and received from a TCP socket.

I have implemented a socket, and it's up and working, so that's good. I also have something called "a message": a byte array that contains a string (serialized to bytes) and two integers (converted to bytes). It has to be that way because of the project specifications :/
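A minimal sketch of such a message in Python. The layout is an assumption, not taken from the project spec: a 4-byte length prefix, then the UTF-8 string, then two 32-bit signed integers, with all multi-byte fields in big-endian ("network") byte order. `pack_message` and `unpack_message` are hypothetical names used only for illustration.

```python
import struct

# Hypothetical layout (assumption): [u32 string length][UTF-8 bytes][i32 a][i32 b].
# The "!" prefix tells struct to use network (big-endian) byte order with no padding.
def pack_message(text, a, b):
    data = text.encode("utf-8")
    return struct.pack(f"!I{len(data)}sii", len(data), data, a, b)

def unpack_message(buf):
    (length,) = struct.unpack_from("!I", buf, 0)          # read the length prefix
    text = buf[4:4 + length].decode("utf-8")               # then the string bytes
    a, b = struct.unpack_from("!ii", buf, 4 + length)      # then the two integers
    return text, a, b

msg = pack_message("hi", 1, 258)
print(msg.hex())  # 0000000268690000000100000102
```

Fixing the integer byte order explicitly matters because "which byte of an integer comes first" (endianness) is decided when you convert the integer to bytes, not by the socket.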

Anyway, I was wondering how this works at the byte level. In a byte array, the bytes have an order (0, 1, 2, ..., Length-1), and they sit in memory in that order.

How are they sent? Is the last one sent first, or the first one? Receiving, I think, is quite easy: the first byte to arrive goes into the first free place in the buffer.
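For reference, TCP delivers a byte stream in order: the byte at index 0 of what you pass to the send call goes out first, and the receiver reads the bytes in that same order. What TCP does not preserve is message boundaries, so a single `recv` may return only part of the data. The sketch below uses Python's `socket.socketpair()` (a connected pair of local stream sockets, standing in here for a real TCP connection) and a hypothetical helper, `recv_exactly`, that keeps reading until it has the requested number of bytes.

```python
import socket

def recv_exactly(sock, n):
    # recv() may return fewer bytes than requested, so keep reading until we have n.
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed before all bytes arrived")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)

sender, receiver = socket.socketpair()
payload = bytes(range(10))   # index i holds value i
sender.sendall(payload)      # the byte at index 0 is sent first
received = recv_exactly(receiver, len(payload))
print(list(received))        # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
sender.close()
receiver.close()
```

Splitting the payload across several `sendall` calls on the same socket does not change the order either; the receiver simply sees the bytes concatenated in the order they were sent.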

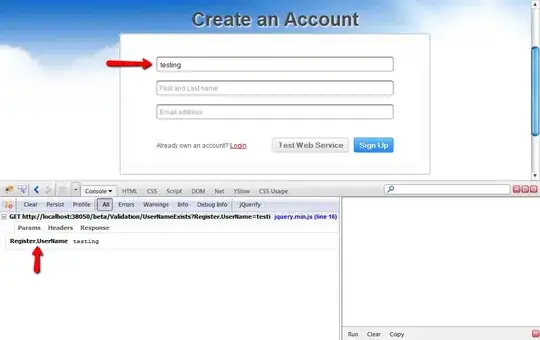

I think the little image I made shows nicely what I mean.