The best short answer I can give is to measure, measure, measure. `Stopwatch` is nice for getting a feeling for where time is spent, but eventually you'll end up sprinkling large swaths of your code with it, or you will have to find a better tool for the purpose. I would suggest getting a dedicated profiler; there are many available for C# and .NET.
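For a first rough look before reaching for a profiler, timing one piece of work with `System.Diagnostics.Stopwatch` might look like this. The summing workload is a hypothetical stand-in for the word-counting code, and `TimeMilliseconds` is an illustrative helper name, not an existing API.

```csharp
using System;
using System.Diagnostics;
using System.Linq;

// Illustrative helper: runs a piece of work once and returns elapsed milliseconds.
long TimeMilliseconds(Action work)
{
    var stopwatch = Stopwatch.StartNew();
    work();
    stopwatch.Stop();
    return stopwatch.ElapsedMilliseconds;
}

// Hypothetical workload standing in for the code you actually want to time.
long total = 0;
long elapsed = TimeMilliseconds(() =>
{
    total = Enumerable.Range(0, 1_000_000).Sum(i => (long)i);
});

Console.WriteLine($"Work took {elapsed} ms (result {total})");
```

Wrapping every suspect section like this is the sprinkling that quickly becomes unwieldy; a profiler gives a per-method breakdown without touching the code.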

I've managed to shave off about 43% of the total runtime in three steps.

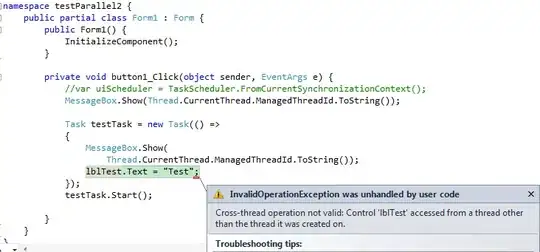

First I measured your code and got this:

This seems to indicate that there are two hotspots here that we can try to combat:

- String splitting (SplitInternal)

- Dictionary maintenance (FindEntry, Insert, get_Item)

The remaining time is spent reading the file, and I really doubt we can gain much by changing that part of the code. Another answer here suggests using specific buffer sizes; I tried this and could not measure any difference.

The first hotspot, string splitting, is fairly easy to fix, but it means replacing a very simple call to `string.Split` with a bit more code. I rewrote the loop that processes one line to this:

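```csharp
while ((line = streamReader.ReadLine()) != null)
{
    int lastPos = 0;
    // Scan one position past the end so the last word on the line is also emitted.
    for (int index = 0; index <= line.Length; index++)
    {
        if (index == line.Length || line[index] == ' ')
        {
            // Skip empty entries caused by leading, trailing or repeated spaces.
            if (lastPos < index)
            {
                string word = line.Substring(lastPos, index - lastPos);
                // process word here
            }
            lastPos = index + 1;
        }
    }
}
```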
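Pulled out into a standalone iterator, the same scanning loop can be checked against `string.Split`. The helper name `SplitOnSpaces` and the sample line are hypothetical. Because of the `lastPos < index` check, consecutive spaces produce no empty words, so the loop matches `Split` with `StringSplitOptions.RemoveEmptyEntries` rather than a plain `Split(' ')`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: yields the space-separated words of a line using the
// same index-scanning loop as above, skipping empty entries.
IEnumerable<string> SplitOnSpaces(string line)
{
    int lastPos = 0;
    // Run one step past the end so the final word is emitted too.
    for (int index = 0; index <= line.Length; index++)
    {
        if (index == line.Length || line[index] == ' ')
        {
            if (lastPos < index)
                yield return line.Substring(lastPos, index - lastPos);
            lastPos = index + 1;
        }
    }
}

string sample = "  the quick  brown fox ";
string[] manual = SplitOnSpaces(sample).ToArray();
string[] builtIn = sample.Split(new[] { ' ' }, StringSplitOptions.RemoveEmptyEntries);

Console.WriteLine(string.Join("|", manual)); // the|quick|brown|fox
```

The iterator itself allocates an enumerator, so in the hot loop the scan is better left inlined as above; the helper is just a convenient way to check the logic.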

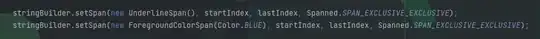

I then rewrote the processing of one word to this:

```csharp
int currentCount;
wordCount.TryGetValue(word, out currentCount);
wordCount[word] = currentCount + 1;
```

This relies on the following:

- `TryGetValue` is cheaper than checking whether the word exists and then retrieving its current count, since it does both in a single lookup.
- If `TryGetValue` fails to get the value (the key does not exist), it initializes the `currentCount` variable to its default value, which is 0. This means we don't need to check whether the word actually existed.
- We can add new words to the dictionary through the indexer (it either overwrites the existing value or adds a new key/value pair to the dictionary).
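The two behaviours this step relies on, `TryGetValue` producing 0 on a miss and the indexer setter adding or overwriting, can be seen in isolation in this small sketch; the sample words and the local `CountWord` helper are illustrative only.

```csharp
using System;
using System.Collections.Generic;

var wordCount = new Dictionary<string, int>();

// Illustrative helper: counts one word with a TryGetValue plus an indexer write.
void CountWord(string word)
{
    // On a miss, TryGetValue returns false and sets currentCount to default(int), i.e. 0.
    wordCount.TryGetValue(word, out int currentCount);
    // The indexer setter adds the key if it is missing, otherwise overwrites the value.
    wordCount[word] = currentCount + 1;
}

foreach (var word in new[] { "a", "b", "a", "c", "a" })
    CountWord(word);

bool found = wordCount.TryGetValue("missing", out int missingCount);
Console.WriteLine($"a={wordCount["a"]}, missing found={found}, count={missingCount}");
// a=3, missing found=False, count=0
```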

The final loop thus looks like this:

```csharp
while ((line = streamReader.ReadLine()) != null)
{
    int lastPos = 0;
    for (int index = 0; index <= line.Length; index++)
    {
        if (index == line.Length || line[index] == ' ')
        {
            if (lastPos < index)
            {
                string word = line.Substring(lastPos, index - lastPos);
                int currentCount;
                wordCount.TryGetValue(word, out currentCount);
                wordCount[word] = currentCount + 1;
            }
            lastPos = index + 1;
        }
    }
}
```

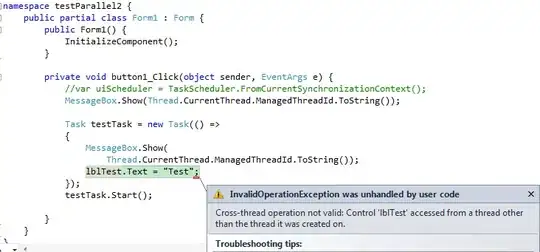

The new measurement shows this:

Details:

- We went from 6876 ms to 5013 ms, a reduction of about 27%.
- We lost the time spent in `SplitInternal`, `FindEntry` and `get_Item`.
- We gained time spent in `TryGetValue` and `Substring`.

Here are the difference details:

As you can see, we removed more time than we added, which resulted in a net improvement.

However, we can do better. We're doing two dictionary lookups per word here, each of which involves calculating the hash code of the word and comparing it to keys in the dictionary. The first lookup is part of `TryGetValue` and the second is part of `wordCount[word] = ...`.
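As an aside, if you can target .NET 6 or later, there is a different technique (not the one used in this answer) that avoids the second lookup while keeping plain `int` values: `CollectionsMarshal.GetValueRefOrAddDefault` returns a reference straight into the dictionary's storage. A minimal sketch, assuming .NET 6+; the `CountWord` helper and sample text are illustrative, and its performance on the files from the question has not been measured.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.InteropServices;

var wordCount = new Dictionary<string, int>();

// Requires .NET 6+. GetValueRefOrAddDefault does a single hash lookup: it returns a
// ref to the existing value, or inserts default(int) (0) and returns a ref to that.
void CountWord(string word)
{
    ref int count = ref CollectionsMarshal.GetValueRefOrAddDefault(wordCount, word, out _);
    count++; // writes directly into the dictionary's storage
}

foreach (var word in "to be or not to be".Split(' '))
    CountWord(word);

Console.WriteLine($"to={wordCount["to"]}, be={wordCount["be"]}, or={wordCount["or"]}");
```

The returned `ref` is only safe to use until the dictionary is modified again, so it should not be held across other inserts.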

We can remove the second dictionary lookup by storing a smarter value in the dictionary, at the cost of using more heap memory.

We can use Xanatos' trick of storing the count inside an object so that we can remove that second dictionary lookup:

```csharp
public class WordCount
{
    public int Count;
}

// ...

var wordCount = new Dictionary<string, WordCount>();

// ...

string word = line.Substring(lastPos, index - lastPos);
WordCount currentCount;
if (!wordCount.TryGetValue(word, out currentCount))
    wordCount[word] = currentCount = new WordCount();
currentCount.Count++;
```

For a word that is already in the dictionary, this needs only one lookup to retrieve its counter; adding the extra occurrence does not involve the dictionary at all. The method's result also changes: the dictionary now holds `WordCount` objects instead of plain `int` values.
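The trick works because `WordCount` is a class: the dictionary stores a reference, so incrementing through the reference returned by `TryGetValue` updates the stored counter. In this self-contained sketch, the framework's `StrongBox<int>` (a class with a single public `Value` field) stands in for `WordCount` so that no extra type is needed; the sample input is illustrative.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.CompilerServices;

// StrongBox<int> stands in for the WordCount class from the answer.
var wordCount = new Dictionary<string, StrongBox<int>>();

void CountWord(string word)
{
    // Known words: one lookup. New words: the failed TryGetValue plus the indexer write.
    if (!wordCount.TryGetValue(word, out StrongBox<int> currentCount))
        wordCount[word] = currentCount = new StrongBox<int>();
    // currentCount references the object stored in the dictionary, so this
    // increment is visible through the dictionary without another lookup.
    currentCount.Value++;
}

foreach (var word in "x y x x".Split(' '))
    CountWord(word);

Console.WriteLine($"x={wordCount["x"].Value}, y={wordCount["y"].Value}"); // x=3, y=1
```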

Net result: ~43% savings.

Final piece of code:

```csharp
using System.Collections.Generic;
using System.IO;
using System.Text;

public class WordCount
{
    public int Count;
}

// Place this method inside your own class; C# methods must live in a type.
public static IDictionary<string, WordCount> Parse(string path)
{
    var wordCount = new Dictionary<string, WordCount>();
    // 64 KB buffers for both the stream and the reader.
    using (var fileStream = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.None, 65536))
    using (var streamReader = new StreamReader(fileStream, Encoding.Default, false, 65536))
    {
        string line;
        while ((line = streamReader.ReadLine()) != null)
        {
            int lastPos = 0;
            for (int index = 0; index <= line.Length; index++)
            {
                if (index == line.Length || line[index] == ' ')
                {
                    if (lastPos < index)
                    {
                        string word = line.Substring(lastPos, index - lastPos);
                        WordCount currentCount;
                        if (!wordCount.TryGetValue(word, out currentCount))
                            wordCount[word] = currentCount = new WordCount();
                        currentCount.Count++;
                    }
                    lastPos = index + 1;
                }
            }
        }
    }
    return wordCount;
}
```