I am building a messaging application whose server is built on Netty 4.1 Beta3 and speaks the MQTT protocol.

This is my MqttServer.java class that sets up the Netty server and binds it to a specific port.

        EventLoopGroup bossPool = new NioEventLoopGroup();
        EventLoopGroup workerPool = new NioEventLoopGroup();
        try {
            ServerBootstrap boot = new ServerBootstrap();
            boot.group(bossPool, workerPool);
            boot.channel(NioServerSocketChannel.class);
            boot.childHandler(new MqttProxyChannel());
            boot.bind(port).sync().channel().closeFuture().sync();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            workerPool.shutdownGracefully();
            bossPool.shutdownGracefully();
        }
    }

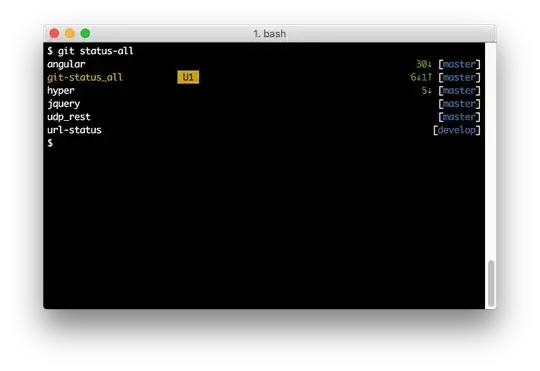

I load-tested the application on my Mac, which has the following configuration.

Netty's performance was exceptional. Looking at the jstack output while the code was running, I found that Netty NIO spawns about 19 threads, and none of them appeared to be stuck waiting on channels or anything else.

Then I ran the same code on a Linux machine.

This is a 2-core machine with 15 GB of RAM. The problem is that packets sent by my MQTT client take a long time to pass through the Netty pipeline. The jstack output also showed 5 Netty threads, all of them stuck like this:

    "nioEventLoopGroup-3-4" #112 prio=10 os_prio=0 tid=0x00007fb774008800 nid=0x2a0e runnable [0x00007fb768fec000]
       java.lang.Thread.State: RUNNABLE
        at sun.nio.ch.EPollArrayWrapper.epollWait(Native Method)
        at sun.nio.ch.EPollArrayWrapper.poll(EPollArrayWrapper.java:269)
        at sun.nio.ch.EPollSelectorImpl.doSelect(EPollSelectorImpl.java:79)
        at sun.nio.ch.SelectorImpl.lockAndDoSelect(SelectorImpl.java:86)
        - locked <0x00000006d0fdc898> (a io.netty.channel.nio.SelectedSelectionKeySet)
        - locked <0x00000006d100ae90> (a java.util.Collections$UnmodifiableSet)
        - locked <0x00000006d0fdc7f0> (a sun.nio.ch.EPollSelectorImpl)
        at sun.nio.ch.SelectorImpl.select(SelectorImpl.java:97)
        at io.netty.channel.nio.NioEventLoop.select(NioEventLoop.java:621)
        at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:309)
        at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:834)
        at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:137)
        at java.lang.Thread.run(Thread.java:745)
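For reference, the count of 5 Netty threads on the 2-core machine may simply reflect Netty's default sizing rather than a problem in itself. The sketch below uses only the JDK and assumes that a `NioEventLoopGroup` created without arguments defaults to twice the number of available processors (unless `io.netty.eventLoopThreads` is set), and that the boss group starts one thread per bound port. Both points are my understanding of Netty's behavior and I have not verified them against 4.1 Beta3; `EventLoopSizing` and its methods are hypothetical helper names, not part of Netty.

```java
// Sketch only: mirrors what I understand Netty's default sizing rule to be.
final class EventLoopSizing {

    // Assumed default worker count: max(1, 2 * cores) when no explicit
    // thread count is passed to the NioEventLoopGroup constructor.
    static int defaultWorkerThreads(int cores) {
        return Math.max(1, cores * 2);
    }

    // Upper bound on Netty threads visible in jstack: one boss thread per
    // bound port plus every worker thread (workers are started lazily).
    static int expectedNettyThreads(int cores, int boundPorts) {
        return boundPorts + defaultWorkerThreads(cores);
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("available processors: " + cores);
        System.out.println("expected Netty threads (1 bound port): "
                + expectedNettyThreads(cores, 1));
    }
}
```

Under these assumptions, 2 cores and one bound port give 1 + 4 = 5 threads, which matches what jstack shows on the Linux machine.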

Is this a performance issue related to epoll on Linux? If so, what changes should I make to the Netty configuration to handle it or to improve performance?

Edit

Java version on the local system:

    java version "1.8.0_40"
    Java(TM) SE Runtime Environment (build 1.8.0_40-b27)
    Java HotSpot(TM) 64-Bit Server VM (build 25.40-b25, mixed mode)

Java version on AWS:

    openjdk version "1.8.0_40-internal"
    OpenJDK Runtime Environment (build 1.8.0_40-internal-b09)
    OpenJDK 64-Bit Server VM (build 25.40-b13, mixed mode)