My application receives instructions from my client.

For each instruction, I want to execute code at the received timestamp plus 10 seconds.
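
To make the requirement concrete, here is a minimal sketch of the kind of scheduling I mean. It is not my production code: `DelayedRunner` and `ScheduleAfterTimestamp` are placeholder names, and I am assuming the received timestamp is available as a UTC `DateTime`.

```csharp
using System;
using System.Threading;

static class DelayedRunner
{
    // Hypothetical helper: runs `action` once, 10 seconds after `receivedUtc`.
    // Assumes `receivedUtc` is expressed in UTC.
    public static Timer ScheduleAfterTimestamp(DateTime receivedUtc, Action action)
    {
        DateTime dueUtc = receivedUtc.AddSeconds(10);
        TimeSpan delay = dueUtc - DateTime.UtcNow;

        // A negative due time is not valid for Timer, so clamp to zero
        // (fire as soon as possible) when the target time has already passed.
        if (delay < TimeSpan.Zero)
        {
            delay = TimeSpan.Zero;
        }

        // One-shot timer: an infinite period means it never repeats.
        return new Timer(_ => action(), null, delay, Timeout.InfiniteTimeSpan);
    }
}
```

The caller would need to keep a reference to the returned `Timer` until it fires, otherwise it may be garbage collected before the callback runs.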

To test the accuracy of my code I mocked up this simple console test:

    static DateTime d1 = DateTime.Now;
    static Timer _timer = null;

    static void Main(string[] args)
    {
        _timer = new Timer(Callback, null, 200, Timeout.Infinite);
        d1 = DateTime.Now;
        Console.ReadLine();
    }

    private static void Callback(Object state)
    {
        TimeSpan s = DateTime.Now - d1;
        Console.WriteLine("Done, took {0} secs.", s.Milliseconds);
        _timer.Change(200, Timeout.Infinite);
    }

When I run it, I expected it to display:

    Done, took 200 milsecs.
    Done, took 200 milsecs.
    Done, took 200 milsecs.
    Done, took 200 milsecs.
    Done, took 200 milsecs.
    Done, took 200 milsecs.

But it does not. Instead, the values printed for 'milsecs' look random.

NB: My actual code will not always use an interval of 200 milliseconds.

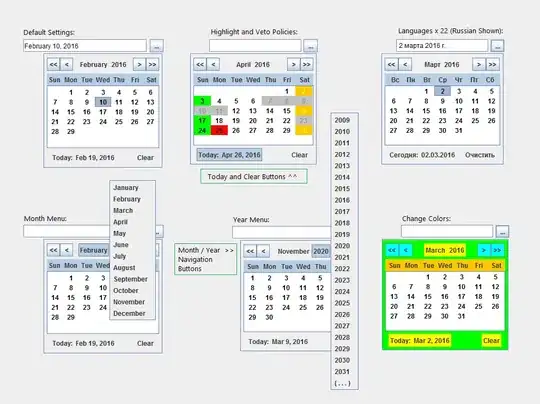

Example Screenshot: