Note: This is problem 4.3 from Cracking the Coding Interview, 5th Edition.

Problem: Given a sorted (increasing order) array, write an algorithm to create a binary search tree with minimal height.

Here is my algorithm, written in Java, to solve this problem:

    public static IntTreeNode createBST(int[] array) {
        return createBST(array, 0, array.length - 1);
    }

    private static IntTreeNode createBST(int[] array, int left, int right) {
        if (right >= left) {
            // Index of the middle element; its value becomes the root of this subtree.
            int middle = (left + right) / 2;
            IntTreeNode root = new IntTreeNode(array[middle]);
            // Recurse on the index ranges to the left and right of the middle.
            root.left = createBST(array, left, middle - 1);
            root.right = createBST(array, middle + 1, right);
            return root;
        } else {
            // Empty range: no node to create.
            return null;
        }
    }
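For anyone who wants to run this, here is a minimal self-contained sketch. The `IntTreeNode` class is not shown in the question, so the version below (an `int data` field plus `left` and `right` links) is an assumption, and `MinimalBstSketch` and `height` are hypothetical names added only for checking the result.

```java
// Assumed shape of IntTreeNode; the real class is not shown in the question.
class IntTreeNode {
    int data;
    IntTreeNode left;
    IntTreeNode right;

    IntTreeNode(int data) {
        this.data = data;
    }
}

// Hypothetical wrapper class for the sketch.
class MinimalBstSketch {
    static IntTreeNode createBST(int[] array) {
        return createBST(array, 0, array.length - 1);
    }

    private static IntTreeNode createBST(int[] array, int left, int right) {
        if (right < left) {
            return null; // empty index range, no node
        }
        int middle = (left + right) / 2; // an index into the array, not a value
        IntTreeNode root = new IntTreeNode(array[middle]);
        root.left = createBST(array, left, middle - 1);
        root.right = createBST(array, middle + 1, right);
        return root;
    }

    // Height measured as the number of nodes on the longest root-to-leaf path.
    static int height(IntTreeNode node) {
        if (node == null) {
            return 0;
        }
        return 1 + Math.max(height(node.left), height(node.right));
    }

    public static void main(String[] args) {
        IntTreeNode root = createBST(new int[] {1, 2, 3, 4, 5, 6, 7});
        System.out.println(root.data);    // 4
        System.out.println(height(root)); // 3
    }
}
```

With 7 sorted elements the tree is perfectly balanced, so the root holds the middle value and the height is 3 nodes.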

I checked this code against the author's and it's nearly identical.

However, I am having a hard time analyzing the time complexity of this algorithm.

I know this wouldn't run in O(log n) like binary search, because you're not doing the same amount of work at each level of recursion. E.g., at the first level there is 1 unit of work, at the 2nd level 2 units, at the 3rd level 4 units, and so on, all the way down to the log2(n)-th level with n units of work.

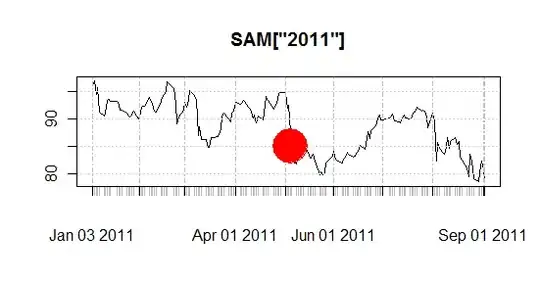
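As a rough worked version of the per-level count, assuming for simplicity that n is a power of two and that level i does exactly 2^i units of work (both are idealizations, not exact properties of the code):

```latex
\sum_{i=0}^{\log_2 n} 2^i \;=\; 2^{\log_2 n + 1} - 1 \;=\; 2n - 1
```

Under those assumptions the total over all levels is linear in n, even though the last level alone does n units.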

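So based on that, the number of steps this algorithm takes would be upper bounded by this mathematical expression:

$$n + \frac{n}{2} + \frac{n}{4} + \dots = n \sum_{i=0}^{\infty} \left(\frac{1}{2}\right)^{i}$$

which, after watching Infinite geometric series, I evaluated to

$$n \cdot \frac{1}{1 - \frac{1}{2}}$$

or 2n, which would be in O(n).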
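One way to sanity-check a linear bound is to count the recursive calls directly. The sketch below mirrors the recursion on index ranges without building any nodes; `CallCountSketch` and `countCalls` are hypothetical names introduced for illustration, and it assumes the middle is used as an index, as in the book's version.

```java
// Hypothetical helper class for counting recursive calls.
class CallCountSketch {
    // Returns the number of calls createBST would make on the index
    // range [left, right], including the calls that return null.
    static long countCalls(int left, int right) {
        if (right < left) {
            return 1; // the call that hits the empty range
        }
        int middle = (left + right) / 2;
        return 1 + countCalls(left, middle - 1) + countCalls(middle + 1, right);
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 10, 1000, 1_000_000}) {
            System.out.println(n + " elements -> " + countCalls(0, n - 1) + " calls");
        }
    }
}
```

Each of the n elements gets exactly one call that creates its node, and every null child accounts for one more call, so the count comes out to 2n + 1. Since each call does constant work besides recursing, that matches the O(n) estimate.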

Do you guys agree with my work here, and that this algorithm runs in O(n)? Or did I miss something, and it actually runs in O(n log n) or some other function class?