After reading the following paper https://people.freebsd.org/~lstewart/articles/cpumemory.pdf ("What every programmer should know about memory") I wanted to try one of the author's test, that is, measuring the effects of TLB on the final execution time.

I am working on a Samsung Galaxy S3 that embeds a Cortex-A9.

According to the documentation:

we have two micro TLBs for instruction and data cache in L1 (http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0388e/Chddiifa.html)

The main TLB is located in L2 (http://infocenter.arm.com/help/index.jsp?topic=/com.arm.doc.ddi0388e/Chddiifa.html)

Data micro TLB has 32 entries (instruction micro TLB has either 32 or 64 entries)

- L1' size == 32 Kbytes

- L1 cache line == 32 bytes

- L2' size == 1MB

I wrote a small program that allocates an array of structs with N entries. Each entry's size is == 32 bytes so it fits in a cache line. I perform several read access and I measure the execution time.

typedef struct {

int elmt; // sizeof(int) == 4 bytes

char padding[28]; // 4 + 28 = 32B == cache line size

}entry;

volatile entry ** entries = NULL;

//Allocate memory and init to 0

entries = calloc(NB_ENTRIES, sizeof(entry *));

if(entries == NULL) perror("calloc failed"); exit(1);

for(i = 0; i < NB_ENTRIES; i++)

{

entries[i] = mmap(NULL, 4096, PROT_READ | PROT_WRITE, MAP_PRIVATE | MAP_ANONYMOUS, 0, 0);

if(entries[i] == MAP_FAILED) perror("mmap failed"); exit(1);

}

entries[LAST_ELEMENT]->elmt = -1

//Randomly access and init with random values

n = -1;

i = 0;

while(++n < NB_ENTRIES -1)

{

//init with random value

entries[i]->elmt = rand() % NB_ENTRIES;

//loop till we reach the last element

while(entries[entries[i]->elmt]->elmt != -1)

{

entries[i]->elmt++;

if(entries[i]->elmt == NB_ENTRIES)

entries[i]->elmt = 0;

}

i = entries[i]->elmt;

}

gettimeofday(&tStart, NULL);

for(i = 0; i < NB_LOOPS; i++)

{

j = 0;

while(j != -1)

{

j = entries[j]->elmt

}

}

gettimeofday(&tEnd, NULL);

time = (tEnd.tv_sec - tStart.tv_sec);

time *= 1000000;

time += tEnd.tv_usec - tStart.tv_usec;

time *= 100000

time /= (NB_ENTRIES * NBLOOPS);

fprintf(stdout, "%d %3lld.%02lld\n", NB_ENTRIES, time / 100, time % 100);

I have an outer loop that makes NB_ENTRIES vary from 4 to 1024.

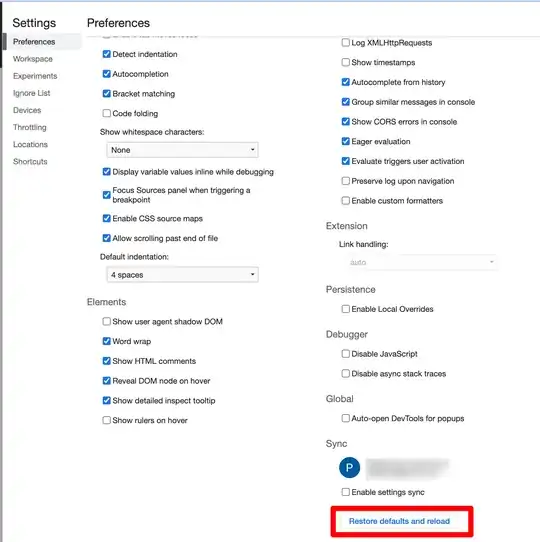

As one can see in the figure below, while NB_ENTRIES == 256 entries, executing time is longer.

When NB_ENTRIES == 404 I get an "out of memory" (why? micro TLBs exceeded? main TLBs exceeded? Page Tables exceeded? virtual memory for the process exceeded?)

Can someone explain me please what is really going on from 4 to 256 entries, then from 257 to 404 entries?

EDIT 1

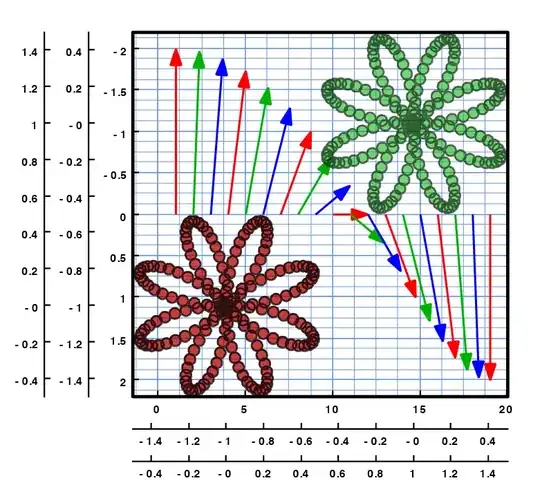

As it has been suggested, I ran membench (src code) and below the results:

EDIT 2

In the following paper (page 3) they ran (I suppose) the same benchmark. But the different steps are clearly visible from their plots, which is not my case.

Right now, according to their results and explanations, I only can identify few things.

- plots confirm that L1 cache line size is 32 bytes because as they said

"once the array size exceeds the size of the data cache (32KB), the reads begin to generate misses [...] an inflection point occurs when every read generates a misse".

In my case the very first inflection point appears when stride == 32 Bytes. - The graph shows that we have a second-level (L2) cache. I think it is depicted by the yellow line (1MB == L2 size) - Therefore the two last plots above the latter probably reflects the latency while accessing Main Memory (+ TLB?).

However from this benchmark, I am not able to identify:

- the cache associativity. Normally D-Cache and I-Cache are 4-way associative (Cortex-A9 TRM).

- The TLB effects. As they said,

in most systems, a secondary increase in latency is indicative of the TLB, which caches a limited number of virtual to physical translations.[..] The absence of a rise in latency attributable to TLB indicates that [...]"

large page sizes have probably been used/implemented.

EDIT 3

This link explains the TLB effects from another membench graph. One can actually retrieve the same effects on my graph.

On a 4KB page system, as you grow your strides, while they're still < 4K, you'll enjoy less and less utilization of each page [...] you'll have to access the 2nd level TLB on each access [...]

The cortex-A9 supports 4KB pages mode. Indeed as one can see in my graph up to strides == 4K, latencies are increasing, then, when it reachs 4K

you suddenly start benefiting again since you're actually skipping whole pages.