I have been adapting my code to support both iOS 7 and iOS 8. The app was originally built only for iOS 8, where it works perfectly, and I have been adjusting minor settings so that it supports iOS 7 as well. The only problem I am now experiencing is that on iOS 7 the controls in the bottom right corner are ignored. The home button in the bottom left corner works without problems, and if I move the two bottom-right controls to the bottom left, they work as well. It is as if there is a "mask" on top of the right side of the page view controller. It is a book application, so the page view is set up to support swiping between pages, like a normal book app layout.

So my question is: is there a way to remove this disabling overlay, or should I adjust some other setting to receive these touches? I have also added NSLog calls to the tap handlers of the bottom-right controls, but nothing is logged; the logs only appear when the controls are on the left side of the screen.

I also tried removing the tap recognizer from the page view, as shown here, but that did not work either:

    self.view.gestureRecognizers = self.pageViewController.gestureRecognizers;

    // Find the tap gesture recognizer so we can remove it!
    UIGestureRecognizer *tapRecognizer = nil;
    for (UIGestureRecognizer *recognizer in self.pageViewController.gestureRecognizers) {
        if ([recognizer isKindOfClass:[UITapGestureRecognizer class]]) {
            tapRecognizer = recognizer;
            break;
        }
    }

    if (tapRecognizer) {
        [self.view removeGestureRecognizer:tapRecognizer];
        [self.pageViewController.view removeGestureRecognizer:tapRecognizer];
    }

EDIT

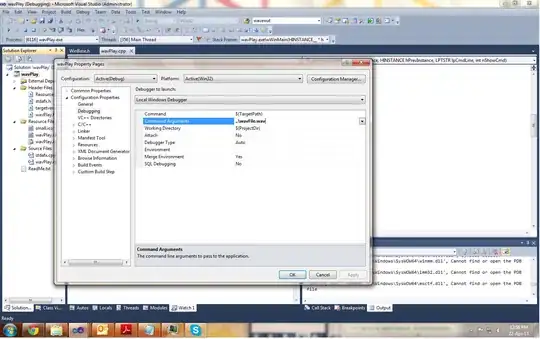

@Rory McKinnel - Here is the screenshot of the view hierarchy.

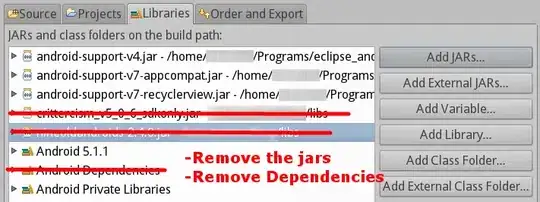

Here is a test where I put the close button of another view in the same position as the play button, and that button still works. The problem seems to occur only inside the page view.

UPDATE TO ISSUE

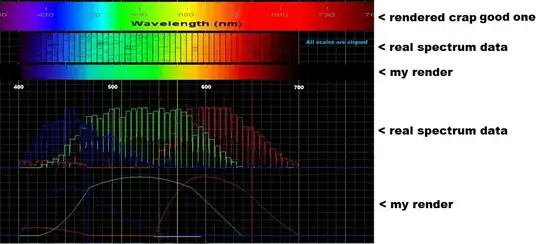

I played around with some logging to see which areas are affected. The app has a feature that lets the user tap a word in the book to have it highlighted and read out from an audio file. This tap event also only works on the left side of the screen. If I tap a word in the "ignored" area, the event is not fired, and the log statement in the handler is never reached.

I have measured it, and here is an estimate of how much of the screen each area covers. When I tap in the "ignored" part of the screen, the only thing that happens is the swipe to the next page.

For example, in the first line of the image I can tap and hear the word "Adipisicing", but not the word "elit".