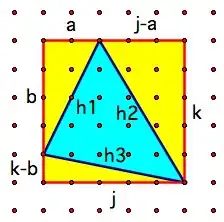

I'm trying to draw ellipses around the points of each group on a graph with matplotlib. I would like to obtain something like the picture above.

A dataset for a group (the red one for example) could look like this:

    [[-23.88315146 -3.26328266]   # first point
     [-25.94906669 -1.47440904]   # second point
     [-26.52423229 -4.84947907]]  # third point

I can easily draw the points on a graph, but I'm having trouble drawing the ellipses.

Each ellipse is centered at the mean of its group, (x_mean, y_mean), and has diameters of 2 * standard deviation: its width equals 2 * the standard deviation of the x values, and its height equals 2 * the standard deviation of the y values.
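A minimal sketch of the axis-aligned version described above, using the red group's three points. It assumes NumPy's default population standard deviation (`ddof=0`) and `matplotlib.patches.Ellipse`; the variable names are only illustrative. The angle is left at 0, which is exactly the part that is missing:

```python
import numpy as np
import matplotlib.pyplot as plt
from matplotlib.patches import Ellipse

# The three points of the red group, one (x, y) pair per row.
points = np.array([
    [-23.88315146, -3.26328266],
    [-25.94906669, -1.47440904],
    [-26.52423229, -4.84947907],
])

# Center of the ellipse: per-axis mean of the group.
x_mean, y_mean = points.mean(axis=0)
# Per-axis standard deviation (assumption: population std, ddof=0).
x_std, y_std = points.std(axis=0)

fig, ax = plt.subplots()
ax.scatter(points[:, 0], points[:, 1], color="red")

# Width and height are full diameters: 2 * standard deviation.
ellipse = Ellipse(
    xy=(x_mean, y_mean),
    width=2 * x_std,
    height=2 * y_std,
    angle=0,  # unknown rotation; 0 keeps the ellipse axis-aligned
    edgecolor="red",
    facecolor="none",
)
ax.add_patch(ellipse)

# plt.show()  # uncomment to display the figure
```

With `angle=0` the ellipse axes stay parallel to the x and y axes, so the result does not match the tilted ellipses in the picture.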

However, I don't know how to calculate the angle of each ellipse (as you can see in the picture, the ellipses are not perfectly vertical).

Do you have any idea how to do that?

Note: This question is a simplification of an LDA (Linear Discriminant Analysis) problem. I'm trying to reduce the problem to its most basic form.