I have a requirement to create an audio level visualizer with a custom pattern. I have the image set as PNGs. My current approach is like this:

1) Get the audio level of the microphone

2) Load the relevant image to the UIImageView based on the volume level.

    // audio level timer
    self.levelTimer = [NSTimer scheduledTimerWithTimeInterval:0.001 target:self selector:@selector(levelTimerCallback:) userInfo:nil repeats:YES];

Timer callback that updates the image view:

    - (void)levelTimerCallback:(NSTimer *)timer {
        [self.audioRecorder updateMeters];

        const double ALPHA = 0.05;
        double peakPowerForChannel = pow(10, (0.05 * [self.audioRecorder peakPowerForChannel:0]));
        lowPassResults = ALPHA * peakPowerForChannel + (1.0 - ALPHA) * lowPassResults;

        //NSLog(@"Average input: %f Peak input: %f Low pass results: %f", [self.audioRecorder averagePowerForChannel:0], [self.audioRecorder peakPowerForChannel:0], lowPassResults);

        if (lowPassResults > 0.0 && lowPassResults <= 0.05) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim1"];
        }
        if (lowPassResults > 0.06 && lowPassResults <= 0.10) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim2"];
        }
        if (lowPassResults > 0.11 && lowPassResults <= 0.15) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim3"];
        }
        if (lowPassResults > 0.16 && lowPassResults <= 0.20) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim4"];
        }
        if (lowPassResults > 0.21 && lowPassResults <= 0.25) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim5"];
        }
        if (lowPassResults > 0.26 && lowPassResults <= 0.30) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim6"];
        }
        if (lowPassResults > 0.31 && lowPassResults <= 0.35) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim7"];
        }
        if (lowPassResults > 0.36 && lowPassResults <= 0.40) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim8"];
        }
        if (lowPassResults > 0.41 && lowPassResults <= 0.45) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim9"];
        }
        if (lowPassResults > 0.46 && lowPassResults <= 0.50) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim10"];
        }
        if (lowPassResults > 0.51 && lowPassResults <= 0.55) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim11"];
        }
        if (lowPassResults > 0.56 && lowPassResults <= 0.60) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim12"];
        }
        if (lowPassResults > 0.61 && lowPassResults <= 0.65) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim13"];
        }
        if (lowPassResults > 0.66 && lowPassResults <= 0.70) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim14"];
        }
        if (lowPassResults > 0.71 && lowPassResults <= 0.75) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim15"];
        }
        if (lowPassResults > 0.76 && lowPassResults <= 0.80) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim16"];
        }
        if (lowPassResults > 0.81 && lowPassResults <= 0.85) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim17"];
        }
        if (lowPassResults > 0.86 && lowPassResults <= 0.90) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim18"];
        }
        if (lowPassResults > 0.86) {
            imgViewRecordAnimation.image = nil;
            imgViewRecordAnimation.image = [UIImage imageNamed:@"anim19"];
        }
    }

But the output is not as smooth as a real visualizer animation. What would be the best approach? Please share any better ways to do this.

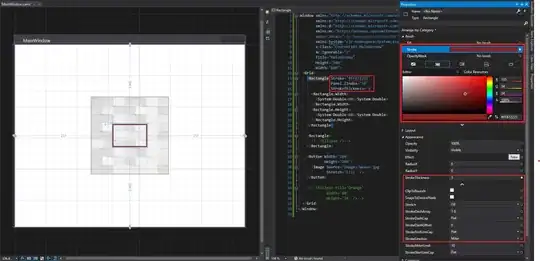

A couple of the images