You can use a simple map:

    from pyspark.sql import Row

    # Rebuild each row with an extra "day" field appended
    df.rdd.map(lambda row:
        Row(*(row.__fields__ + ["day"]))(*(row + (row.date_time.day,)))
    )

Another option is to register a function and run a SQL query:

    sqlContext.registerFunction("day", lambda x: x.day)
    sqlContext.registerDataFrameAsTable(df, "df")
    sqlContext.sql("SELECT *, day(date_time) as day FROM df")

Finally, you can define a UDF like this:

    from pyspark.sql.functions import udf
    from pyspark.sql.types import IntegerType

    day = udf(lambda date_time: date_time.day, IntegerType())
    df.withColumn("day", day(df.date_time))
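One caveat with the lambda-based approaches above: a Python UDF receives None for NULL values, so `date_time.day` raises an AttributeError on rows where the column is missing. A minimal sketch of a null-safe extractor, shown in plain Python so it can be tried without a cluster (the name `safe_day` is hypothetical, chosen for this illustration):

```python
from datetime import datetime


def safe_day(date_time):
    """Return the day of the month, or None when the input is None."""
    return None if date_time is None else date_time.day


# Behaves like the original lambda for real values...
print(safe_day(datetime(2016, 1, 6, 0, 4, 21)))
# ...and passes missing values through instead of raising.
print(safe_day(None))
```

Assuming the same setup as above, it should be possible to wrap it the same way, with `udf(safe_day, IntegerType())`.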

EDIT:

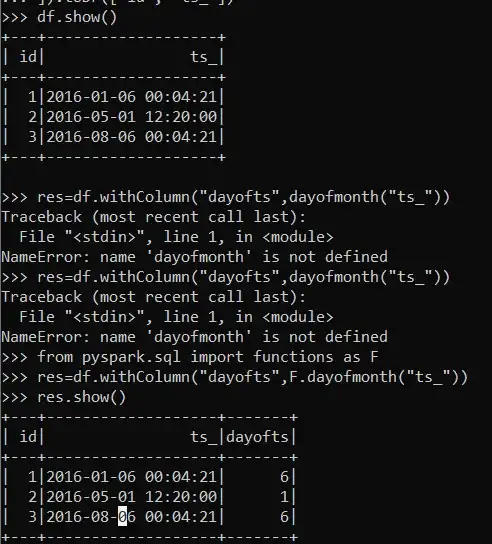

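Actually, if you use raw SQL, the day function is already defined (at least in Spark 1.4), so you can omit the UDF registration. Spark SQL also provides a number of other date processing functions, including getters such as year, month and dayofmonth, and date arithmetic functions such as date_add and datediff.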

It is also possible to use simple date expressions like:

    current_timestamp() - expr("INTERVAL 1 HOUR")

This means you can build relatively complex queries without passing data to Python. For example:

    from pyspark.sql.functions import (
        col, datediff, expr, lit, next_day, unix_timestamp)

    df = sc.parallelize([
        (1, "2016-01-06 00:04:21"),
        (2, "2016-05-01 12:20:00"),
        (3, "2016-08-06 00:04:21")
    ]).toDF(["id", "ts_"])

    now = lit("2016-06-01 00:00:00").cast("timestamp")
    five_months_ago = now - expr("INTERVAL 5 MONTHS")

    (df
        # Cast string to timestamp
        # For Spark 1.5 use cast("double").cast("timestamp")
        .withColumn("ts", unix_timestamp("ts_").cast("timestamp"))
        # Find all events in the last five months
        .where(col("ts").between(five_months_ago, now))
        # Find first Sunday after the event
        .withColumn("next_sunday", next_day(col("ts"), "Sun"))
        # Compute difference in days; datediff(end, start) is end - start
        .withColumn("diff", datediff(col("next_sunday"), col("ts"))))
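As a rough cross-check of which sample rows the query above keeps, and which Sunday it should find for each, the same logic can be sketched in plain Python with the standard datetime module. The helper `next_weekday` and the hard-coded `five_months_ago` value are assumptions made for this illustration and are not part of Spark. The helper mirrors next_day only in that it returns the first matching weekday strictly after the given date.

```python
from datetime import date, datetime, timedelta

SUNDAY = 6  # datetime.weekday(): Monday is 0, Sunday is 6


def next_weekday(d: date, weekday: int) -> date:
    """First date strictly after d that falls on the given weekday."""
    days_ahead = (weekday - d.weekday() - 1) % 7 + 1
    return d + timedelta(days=days_ahead)


events = [
    (1, "2016-01-06 00:04:21"),
    (2, "2016-05-01 12:20:00"),
    (3, "2016-08-06 00:04:21"),
]

now = datetime(2016, 6, 1)
# Stands in for now - INTERVAL 5 MONTHS; hard-coded for this sketch.
five_months_ago = datetime(2016, 1, 1)

result = []
for event_id, raw_ts in events:
    ts = datetime.strptime(raw_ts, "%Y-%m-%d %H:%M:%S")
    # Keep only events in the last five months (bounds inclusive)
    if not (five_months_ago <= ts <= now):
        continue
    next_sunday = next_weekday(ts.date(), SUNDAY)
    # Whole days between the event date and the following Sunday
    days_until = (next_sunday - ts.date()).days
    result.append((event_id, next_sunday, days_until))

for row in result:
    print(row)
```

Only the first two events fall inside the window. The second one is itself a Sunday, so the following Sunday is a full week later.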