I wanted to write generic upload code that could insert any data (text, images, video files, etc.) into a BLOB field and retrieve it again. I had assumed that converting the file into bytes and uploading those, then downloading with a similar approach, would work. What puzzles me is that although I can successfully download files in any format using the bytes approach, uploading files by converting them into bytes works only for PDF and text-related documents. When uploading image files, or even xls files, I observed that the byte size of the BLOB field is smaller than (update: does not match) that of the original file. These files were unreadable after downloading.

(I have come across a procedure in https://community.oracle.com/thread/1128742?tstart=0 which would help me in achieving what I want. But this would mean considerable rewriting in my current code. I hope there is a way out in my current code itself.)

My 'insert to blob' code as of now is :

Table structure :

    desc project_storage

    Name       Null Type
    ---------- ---- -------------
    PROJECT_ID      NUMBER(38)
    FILE_NAME       VARCHAR2(200)
    DOCUMENTS       BLOB
    ALIAS           VARCHAR2(50)
    FILE_TYPE       VARCHAR2(200)

Code:

    @RequestMapping(value="/insertProject.htm", method = { RequestMethod.POST}, headers="Accept=*/*")
    public String insert(@RequestParam Map<String,Object> parameters,@RequestParam CommonsMultipartFile file,Model model,HttpServletRequest req){
        try
        {
            log.info("Datasource || attempt to insert into project storage -Start()");
            if(file!=null)
            {
                String file_type=file.getContentType();
                String file_name=file.getOriginalFilename();
                String file_storage=file.getStorageDescription();
                long file_size=file.getSize();
                System.out.println("File type is : "+file_type);
                System.out.println("File name is :"+file_name);
                System.out.println("File size is"+file_size);
                System.out.println("Storage Description :"+file_storage);
                ByteArrayOutputStream barr = new ByteArrayOutputStream();
                ObjectOutputStream objOstream = new ObjectOutputStream(barr);
                objOstream.writeObject(file);
                objOstream.flush();
                objOstream.close();
                byte[] bArray = barr.toByteArray();
                // byte [] bArray=file.getBytes();
                // InputStream inputStream = new ByteArrayInputStream(bArray);
                //inputStream.read();
                Object objArray[]=new Object[]{file_name,bArray,parameters.get("alias"),file_type}; //bArray is our target.
                int result=dbUtil.saveData("Insert into Project_Storage(Project_Id,File_Name,Documents,Alias,File_Type) values(to_number(?),?,?,?,?)", objArray);
                model.addAttribute(result);
            }
        }
        catch(Exception e){
            System.out.println("Exception while inserting the documents"+e.toString());
            e.printStackTrace();
        }
        log.info("Datasource || attempt to insert new project -end()");
        return "admin/Result";
    }

Where am I going wrong? What exactly is being written to the BLOB field when the files are xls, image, or other non-text files?
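One way to narrow this down is to compare raw bytes with what `ObjectOutputStream.writeObject` produces for the same payload. The sketch below is a standalone check, not the controller itself: the class name `BlobBytesCheck`, the helper `serialize`, and the 6144-byte dummy array are assumptions made for illustration. It relies only on the fact that Java serialization prepends a stream header (starting with the bytes `AC ED`) and class metadata, so the serialized form is not the same byte sequence as the payload.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;

// Hypothetical diagnostic class; not part of the upload controller.
final class BlobBytesCheck {

    // Java-serializes any object, mirroring the writeObject(...) call in the controller.
    static byte[] serialize(Object value) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(value);
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] raw = new byte[6144];          // dummy payload standing in for file content
        byte[] serialized = serialize(raw);

        System.out.println("raw length        : " + raw.length);
        System.out.println("serialized length : " + serialized.length);
        // A serialization stream starts with the magic number 0xACED.
        System.out.printf("first two bytes   : %02X %02X%n",
                serialized[0] & 0xFF, serialized[1] & 0xFF);
    }
}
```

If the bytes read back from the `Documents` column also start with `AC ED`, that would suggest the column holds a serialized Java object rather than the file content itself.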

EDIT: I tried uploading a dummy Excel file, which is as follows:

The console output is as follows :

    [6/29/15 20:28:41:803 IST] 0000004d SystemOut O File type is : application/octet-stream
    [6/29/15 20:28:41:803 IST] 0000004d SystemOut O File name is :Game.xls
    [6/29/15 20:28:41:804 IST] 0000004d SystemOut O File size is: 6144 //this is original size of file.
    [6/29/15 20:28:41:804 IST] 0000004d SystemOut O Storage Description :in memory
    [6/29/15 20:28:41:805 IST] 0000004d SystemOut O DBUtil || saveData || Query : Insert into Project_Storage(Project_Id,File_Name,Documents,Alias,File_Type) values(to_number(?),?,?,?,?)|| Object : [Ljava.lang.Object;@1eecbb7
    [6/29/15 20:28:42:020 IST] 0000004d servlet I

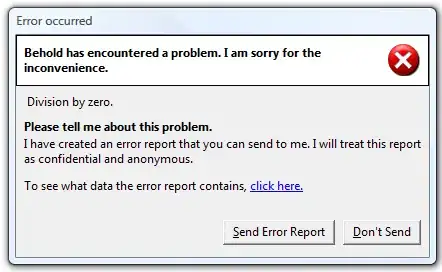

The downloaded file is of size 6.7 KB, and contains the data as follows:

This shows that this is an issue of data corruption. I had previously assumed that only part of the data was being stored.

EDIT: The file is being read through a form:

    <span class="btn btn-default btn-file" id="btn_upload">
    Browse <input type="file" name="file"></span>