Is it possible to implement a video codec using GLSL shaders, and, if practical, would it be any more efficient than a CPU codec?

-

AMD and Intel have at least implemented this with [VCE](http://developer.amd.com/community/blog/2014/02/19/introducing-video-coding-engine-vce/) and QuickSync respectively. – Alan Jul 04 '15 at 02:06

-

That doesn't seem to be implemented in shaders; AMD's website calls it "dedicated fixed-function". – user1090729 Jul 04 '15 at 02:54

-

Why not simply use the dedicated H.264 codec hardware present on modern GPUs? It's much more energy efficient and much easier to implement. – datenwolf May 30 '16 at 10:02

-

The question is of a theoretical nature. For this purpose you could assume that such a decoder is not available on your GPU, or that you wish to use a codec your hardware doesn't support, be it VP9, H.265 or whatever example you come up with. – user1090729 May 31 '16 at 07:40

2 Answers

Since GPUs are parallel processors, the codecs would have to be designed to exploit the pipeline. Codecs are either encoders or decoders; the shaders involved here are either vertex or fragment shaders.
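As a rough illustration of the kind of work that maps naturally onto fragment shaders, here is a sketch (in Python, not GLSL) of a decoder's final colour-conversion stage. Each output pixel depends only on its own Y/Cb/Cr samples, so every pixel could be one independent shader invocation. The full-range BT.601 (JFIF) coefficients and the function names are assumptions for illustration, not part of any particular codec's API.

```python
# Hypothetical sketch: the per-pixel YCbCr -> RGB stage a GPU decoder might
# run in a fragment shader. Assumes full-range BT.601 (JFIF) coefficients.

def clamp8(x: float) -> int:
    """Round and clamp a value to the 0..255 range of an 8-bit channel."""
    return max(0, min(255, int(round(x))))


def ycbcr_to_rgb(y: int, cb: int, cr: int) -> tuple:
    """Convert one full-range YCbCr sample to 8-bit RGB (the 'fragment' kernel)."""
    d_cb = cb - 128
    d_cr = cr - 128
    r = y + 1.402 * d_cr
    g = y - 0.344136 * d_cb - 0.714136 * d_cr
    b = y + 1.772 * d_cb
    return clamp8(r), clamp8(g), clamp8(b)


def convert_frame(y_plane, cb_plane, cr_plane):
    """Apply the per-pixel kernel to whole planes, standing in for one draw call."""
    return [
        [ycbcr_to_rgb(y, cb, cr) for y, cb, cr in zip(yr, cbr, crr)]
        for yr, cbr, crr in zip(y_plane, cb_plane, cr_plane)
    ]
```

Because no pixel reads another pixel's result, the loop order is irrelevant, which is exactly what lets a GPU run all of them at once.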

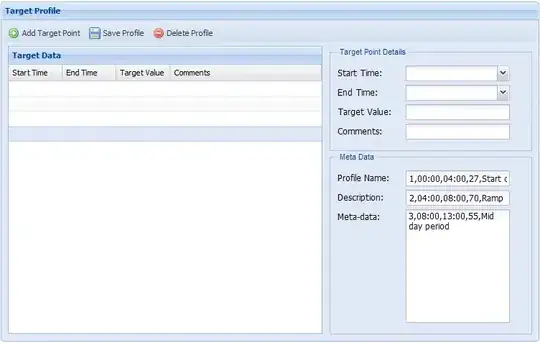

The pipeline architecture (stack diagram) would be:

The design should push as much of the work as possible into vertex shaders for efficient parallelism. A quadtree algorithm might be a good choice to isolate the fragments.
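One way to read the quadtree suggestion: recursively split a square region until each leaf is "flat enough", then emit each leaf as a quad for the shader stage. The sketch below uses max minus min as the flatness test; that criterion, the `threshold`/`min_size` parameters and the function name are all hypothetical choices, and it assumes a power-of-two square region.

```python
# Hypothetical quadtree partition: split a square block until each leaf's
# value range (max - min) is within `threshold`. Leaves are (x, y, size).

def quadtree_leaves(img, x, y, size, threshold=0, min_size=1):
    """Return leaves covering the size x size region at (x, y); img is row-major."""
    block = [img[j][x:x + size] for j in range(y, y + size)]
    values = [v for row in block for v in row]
    # Stop when the block is flat enough or can't be split further.
    if size <= min_size or max(values) - min(values) <= threshold:
        return [(x, y, size)]
    half = size // 2
    leaves = []
    for dy in (0, half):          # top row of children, then bottom row
        for dx in (0, half):      # left child, then right child
            leaves += quadtree_leaves(img, x + dx, y + dy, half, threshold, min_size)
    return leaves


flat = [[0] * 4 for _ in range(4)]
print(quadtree_leaves(flat, 0, 0, 4))  # a single leaf covering the whole block
```

Flat areas collapse into a few large quads, while detailed areas get many small ones, so the number of primitives tracks image complexity.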

The implementation would depend on the GPU targets. GPUs with Khronos Vulkan support (Vulkan being the successor API to OpenGL) are particularly well suited to this problem, since Vulkan allows command buffers to be recorded from multiple threads.

A high-end GPU codec implementation could easily outperform some hardware codecs, and would certainly blow the doors off any comparable CPU (software) codecs.

Dedicated hardware will always win eventually. Any good GPU codec could serve as a model for a much faster hardware codec, just as a good software codec could become a faster GPU codec.


-

This works for audio textures too. What is very interesting is that any GLSL decoder could simply be included in a media container (MP4, etc.). Standardized external codecs (H.264, etc.) would no longer be required. Every producer could have their own secret encoder! – Dominic Cerisano May 30 '16 at 16:09

-

Would that really be practical, though? Wouldn't you have to copy it back from the GPU to be able to play it over some other sound card? – user1090729 May 31 '16 at 07:31

-

Could you expand on that? What does that have to do with the technical implications of the approach? – user1090729 Jun 01 '16 at 07:36

-

The link above is about CUDA, not GLSL. Personally, I'm curious whether it's possible to implement a GPU video decoder that uses OpenGL for the parallelizable parts of the algorithm (per-block operations like the DCT), so that it is portable. Among other things, it could then be used on mobile devices, where there's usually much less CPU power than on desktop systems and OpenGL is the only universally available API that gives apps access to the GPU. – Grishka Jun 11 '16 at 18:45
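To make the "per-block" point concrete, here is a Python sketch of an 8×8 2-D DCT-II (orthonormal scaling) written so that each coefficient F(u, v) is computed by its own call. Each call reads the whole block but writes only one output, which is the shape a fragment shader needs: one invocation per coefficient. This is the textbook floating-point transform, assumed for illustration; a decoder would run the inverse transform, which has the same structure, and real codecs such as H.264 use integer approximations instead.

```python
import math

N = 8  # block size assumed for this sketch (JPEG/MPEG-2 style)


def dct_coefficient(block, u, v):
    """One orthonormal 2-D DCT-II coefficient F(u, v) of an N x N block.

    Independent of every other (u, v), so each could be one shader invocation.
    """
    cu = math.sqrt(1 / N) if u == 0 else math.sqrt(2 / N)
    cv = math.sqrt(1 / N) if v == 0 else math.sqrt(2 / N)
    total = 0.0
    for x in range(N):
        for y in range(N):
            total += (block[x][y]
                      * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                      * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
    return cu * cv * total


def dct_block(block):
    """Compute all N*N coefficients; on a GPU these would run in parallel."""
    return [[dct_coefficient(block, u, v) for v in range(N)] for u in range(N)]
```

For a constant block, all the energy lands in the DC term F(0, 0) and every other coefficient is (numerically) zero.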

-

It would only be faster in GLSL vs CUDA. Codec algorithms (e.g. H.264) are mostly bitwise operations. GLSL can easily handle those as part of a GPU codec pipeline, relieving compression/decompression bottlenecks. – Dominic Cerisano Aug 03 '16 at 01:51
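As an example of the bitwise work involved, here is a Python sketch of an MSB-first bit reader decoding H.264's unsigned Exp-Golomb codes, ue(v): count leading zero bits n, skip the 1, then read n more bits, giving 2^n − 1 plus those bits. The class and method names are hypothetical. Note that where each code starts depends on the lengths of all the codes before it, so this entropy-decoding step is naturally sequential within a slice rather than per-pixel parallel.

```python
class BitReader:
    """Hypothetical MSB-first bit reader over a bytes object."""

    def __init__(self, data: bytes):
        self.data = data
        self.pos = 0  # current position, in bits

    def read_bit(self) -> int:
        byte = self.data[self.pos >> 3]            # which byte holds the bit
        bit = (byte >> (7 - (self.pos & 7))) & 1   # MSB-first within the byte
        self.pos += 1
        return bit

    def read_bits(self, n: int) -> int:
        value = 0
        for _ in range(n):
            value = (value << 1) | self.read_bit()
        return value

    def read_ue(self) -> int:
        """Unsigned Exp-Golomb code: n leading zeros, a 1, then n info bits."""
        leading_zeros = 0
        while self.read_bit() == 0:
            leading_zeros += 1
        return (1 << leading_zeros) - 1 + self.read_bits(leading_zeros)


# Bits 1 | 010 | 011 | 00100 (zero-padded) encode the values 0, 1, 2, 3.
reader = BitReader(bytes([0b10100110, 0b01000000]))
print([reader.read_ue() for _ in range(4)])
```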

Well, all Turing-complete systems are capable of accomplishing the same things; the only differences are speed and efficiency. So if you read this question as "Are GLSL shaders Turing complete?", the answer can be found here:

Are GPU shaders Turing complete

TL;DR: Shader Model 3.0: yes. Others: maybe (but probably not).

As for being more efficient: probably not. Other GPGPU approaches (CUDA, SIMD) will most likely work better.