ORIGINAL PROBLEM

I wrote a custom script that copies data from a remote SQL Server into a local copy in our office. A bug in the script caused some data to be inserted twice into certain tables, creating duplicates. No table in any of our databases should contain duplicate rows.

This issue has made me paranoid that other tables may have had this problem historically, and therefore I'd like to verify this.

SOLUTION

I have written a SQL script that, for every table in every database on our server (excluding the 4 system databases), compares the total row count with the count of distinct rows across all columns and records the tables where the two differ:

    DECLARE @TableFullName AS NVARCHAR(MAX)
    DECLARE @SQLQuery AS NVARCHAR(MAX)
    DECLARE @TableHasDuplicates AS BIT
    DECLARE @TempTableRowCount AS INT
    DECLARE @ResultsTable TABLE ([CompleteTableName] NVARCHAR(200), [CountAll] INT, [CountDistinct] INT)
    DECLARE @CountAll INT
    DECLARE @CountDistinct INT

    SET NOCOUNT ON

    -- List every table in every database as [database].[schema].[table]
    DECLARE @AllTables TABLE ([CompleteTableName] NVARCHAR(200))

    INSERT INTO @AllTables ([CompleteTableName])
    EXEC sp_msforeachdb 'SELECT ''['' + [TABLE_CATALOG] + ''].['' + [TABLE_SCHEMA] + ''].['' + [TABLE_NAME] + '']'' FROM [?].INFORMATION_SCHEMA.TABLES'

    SET NOCOUNT OFF;

    -- Skip the four system databases. Match on the leading database name only,
    -- so user tables whose names merely contain e.g. "model" are still checked.
    DECLARE [table_cursor] CURSOR FOR
    (SELECT *
     FROM @AllTables
     WHERE [CompleteTableName] NOT LIKE '[[]master].%' AND [CompleteTableName] NOT LIKE '[[]msdb].%' AND [CompleteTableName] NOT LIKE '[[]tempdb].%' AND [CompleteTableName] NOT LIKE '[[]model].%');

    OPEN [table_cursor]

    FETCH NEXT FROM [table_cursor]
    INTO @TableFullName

    WHILE @@FETCH_STATUS = 0
    BEGIN
        -- Compare the total row count with the distinct row count for the current table
        SET @SQLQuery = 'SELECT @CntAll = COUNT(*) FROM ' + @TableFullName + ' SELECT @CntDistinct = COUNT(*) FROM (SELECT DISTINCT * FROM ' + @TableFullName + ') AS [sq] IF @CntAll > @CntDistinct SELECT @BitResult=1 ELSE SELECT @BitResult=0';

        EXEC sp_executesql @SQLQuery, N'@BitResult BIT OUTPUT, @CntAll INT OUTPUT, @CntDistinct INT OUTPUT', @BitResult = @TableHasDuplicates OUTPUT, @CntAll = @CountAll OUTPUT, @CntDistinct = @CountDistinct OUTPUT;

        -- Keep only the tables where some rows are repeated
        IF @TableHasDuplicates = 1
        BEGIN
            INSERT INTO @ResultsTable ([CompleteTableName], [CountAll], [CountDistinct])
            SELECT @TableFullName, @CountAll, @CountDistinct
        END;

        FETCH NEXT FROM [table_cursor]
        INTO @TableFullName
    END

    CLOSE [table_cursor];
    DEALLOCATE [table_cursor];

    -- Report how many tables were flagged
    SELECT @TempTableRowCount = COUNT(*) FROM @ResultsTable
    PRINT N'There were ' + CAST(@TempTableRowCount AS NVARCHAR(10)) + N' tables with potential duplicate data'

    SELECT *
    FROM @ResultsTable

In overview: sp_msforeachdb queries INFORMATION_SCHEMA.TABLES in each database to fill the table variable @AllTables with every table on the server (there are 16537 tables). A cursor then iterates over the non-system entries, and for each table dynamic SQL computes a row count and a distinct row count. Tables where the two counts differ are stored in another table variable, @ResultsTable.
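To make the per-table check concrete, here is roughly what the dynamic SQL does for a single table. This is only an illustrative sketch: the table variable @Example and its sample rows are made up and stand in for one real table from @AllTables.

```sql
-- Hypothetical stand-in for one table taken from @AllTables
DECLARE @Example TABLE ([Id] INT, [Name] NVARCHAR(50));
INSERT INTO @Example ([Id], [Name]) VALUES (1, N'a'), (2, N'b'), (2, N'b');

DECLARE @CntAll INT, @CntDistinct INT, @BitResult BIT;

-- Total number of rows
SELECT @CntAll = COUNT(*) FROM @Example;

-- Number of distinct rows, comparing every column
SELECT @CntDistinct = COUNT(*)
FROM (SELECT DISTINCT * FROM @Example) AS [sq];

-- Flag the table when at least one row is repeated
IF @CntAll > @CntDistinct SELECT @BitResult = 1 ELSE SELECT @BitResult = 0;

-- Returns CountAll = 3, CountDistinct = 2, HasDuplicates = 1
SELECT @CntAll AS [CountAll], @CntDistinct AS [CountDistinct], @BitResult AS [HasDuplicates];
```

The SELECT DISTINCT * step has to compare whole rows, so the cost of each check grows with both the number of rows and the width of the table.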

THE PROBLEM WITH THIS SOLUTION

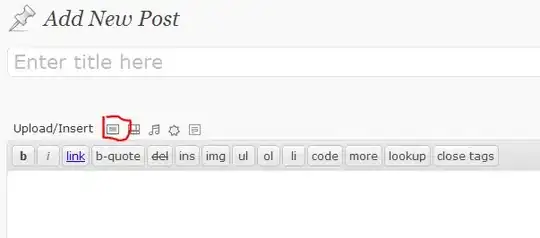

When I run this query, it will run for circa 3 minutes then throw an error saying that the tempdb PRIMARY filegroup is full:

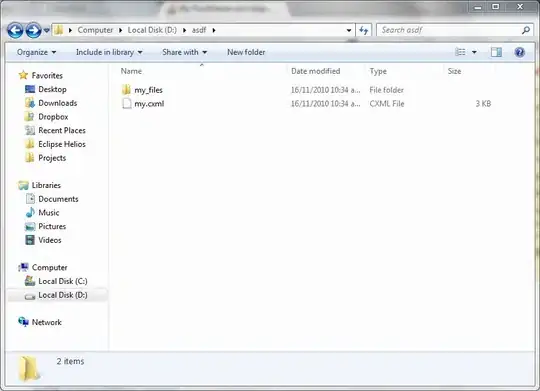

I am my own DBA and used Brent Ozar's guide to set up my SQL Server instance. My tempdb is set up with 8 x 3GB mdf/ndf files (the server has 8 cores):

These files show as having 23997MB available under 'General' properties.
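One way to cross-check that figure while the script is running would be to query the sys.dm_db_file_space_usage view in tempdb from a second session. The following is a minimal sketch, assuming the standard 8 KB page size; the column aliases are my own, and I have not tied the numbers back to the error above.

```sql
USE tempdb;

-- Page counts are summed over all tempdb data files and converted to MB (8 KB pages)
SELECT
    SUM(unallocated_extent_page_count) * 8 / 1024       AS [FreeMB],
    SUM(user_object_reserved_page_count) * 8 / 1024     AS [UserObjectsMB],      -- temp tables, table variables
    SUM(internal_object_reserved_page_count) * 8 / 1024 AS [InternalObjectsMB],  -- work tables for sorts, hashes, spools
    SUM(version_store_reserved_page_count) * 8 / 1024   AS [VersionStoreMB]
FROM sys.dm_db_file_space_usage;
```

Running this repeatedly during the 3 minutes before the failure should show which category is growing as free space shrinks.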

MY QUESTIONS

- If I have circa 24GB of tempdb free space, why is this relatively simple query running out of tempdb space?

- Is there a better/more efficient way of getting a count and distinct count of all tables in all databases?