I'm deploying a Django app on Heroku, and most of the time in my requests is spent in the `psycopg2:connect` function.

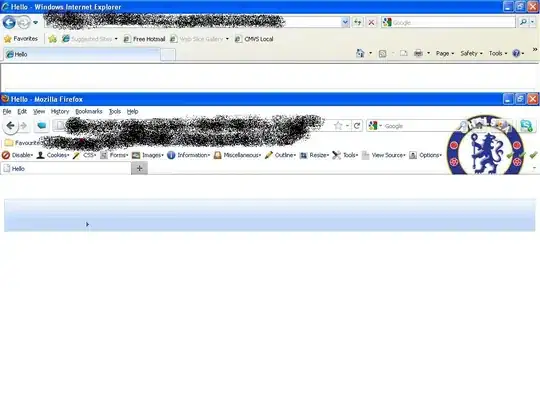

See the New Relic graphs (blue is psycopg2:connect):

I don't think spending 60% of the request time on establishing the database connection is reasonable.

I tried using connection pooling with django-postgrespool but didn't notice any difference.
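For context, here is a minimal sketch of the two settings usually involved in connection reuse. It is not the exact configuration from this app: the helper `database_config`, the fallback URL, and the `600`-second value are placeholders chosen for illustration. `CONN_MAX_AGE` is Django's built-in persistent-connection setting (Django 1.6+), and `django_postgrespool` is the engine name that django-postgrespool documents as a drop-in replacement.

```python
# settings.py (sketch): all values below are placeholders, not the real settings.
import os
from urllib.parse import urlparse


def database_config(url, engine="django.db.backends.postgresql_psycopg2",
                    conn_max_age=0):
    """Build a Django DATABASES entry from a postgres:// URL (hypothetical helper)."""
    parts = urlparse(url)
    return {
        "ENGINE": engine,  # "django.db.backends.postgresql" on Django 1.9+
        "NAME": parts.path.lstrip("/"),
        "USER": parts.username,
        "PASSWORD": parts.password,
        "HOST": parts.hostname,
        "PORT": parts.port,
        # 0 closes the connection after every request (Django's default);
        # a positive number keeps it open for that many seconds.
        "CONN_MAX_AGE": conn_max_age,
    }


# Heroku exposes the database location in the DATABASE_URL environment variable.
DATABASE_URL = os.environ.get(
    "DATABASE_URL", "postgres://user:secret@localhost:5432/mydb"
)

# Option A: Django's built-in persistent connections.
DATABASES = {"default": database_config(DATABASE_URL, conn_max_age=600)}

# Option B: pooling via django-postgrespool, which swaps the engine.
# DATABASES["default"]["ENGINE"] = "django_postgrespool"
```

With either option, a worker thread only pays the connection cost when it has no open connection to reuse, so `psycopg2:connect` should show up far less often if the setting is actually in effect.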

I'm using waitress as the server (as per this article http://blog.etianen.com/blog/2014/01/19/gunicorn-heroku-django/).

The app is running on a Hobby dyno with a Hobby Basic PostgreSQL database (would upgrading improve this?).

Any pointers as to how I can speed up these connections?
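One way to tell whether the time goes to the connection itself or to something else in the request is to time `psycopg2.connect` in isolation, for example from a one-off dyno. The sketch below assumes the connection string is available in `DATABASE_URL`; the function names `time_connect` and `measure_database` are made up for illustration.

```python
import time


def time_connect(connect, attempts=5):
    """Return the wall-clock seconds taken by each call to connect()."""
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        conn = connect()
        timings.append(time.perf_counter() - start)
        # Close the connection so every attempt opens a fresh one.
        close = getattr(conn, "close", None)
        if close is not None:
            close()
    return timings


def measure_database():
    """Time real connections; needs psycopg2 and DATABASE_URL (assumed setup)."""
    import os
    import psycopg2

    dsn = os.environ["DATABASE_URL"]
    for seconds in time_connect(lambda: psycopg2.connect(dsn)):
        print("connect took %.1f ms" % (seconds * 1000))
```

If the isolated timings are much lower than what New Relic reports, the slowdown is more likely in how the app opens connections per request than in the database plan itself.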

[UPDATE] I did some more digging, and this doesn't seem to be a problem when using the Django REST framework browsable API:

In the previous screenshot, requests made after 14:20 go to the same views but without `?format=json`, and `psycopg2:connect` is much faster for them. Maybe there's a configuration issue somewhere in Django REST framework?