After trying a lot of different fonts and OCR engines, I tend to get the best results with Consolas. It is a monospaced typeface like OCR-A, but easier for humans to read. Consolas ships with several Microsoft products.

There is also an open source font, Inconsolata, which is influenced by Consolas. Inconsolata is a good replacement for Consolas, especially if the licensing terms of Consolas are a concern for your project.

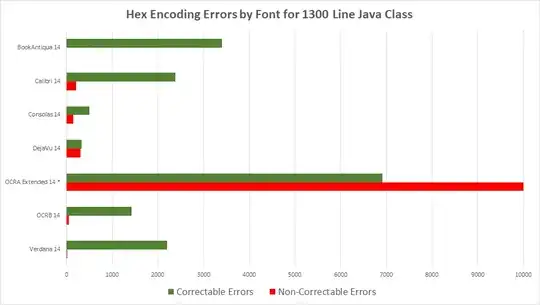

In my tests, digits and spaces set in Calibri were not always recognized correctly. OCR-A produced lots of reading errors. I did not try MICR, since most humans find it hard to read.
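
If you want to repeat this kind of comparison yourself, one common way to score a font is the character error rate: the edit distance between the text you printed and the text the OCR engine returned, divided by the length of the printed text. Below is a minimal sketch, assuming you already have the OCR output as plain strings. The function names, the labels `font_a` and `font_b`, and the sample strings are made up for illustration; they are not results from my tests.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum number of single-character
    insertions, deletions, and substitutions to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def character_error_rate(expected: str, recognized: str) -> float:
    """Edit distance normalized by the length of the expected text."""
    if not expected:
        raise ValueError("expected text must not be empty")
    return edit_distance(expected, recognized) / len(expected)


# Hypothetical ground truth and OCR outputs (illustrative only).
expected = "INV 2017 0042"
ocr_outputs = {
    "font_a": "INV 2017 0042",  # read perfectly
    "font_b": "INV 2O17 0042",  # digit 0 misread as letter O
}

rates = {font: character_error_rate(expected, text)
         for font, text in ocr_outputs.items()}

# Print fonts from lowest to highest error rate.
for font, rate in sorted(rates.items(), key=lambda kv: kv[1]):
    print(f"{font}: {rate:.1%}")
```

Run it over a realistic sample of your own documents, including plenty of digits and spaces, since those are where the differences between fonts tend to show up.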

Note: Tesseract requires a lot of testing and fine-tuning before it becomes reliable. In our case we switched to a commercially licensed OCR engine (ABBYY), because reliability was very important and we needed to support multiple (European) languages.

Update: 2017 Jan 31 - Changed 'based on Consolas' to 'influenced by Consolas' due to potential copyright issues.