I posted another question on a similar topic a few days ago.

I have managed to get at least a "working" solution now, meaning that the process itself seems to work correctly. But as I am a bloody beginner with Spark, I seem to have missed some things about how to build this kind of application correctly (performance- and computation-wise).

What I want to do:

1. Load history data from ElasticSearch upon application startup
2. Start listening to a Kafka topic on startup (with sales events, passed as JSON strings) with Spark Streaming
3. For each incoming RDD, do an aggregation per user
4. Union the results from 3. with the history
5. Aggregate the new values, such as total revenue, per user
6. Use the results from 5. as new "history" for the next iteration
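To make the intended logic of steps 3 to 6 concrete, here is a minimal sketch using plain Scala collections instead of Spark. It is only a model of what I am trying to compute, not the application itself: the `HistoryModel` object, the `SaleEvent` and `UserAgg` case classes, the helper names, the sample values, and the use of `Long` timestamps are all assumptions made up for illustration.

```scala
// Hypothetical model of steps 3 to 6 with plain Scala collections (no Spark).
// All names and sample values here are illustrative assumptions.
object HistoryModel {
  case class SaleEvent(userId: Int, saleTimestamp: Long, totalRevenue: Double, totalPoints: Long)
  case class UserAgg(userId: Int, latestSaleTimestamp: Long, totalRevenue: Double, totalPoints: Long)

  // Step 3: aggregate one batch of sale events per user.
  def aggregateBatch(events: Seq[SaleEvent]): Seq[UserAgg] =
    events.groupBy(_.userId).map { case (userId, es) =>
      UserAgg(userId, es.map(_.saleTimestamp).max, es.map(_.totalRevenue).sum, es.map(_.totalPoints).sum)
    }.toSeq

  // Steps 4 and 5: union the batch result with the history, then aggregate again per user.
  def merge(history: Seq[UserAgg], batch: Seq[UserAgg]): Seq[UserAgg] =
    (history ++ batch).groupBy(_.userId).map { case (userId, rows) =>
      UserAgg(userId, rows.map(_.latestSaleTimestamp).max, rows.map(_.totalRevenue).sum, rows.map(_.totalPoints).sum)
    }.toSeq.sortBy(_.userId)

  def main(args: Array[String]): Unit = {
    // Stand-in for the history loaded once at startup (step 1).
    var history = Seq(UserAgg(23, 100L, 12.05, 91L), UserAgg(24, 200L, 7.05, 41L))
    // Stand-in for the micro-batches arriving from the stream (step 2).
    val batches = Seq(
      Seq(SaleEvent(23, 300L, 5.0, 10L), SaleEvent(23, 310L, 2.5, 5L)),
      Seq(SaleEvent(24, 400L, 1.0, 2L))
    )
    // Step 6: the merged result becomes the history for the next batch.
    for (batch <- batches) {
      history = merge(history, aggregateBatch(batch))
      history.foreach(println)
    }
  }
}
```

In this model the new `history` is a fully materialized collection after every batch, so each iteration costs the same. That is the behaviour I would like to get from the Spark version as well.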

My code is the following:

    import kafka.serializer.StringDecoder
    import org.apache.spark.streaming._
    import org.apache.spark.streaming.kafka._
    import org.apache.spark.{SparkContext, SparkConf}
    import org.apache.spark.sql.{DataFrame, SaveMode, SQLContext}
    import org.elasticsearch.spark.sql._
    import org.apache.log4j.Logger
    import org.apache.log4j.Level

    object ReadFromKafkaAndES {
      def main(args: Array[String]) {
        Logger.getLogger("org").setLevel(Level.WARN)
        Logger.getLogger("akka").setLevel(Level.WARN)
        Logger.getLogger("kafka").setLevel(Level.WARN)

        val checkpointDirectory = "/tmp/Spark"
        val conf = new SparkConf().setAppName("Read Kafka JSONs").setMaster("local[4]")
        conf.set("es.nodes", "localhost")
        conf.set("es.port", "9200")

        val topicsSet = Array("sales").toSet

        val sc = new SparkContext(conf)
        val ssc = new StreamingContext(sc, Seconds(15))
        ssc.checkpoint(checkpointDirectory)

        //Create SQLContext
        val sqlContext = new SQLContext(sc)

        //Get history data from ES
        var history = sqlContext.esDF("data/salesaggregation")

        //Kafka settings
        val kafkaParams = Map[String, String]("metadata.broker.list" -> "localhost:9092")

        // Create direct kafka stream with brokers and topics
        val messages = KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder](
          ssc, kafkaParams, topicsSet)

        //Iterate
        messages.foreachRDD { rdd =>

          //If data is present, continue
          if (rdd.count() > 0) {

            //Register temporary table for the aggregated history
            history.registerTempTable("history")

            println("--- History -------------------------------")
            history.show()

            //Parse JSON as DataFrame
            val saleEvents = sqlContext.read.json(rdd.values)

            //Register temporary table for sales events
            saleEvents.registerTempTable("sales")

            val sales = sqlContext.sql("select userId, cast(max(saleTimestamp) as Timestamp) as latestSaleTimestamp, sum(totalRevenue) as totalRevenue, sum(totalPoints) as totalPoints from sales group by userId")

            println("--- Sales ---------------------------------")
            sales.show()

            val agg = sqlContext.sql("select a.userId, max(a.latestSaleTimestamp) as latestSaleTimestamp, sum(a.totalRevenue) as totalRevenue, sum(a.totalPoints) as totalPoints from ((select userId, latestSaleTimestamp, totalRevenue, totalPoints from history) union all (select userId, cast(max(saleTimestamp) as Timestamp) as latestSaleTimestamp, sum(totalRevenue) as totalRevenue, sum(totalPoints) as totalPoints from sales group by userId)) a group by userId")

            println("--- Aggregation ---------------------------")
            agg.show()

            //This is our new "history"
            history = agg

            //Cache results
            history.cache()

            //Drop temporary table
            sqlContext.dropTempTable("history")
          }
        }

        // Start the computation
        ssc.start()
        ssc.awaitTermination()
      }
    }

The computations seem to work correctly:

    --- History -------------------------------
    +--------------------+--------------------+-----------+------------+------+
    | latestSaleTimestamp|         productList|totalPoints|totalRevenue|userId|
    +--------------------+--------------------+-----------+------------+------+
    |2015-07-22 10:03:...|Buffer(47, 1484, ...|         91|       12.05|    23|
    |2015-07-22 12:50:...|Buffer(256, 384, ...|         41|        7.05|    24|
    +--------------------+--------------------+-----------+------------+------+

    --- Sales ---------------------------------
    +------+--------------------+------------------+-----------+
    |userId| latestSaleTimestamp|      totalRevenue|totalPoints|
    +------+--------------------+------------------+-----------+
    |    23|2015-07-29 09:17:...|            255.59|        208|
    |    24|2015-07-29 09:17:...|226.08999999999997|        196|
    +------+--------------------+------------------+-----------+

    --- Aggregation ---------------------------
    +------+--------------------+------------------+-----------+
    |userId| latestSaleTimestamp|      totalRevenue|totalPoints|
    +------+--------------------+------------------+-----------+
    |    23|2015-07-29 09:17:...| 267.6400001907349|        299|
    |    24|2015-07-29 09:17:...|233.14000019073484|        237|
    +------+--------------------+------------------+-----------+

However, when the application runs for several iterations, I can see that the performance deteriorates:

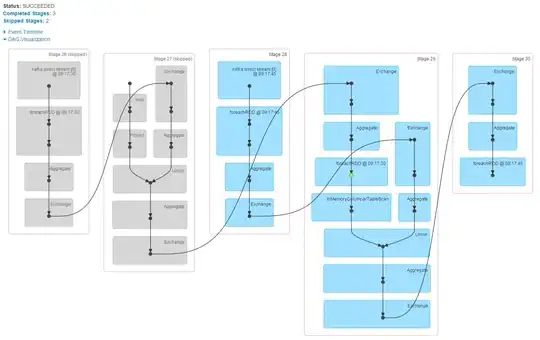

I also see a high number of skipped tasks, which increases with every iteration:

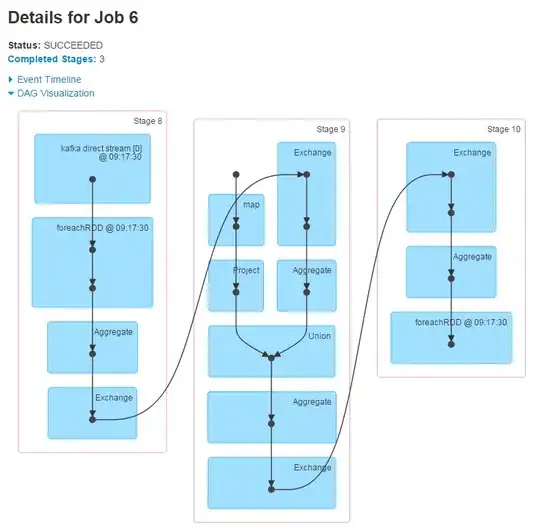

The first iteration's graph looks like this: [image missing]

The second iteration's graph looks like this: [image missing]

The more iterations have passed, the longer the graph gets, with lots of skipped steps.

Basically, I think the problem lies in how I store each iteration's results for the next iteration. Unfortunately, even after trying a lot of different things and reading the docs, I have not been able to come up with a solution. Any help is greatly appreciated. Thanks!