My question is about building a simple program that detect digits inside images, i did some researches and found this topic Simple OCR digits on stack and i found it very educational, so i wanted to us it for my own need.

My training data image is like:

The code i used to build the dataset is: (i did some modifications to Abid Rahman's code so it can hundle my case)

import sys

import numpy as np

import cv2

im = cv2.imread('data_set_trans.png')

im3 = im.copy()

gray = cv2.cvtColor(im,cv2.COLOR_BGR2GRAY)

blur = cv2.GaussianBlur(gray,(5,5),0)

thresh = cv2.adaptiveThreshold(blur,255,1,1,11,2)

################# Now finding Contours ###################

contours,hierarchy = cv2.findContours(thresh,cv2.RETR_LIST,cv2.CHAIN_APPROX_SIMPLE)

samples = np.empty((0,100))

responses = []

keys = [i for i in range(48,58)]

for cnt in contours:

if cv2.contourArea(cnt)>20:

[x,y,w,h] = cv2.boundingRect(cnt)

if h>=10:

cv2.rectangle(im,(x,y),(x+w,y+h),(0,0,255),2)

roi = thresh[y:y+h,x:x+w]

roismall = cv2.resize(roi,(10,10))

cv2.imshow('norm',im)

print "Begin wait"

key = cv2.waitKey(1)

key = raw_input('What is the number ?') #cv2.waitKey didnt work for me so i add this line

if key == -1: # (-1 to quit)

sys.exit()

else:

responses.append(int(key))

sample = roismall.reshape((1,100))

samples = np.append(samples,sample,0)

responses = np.array(responses,np.float32)

responses = responses.reshape((responses.size,1))

print "training complete"

np.savetxt('generalsamples.data',samples)

np.savetxt('generalresponses.data',responses)

I used the same training data image as testing part, in order to get the best results accuracy and see if i am on the right way:

import cv2

import numpy as np

import collections

####### training part ###############

samples = np.loadtxt('generalsamples.data',np.float32)

responses = np.loadtxt('generalresponses.data',np.float32)

responses = responses.reshape((responses.size,1))

model = cv2.KNearest()

model.train(samples,responses)

############################# testing part #########################

im = cv2.imread('one_white_1.png')

out = np.zeros(im.shape,np.uint8)

gray = cv2.cvtColor(im,cv2.COLOR_BGR2GRAY)

thresh = cv2.adaptiveThreshold(gray,255,1,1,11,2)

contours,hierarchy = cv2.findContours(thresh,cv2.RETR_LIST,cv2.CHAIN_APPROX_SIMPLE)

for cnt in contours:

if cv2.contourArea(cnt)>20:

[x,y,w,h] = cv2.boundingRect(cnt)

if h>=10:

cv2.rectangle(im,(x,y),(x+w,y+h),(0,255,0),2)

roi = thresh[y:y+h,x:x+w]

roismall = cv2.resize(roi,(10,10))

roismall = roismall.reshape((1,100))

roismall = np.float32(roismall)

retval, results, neigh_resp, dists = model.find_nearest(roismall, k = 1)

string = str(int((results[0][0])))

cv2.putText(out,string,(x,y+h),1,1,(0,255,0))

cv2.imshow('im',im)

cv2.imshow('out',out)

#cv2.waitKey(0)

raw_input('Tape to exit')

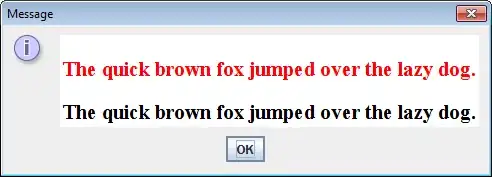

The result was as like that:

As you can see it's completely wrong.

I don't know what i'm missing or if it my case is more particular and can't be handled by this digit OCR system ????

If someone could help me by any idea

I notice that i am using python 2.7 open-cv 2.4.11 numpy 1.9 and mac os 10.10.4

Thanks