I believe you're correct in that there isn't an easy way to do welch corrected anova with resampling, but it should be possible to hobble a few things together to make it work.

require('Ecdat')

I'll use the “Star” dataset from the “Ecdat" package which looks at the effects of small class sizes on standardized test scores.

star<-Star

attach(star)

head(star)

tmathssk treadssk classk totexpk sex freelunk race schidkn

2 473 447 small.class 7 girl no white 63

3 536 450 small.class 21 girl no black 20

5 463 439 regular.with.aide 0 boy yes black 19

11 559 448 regular 16 boy no white 69

12 489 447 small.class 5 boy yes white 79

13 454 431 regular 8 boy yes white 5

Some exploratory analysis:

#bloxplots

boxplot(treadssk ~ classk, ylab="Total Reading Scaled Score")

title("Reading Scores by Class Size")

#histograms

hist(treadssk, xlab="Total Reading Scaled Score")

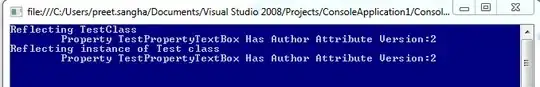

Run regular anova

model1 = aov(treadssk ~ classk, data = star)

summary(model1)

Df Sum Sq Mean Sq F value Pr(>F)

classk 2 37201 18601 18.54 9.44e-09 ***

Residuals 5745 5764478 1003

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

A look at the anova residuals

#qqplot

qqnorm(residuals(model1),ylab="Reading Scaled Score")

qqline(residuals(model1),ylab="Reading Scaled Score")

qqplot shows that ANOVA residuals deviate from the normal qqline

#Fitted Y vs. Residuals

plot(fitted(model1), residuals(model1))

Fitted Y vs. Residuals shows converging trend in the residuals, can test with a Shapiro-Wilk test just to be sure

shapiro.test(treadssk[1:5000]) #shapiro.test contrained to sample sizes between 3 and 5000

Shapiro-Wilk normality test

data: treadssk[1:5000]

W = 0.92256, p-value < 2.2e-16

Just confirms that we aren't going to be able to assume a normal distribution.

We can use bootstrap to estimate the true F-dist.

#Bootstrap version (with 10,000 iterations)

mean_read = mean(treadssk)

grpA = treadssk[classk=="regular"] - mean_read[1]

grpB = treadssk[classk=="small.class"] - mean_read[2]

grpC = treadssk[classk=="regular.with.aide"] - mean_read[3]

sim_classk <- classk

R = 10000

sim_Fstar = numeric(R)

for (i in 1:R) {

groupA = sample(grpA, size=2000, replace=T)

groupB = sample(grpB, size=1733, replace=T)

groupC = sample(grpC, size=2015, replace=T)

sim_score = c(groupA,groupB,groupC)

sim_data = data.frame(sim_score,sim_classk)

}

Now we need to get the set of unique pairs of the Group factor

allPairs <- expand.grid(levels(sim_data$sim_classk), levels(sim_data$sim_classk))

## http://stackoverflow.com/questions/28574006/unique-combination-of-two-columns-in-r/28574136#28574136

allPairs <- unique(t(apply(allPairs, 1, sort)))

allPairs <- allPairs[ allPairs[,1] != allPairs[,2], ]

allPairs

[,1] [,2]

[1,] "regular" "small.class"

[2,] "regular" "regular.with.aide"

[3,] "regular.with.aide" "small.class"

Since oneway.test() applies a Welch correction by default, we can use that on our simulated data.

allResults <- apply(allPairs, 1, function(p) {

#http://stackoverflow.com/questions/28587498/post-hoc-tests-for-one-way-anova-with-welchs-correction-in-r

dat <- sim_data[sim_data$sim_classk %in% p, ]

ret <- oneway.test(sim_score ~ sim_classk, data = sim_data, na.action = na.omit)

ret$sim_classk <- p

ret

})

length(allResults)

[1] 3

allResults[[1]]

One-way analysis of means (not assuming equal variances)

data: sim_score and sim_classk

F = 1.7741, num df = 2.0, denom df = 1305.9, p-value = 0.170