I am trying to crawl the contents of a webpage, but I don't understand why I am getting this error: `http.client.IncompleteRead: IncompleteRead(2268 bytes read, 612 more expected)`

Here is the link I am trying to crawl: www.rc2.vd.ch

Here is the Python code I am using to crawl it:

import requests

from bs4 import BeautifulSoup

def spider_list():

url = 'http://www.rc2.vd.ch/registres/hrcintapp-pub/companySearch.action?lang=FR&init=false&advancedMode=false&printMode=false&ofpCriteria=N&actualDate=18.08.2015&rowMin=0&rowMax=0&listSize=0&go=none&showHeader=false&companyName=&companyNameSearchType=CONTAIN&companyOfsUid=&companyOfrcId13Part1=&companyOfrcId13Part2=&companyOfrcId13Part3=&limitResultCompanyActive=ACTIVE&searchRows=51&resultFormat=STD_COMP_NAME&display=Rechercher#result'

source_code = requests.get(url)

plain_text = source_code.text

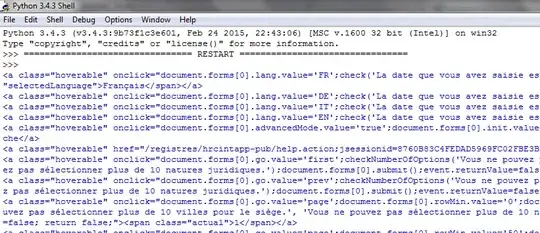

soup = BeautifulSoup(plain_text, 'html.parser')

for link in soup.findAll('a', {'class': 'hoverable'}):

print(link)

spider_list()

I tried it with another website's link and it works fine, so why can't I crawl this one?

If it's not possible with this code, how can I do it?
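One possible workaround, sketched with the standard library only and assuming the server really is cutting the response short, is to catch `http.client.IncompleteRead` and keep whatever did arrive. On recent Python 3 releases, when a chunked body is truncated, `http.client` attaches the bytes of all complete chunks to the exception's `partial` attribute. `fetch_tolerating_truncation` is a hypothetical helper name and the 30-second timeout is an arbitrary choice:

```python
import http.client
import urllib.request


def fetch_tolerating_truncation(url, timeout=30):
    """Hypothetical helper: return (body, complete) for url.

    If the server ends a chunked response early, http.client raises
    IncompleteRead; its .partial attribute holds the bytes decoded before
    the cut, which are returned together with complete=False.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read(), True
    except http.client.IncompleteRead as exc:
        # Keep the partial page instead of failing outright
        return exc.partial, False
```

The returned bytes can be passed to `BeautifulSoup(body, 'html.parser')` as in the code above. Any `hoverable` links that were in the missing tail of the page will simply be absent, so check the `complete` flag before relying on the result.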

------------ EDIT ------------

Here is the full error message:

    Traceback (most recent call last):
      File "C:/Users/Nuriddin/PycharmProjects/project/a.py", line 19, in <module>
        spider_list()
      File "C:/Users/Nuriddin/PycharmProjects/project/a.py", line 12, in spider_list
        source_code = requests.get(url)
      File "C:\Python34\lib\site-packages\requests\api.py", line 69, in get
        return request('get', url, params=params, **kwargs)
      File "C:\Python34\lib\site-packages\requests\api.py", line 50, in request
        response = session.request(method=method, url=url, **kwargs)
      File "C:\Python34\lib\site-packages\requests\sessions.py", line 465, in request
        resp = self.send(prep, **send_kwargs)
      File "C:\Python34\lib\site-packages\requests\sessions.py", line 605, in send
        r.content
      File "C:\Python34\lib\site-packages\requests\models.py", line 750, in content
        self._content = bytes().join(self.iter_content(CONTENT_CHUNK_SIZE)) or bytes()
      File "C:\Python34\lib\site-packages\requests\models.py", line 673, in generate
        for chunk in self.raw.stream(chunk_size, decode_content=True):
      File "C:\Python34\lib\site-packages\requests\packages\urllib3\response.py", line 303, in stream
        for line in self.read_chunked(amt, decode_content=decode_content):
      File "C:\Python34\lib\site-packages\requests\packages\urllib3\response.py", line 450, in read_chunked
        chunk = self._handle_chunk(amt)
      File "C:\Python34\lib\site-packages\requests\packages\urllib3\response.py", line 420, in _handle_chunk
        returned_chunk = self._fp._safe_read(self.chunk_left)
      File "C:\Python34\lib\http\client.py", line 664, in _safe_read
        raise IncompleteRead(b''.join(s), amt)
    http.client.IncompleteRead: IncompleteRead(4485 bytes read, 628 more expected)
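The last frames of the traceback (`read_chunked` → `_handle_chunk` → `_safe_read`) show that the server sent the page with `Transfer-Encoding: chunked`. Each chunk is preceded by a hexadecimal length line, and the client keeps reading until it has that many bytes. If the connection ends first, `http.client.IncompleteRead` reports how many bytes of the chunk being read had arrived and how many were still missing. The minimal decoder below reproduces that failure offline. `decode_chunked` is a hypothetical helper, not the urllib3 implementation, and it ignores chunk trailers:

```python
import io
from http.client import IncompleteRead


def decode_chunked(stream):
    """Hypothetical sketch: decode a chunked HTTP/1.1 body from a binary stream.

    Raises http.client.IncompleteRead if the stream ends before a chunk
    has delivered as many bytes as its size line announced.
    """
    body = bytearray()
    while True:
        size_line = stream.readline()
        if not size_line:
            # Connection closed before the terminating zero-size chunk
            raise IncompleteRead(bytes(body))
        # The size is hexadecimal; drop any chunk extension after ';'
        size = int(size_line.split(b";", 1)[0].strip(), 16)
        if size == 0:
            break  # last chunk (trailers are not handled in this sketch)
        data = stream.read(size)
        if len(data) < size:
            # Same shape as the error in the question: bytes of this chunk
            # received so far, and how many were still missing
            raise IncompleteRead(data, size - len(data))
        body += data
        stream.read(2)  # consume the CRLF that follows the chunk data
    return bytes(body)


# A well-formed body made of two chunks
print(decode_chunked(io.BytesIO(b"5\r\nhello\r\n6\r\n world\r\n0\r\n\r\n")))  # b'hello world'

# A body whose second line announces 0xa = 10 bytes but only 4 arrive
try:
    decode_chunked(io.BytesIO(b"a\r\nhell"))
except IncompleteRead as e:
    print(repr(e))  # IncompleteRead(4 bytes read, 6 more expected)
```

Since the error depends only on what the server sends over the wire, the same parsing code can succeed on one site and fail on another.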