I'm trying to get 3D coordinates of several points in space, but I'm getting odd results from both undistortPoints() and triangulatePoints().

Since the two cameras have different resolutions, I calibrated each one separately (RMS errors of 0.34 and 0.43). I then used stereoCalibrate() to get the stereo extrinsics (RMS 0.708), and finally stereoRectify() to get the remaining matrices (R1, R2, P1, P2). With those in hand I started processing the collected coordinates, but the results are weird.
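The RMS values above are reprojection errors in pixels. Below is a minimal sketch of one common definition: the square root of the mean squared distance between detected corners and reprojected model points. It uses plain C++ with hypothetical point lists instead of OpenCV types. It does not necessarily match OpenCV's exact bookkeeping, especially for stereoCalibrate(), which pools the errors of both cameras.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

struct Pt2 { double x, y; };

// RMS reprojection error: sqrt( sum_i |observed_i - projected_i|^2 / N ).
// 'observed' (detected corners) and 'projected' (model points pushed through
// the estimated camera) are hypothetical, equally sized lists.
double rmsReprojectionError(const std::vector<Pt2>& observed,
                            const std::vector<Pt2>& projected) {
    if (observed.empty() || observed.size() != projected.size())
        throw std::invalid_argument("point lists must be non-empty and equal in size");
    double sumSq = 0.0;
    for (std::size_t i = 0; i < observed.size(); ++i) {
        const double dx = observed[i].x - projected[i].x;
        const double dy = observed[i].y - projected[i].y;
        sumSq += dx * dx + dy * dy;
    }
    return std::sqrt(sumSq / static_cast<double>(observed.size()));
}
```

Because the errors are squared before averaging, a few badly detected corners can raise the RMS noticeably.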

For example, for the input (935, 262) undistortPoints() returns (1228.709125, 342.79841), and for another point, (934, 176), it returns (1227.9016, 292.4686). This is surprising, because both points are very close to the middle of the frame, where distortion is smallest. I didn't expect them to move by almost 300 pixels.
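For context, this is how I read the OpenCV documentation for undistortPoints(). When R1 and P1 are passed, the output is not "the same pixel without distortion" but the pixel position in the rectified camera described by P1. The point is normalized with the camera matrix, undistorted, rotated by R, and then re-projected with P's focal lengths and principal point. The sketch below reproduces only that documented mapping in plain C++, with distortion assumed to be zero. The `Intrinsics` struct, the `rotationY` helper and all numbers are hypothetical, and none of this is OpenCV's actual code.

```cpp
#include <array>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;

struct Intrinsics { double fx, fy, cx, cy; };   // hypothetical pinhole parameters
struct Pixel { double u, v; };

// Hypothetical helper: rotation by 'radians' about the camera's y axis.
Mat3 rotationY(double radians) {
    const double c = std::cos(radians), s = std::sin(radians);
    return {{{c, 0.0, s}, {0.0, 1.0, 0.0}, {-s, 0.0, c}}};
}

// Zero-distortion version of the documented undistortPoints(src, dst, K, D, R, P)
// mapping: normalize with K, rotate by R, re-project with P's fx, fy, cx, cy.
Pixel mapThroughRectification(Pixel in, const Intrinsics& K, const Mat3& R,
                              const Intrinsics& P) {
    const double x = (in.u - K.cx) / K.fx;   // normalized coordinates
    const double y = (in.v - K.cy) / K.fy;   // (distortion step skipped here)
    const double X = R[0][0] * x + R[0][1] * y + R[0][2];
    const double Y = R[1][0] * x + R[1][1] * y + R[1][2];
    const double W = R[2][0] * x + R[2][1] * y + R[2][2];
    return {(X / W) * P.fx + P.cx, (Y / W) * P.fy + P.cy};
}
```

With f = 1000 px, a rotation of about 5.7° (atan 0.1) around the y axis moves the image center by 100 px. A P1 whose principal point differs from the original cx shifts every point by that difference. So a shift of this size could come from rectification alone, even with no distortion.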

When these points are passed to triangulatePoints(), the results get even stranger. I measured the distances between three points A, B and C in real life (with a ruler), and also calculated the pixel distances between them in each picture. Because the points lay on a fairly flat plane, the two sets of lengths agreed in proportion: |AB|/|BC| was around 4/9 in both cases. The triangulated points, however, give |AB|/|BC| of 3/2 or even 4/2.
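A ratio of lengths does not depend on units, so the same computation applies to ruler measurements, pixel coordinates (with z = 0) and triangulated points. The triangulated coordinates come out in whatever unit was used for the calibration pattern. Below is a minimal sketch of that check. The `Vec3` struct and the function names are hypothetical.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

// Euclidean distance between two points.
double euclidean(const Vec3& a, const Vec3& b) {
    const double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// |AB| / |BC|; for 2D pixel points pass z = 0.
double segmentRatio(const Vec3& A, const Vec3& B, const Vec3& C) {
    return euclidean(A, B) / euclidean(B, C);
}
```

Scaling all three points by the same factor leaves the ratio unchanged. A mismatch between 4/9 and 3/2 therefore points to a geometric error, not a unit problem.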

This is my code:

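```cpp
#include <opencv2/calib3d.hpp>

// bokList/tylList (QStringList), xBok/yBok, xTyl/yTyl, j and the calibration
// matrices come from the surrounding program.
double pointsBok[2] = { bokList[j].toFloat() + xBok / 2, bokList[j + 1].toFloat() + yBok / 2 };
cv::Mat imgPointsBokProper = cv::Mat(1, 1, CV_64FC2, pointsBok);

double pointsTyl[2] = { tylList[j].toFloat() + xTyl / 2, tylList[j + 1].toFloat() + yTyl / 2 };
//cv::Mat imgPointsTyl = cv::Mat(2, 1, CV_64FC1, pointsTyl);
cv::Mat imgPointsTylProper = cv::Mat(1, 1, CV_64FC2, pointsTyl);

// Undistort and map into the rectified image coordinates defined by R1/P1 and R2/P2
cv::undistortPoints(imgPointsBokProper, imgPointsBokProper,
                    intrinsicOne, distCoeffsOne, R1, P1);
cv::undistortPoints(imgPointsTylProper, imgPointsTylProper,
                    intrinsicTwo, distCoeffsTwo, R2, P2);

// Triangulate the rectified points with the rectified projection matrices
cv::Mat point4D;
cv::triangulatePoints(P1, P2, imgPointsBokProper, imgPointsTylProper, point4D);

// Convert from homogeneous to Euclidean coordinates
double wResult = point4D.at<double>(3, 0);
double realX = point4D.at<double>(0, 0) / wResult;
double realY = point4D.at<double>(1, 0) / wResult;
double realZ = point4D.at<double>(2, 0) / wResult;
```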
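As far as I know, triangulatePoints() is a linear (DLT-style) method that returns homogeneous 4-vectors, which is why the code divides by W. To sanity-check the geometry independently of OpenCV, the sketch below uses a different but related technique. It is the inhomogeneous linear least-squares variant, which fixes W = 1 and solves the four equations with the normal equations. It is not OpenCV's implementation. The type aliases and function names are hypothetical. The pixel coordinates and the 3x4 matrices must refer to the same image space, for example rectified points with P1/P2.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>

using Mat34 = std::array<std::array<double, 4>, 3>;
using Mat3  = std::array<std::array<double, 3>, 3>;
using Vec3d = std::array<double, 3>;

// Determinant of a 3x3 matrix (cofactor expansion along the first row).
double det3(const Mat3& M) {
    return M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
         - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
         + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]);
}

// Inhomogeneous linear triangulation (W fixed to 1). Each view contributes
// u*(p3.X) - p1.X = 0 and v*(p3.X) - p2.X = 0, where pk is row k of its P.
Vec3d triangulateLinear(const Mat34& P1, const Mat34& P2,
                        double u1, double v1, double u2, double v2) {
    const Mat34* views[2] = {&P1, &P2};
    const double obs[2][2] = {{u1, v1}, {u2, v2}};
    double A[4][3];
    double b[4];
    for (int view = 0; view < 2; ++view) {
        const Mat34& P = *views[view];
        for (int k = 0; k < 2; ++k) {            // k = 0: u equation, k = 1: v equation
            const int row = 2 * view + k;
            const double c = obs[view][k];
            for (int j = 0; j < 3; ++j) A[row][j] = c * P[2][j] - P[k][j];
            b[row] = P[k][3] - c * P[2][3];      // constant term moved to the right
        }
    }
    // Least squares via the normal equations (A^T A) x = A^T b.
    Mat3 N{};
    Vec3d t{};
    for (int row = 0; row < 4; ++row)
        for (int i = 0; i < 3; ++i) {
            t[i] += A[row][i] * b[row];
            for (int j = 0; j < 3; ++j) N[i][j] += A[row][i] * A[row][j];
        }
    const double d = det3(N);
    if (std::fabs(d) < 1e-12) throw std::runtime_error("degenerate geometry");
    Vec3d X{};
    for (int col = 0; col < 3; ++col) {          // Cramer's rule, one column at a time
        Mat3 M = N;
        for (int i = 0; i < 3; ++i) M[i][col] = t[i];
        X[col] = det3(M) / d;
    }
    return X;
}
```

Feeding it the same rectified points and P1/P2 should give roughly the same 3D point as the OpenCV call. If the two disagree wildly, the inputs are probably inconsistent.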

The angles between the points are sometimes roughly right, but usually not:

`7.16816, 168.389, 4.44275` vs `5.85232, 170.422, 3.72561` (degrees)

`8.44743, 166.835, 4.71715` vs `12.4064, 158.132, 9.46158`

`9.34182, 165.388, 5.26994` vs `19.0785, 150.883, 10.0389`
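Each group of three values sums to 180°, so they appear to be the interior angles of triangle ABC. Below is a minimal sketch of how such angles can be computed from three points, in 3D or in 2D with z = 0. The `Pt3` struct and `triangleAngles` are hypothetical names.

```cpp
#include <array>
#include <cmath>

struct Pt3 { double x, y, z; };

// Interior angles (degrees) of triangle ABC at vertices A, B and C.
std::array<double, 3> triangleAngles(const Pt3& A, const Pt3& B, const Pt3& C) {
    const double pi = std::acos(-1.0);
    // Angle at vertex v between the rays v->p and v->q.
    auto angleAt = [pi](const Pt3& v, const Pt3& p, const Pt3& q) {
        const double ax = p.x - v.x, ay = p.y - v.y, az = p.z - v.z;
        const double bx = q.x - v.x, by = q.y - v.y, bz = q.z - v.z;
        const double dot = ax * bx + ay * by + az * bz;
        const double na = std::sqrt(ax * ax + ay * ay + az * az);
        const double nb = std::sqrt(bx * bx + by * by + bz * bz);
        double c = dot / (na * nb);
        c = std::fmax(-1.0, std::fmin(1.0, c));   // guard acos against rounding
        return std::acos(c) * 180.0 / pi;
    };
    return {angleAt(A, B, C), angleAt(B, A, C), angleAt(C, A, B)};
}
```

Like the length ratio, the angles are independent of scale, so they can be compared directly between the pixel measurements and the triangulated points.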

I've also tried running undistort() on the entire frame, but the results were just as odd. The distance between points B and C should stay essentially constant, yet this is what I get, frame by frame:

7502.42, 4876.46, 3230.13, 2740.67, 2239.95
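To put a number on "should stay constant", here is a minimal sketch that reports the largest relative deviation of a per-frame series from its median. For a rigid segment like BC this should stay close to 0. The function name is hypothetical, and the median is just one reasonable choice of reference.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Largest |value - median| / median over the series (taken by value so it can be sorted).
double maxRelativeDeviationFromMedian(std::vector<double> values) {
    if (values.empty()) throw std::invalid_argument("empty series");
    std::sort(values.begin(), values.end());
    const std::size_t n = values.size();
    const double median = (n % 2 == 1) ? values[n / 2]
                                       : 0.5 * (values[n / 2 - 1] + values[n / 2]);
    double worst = 0.0;
    for (double v : values) worst = std::max(worst, std::fabs(v - median) / median);
    return worst;
}
```

For the five B–C distances above this gives about 1.32. In other words, the largest value is more than twice the median.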

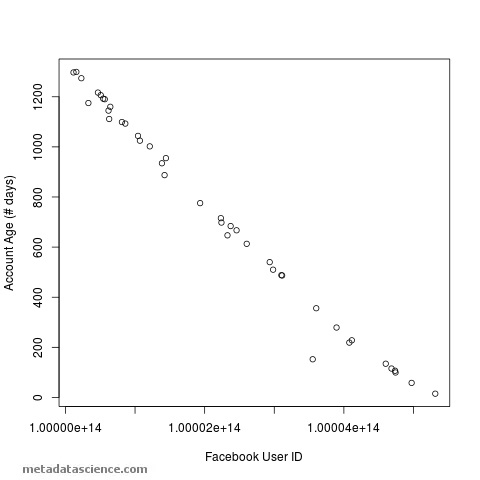

Pixel distance (bottom) vs real distance (top) - should be very similar:

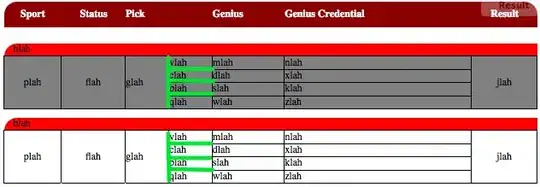

Angle:

Also, shouldn't both undistortPoints() and undistort() give the same results (another set of videos here)?