I encountered this problem while using Scrapy's FifoDiskQueue. On Windows, FifoDiskQueue causes directories and files to be created through one file descriptor and consumed (and, once the queue has no more messages, removed) through another.

Randomly, I get error messages like the following:

    2015-08-25 18:51:30 [scrapy] INFO: Error while handling downloader output
    Traceback (most recent call last):
      File "C:\Python27\lib\site-packages\twisted\internet\defer.py", line 588, in _runCallbacks
        current.result = callback(current.result, *args, **kw)
      File "C:\Python27\lib\site-packages\scrapy\core\engine.py", line 154, in _handle_downloader_output
        self.crawl(response, spider)
      File "C:\Python27\lib\site-packages\scrapy\core\engine.py", line 182, in crawl
        self.schedule(request, spider)
      File "C:\Python27\lib\site-packages\scrapy\core\engine.py", line 188, in schedule
        if not self.slot.scheduler.enqueue_request(request):
      File "C:\Python27\lib\site-packages\scrapy\core\scheduler.py", line 54, in enqueue_request
        dqok = self._dqpush(request)
      File "C:\Python27\lib\site-packages\scrapy\core\scheduler.py", line 83, in _dqpush
        self.dqs.push(reqd, -request.priority)
      File "C:\Python27\lib\site-packages\queuelib\pqueue.py", line 33, in push
        self.queues[priority] = self.qfactory(priority)
      File "C:\Python27\lib\site-packages\scrapy\core\scheduler.py", line 106, in _newdq
        return self.dqclass(join(self.dqdir, 'p%s' % priority))
      File "C:\Python27\lib\site-packages\queuelib\queue.py", line 43, in __init__
        os.makedirs(path)
      File "C:\Python27\lib\os.py", line 157, in makedirs
        mkdir(name, mode)
    WindowsError: [Error 5] : './sogou_job\\requests.queue\\p-50'

On Windows, Error 5 means access is denied. Many explanations on the web attribute it to a lack of administrative rights, like this MSDN post. But the cause here is not related to access rights: when I run the scrapy crawl command in an Administrator command prompt, the problem still occurs.
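To check which code an exception actually carries, its attributes can be inspected directly. This is only a minimal sketch: `describe_os_error` is a hypothetical helper name, and it assumes the Windows error code is exposed as the `winerror` attribute (present on Windows only, hence the `getattr` fallback).

```python
import errno


def describe_os_error(exc):
    """Summarise the error codes carried by an OSError/WindowsError."""
    # `winerror` only exists on Windows; fall back to None elsewhere.
    winerror = getattr(exc, "winerror", None)
    return {
        "winerror": winerror,
        "errno": exc.errno,
        # Windows error 5 is ERROR_ACCESS_DENIED; the C errno equivalent is EACCES.
        "access_denied": winerror == 5 or exc.errno == errno.EACCES,
    }
```

Calling it from an `except` block around `os.makedirs` would show whether every failure is the same access-denied code or a mix of different errors.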

I then created a small test like this to try on Windows and Linux:

    #!/usr/bin/python

    import os
    import shutil
    import time

    for i in range(1000):
        somedir = "testingdir"
        try:
            os.makedirs(somedir)
            with open(os.path.join(somedir, "testing.txt"), 'w') as out:
                out.write("Oh no")
            shutil.rmtree(somedir)
        except WindowsError as e:
            print 'round', i, e
            time.sleep(0.1)
            raise

When I run this, I get:

    round 13 [Error 5] : 'testingdir'
    Traceback (most recent call last):
      File "E:\FHT360\FHT360_Mobile\Source\keywordranks\test.py", line 10, in <module>
        os.makedirs(somedir)
      File "C:\Users\yj\Anaconda\lib\os.py", line 157, in makedirs
        mkdir(name, mode)
    WindowsError: [Error 5] : 'testingdir'

The failing round is different every time. If I remove the raise at the end, I get something like this:

    round 5 [Error 5] : 'testingdir'
    round 67 [Error 5] : 'testingdir'
    round 589 [Error 5] : 'testingdir'
    round 875 [Error 5] : 'testingdir'

It simply fails randomly, with a small probability, ONLY on Windows. I tried this test script in Cygwin and on Linux, and the error never happens there. I also tried the same code on another Windows machine, and the error occurs there too.
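For comparing machines, the variant without the raise can be wrapped in a function that collects the failing rounds instead of printing them one by one. This is only a sketch of the same test: `count_failures` and its parameters are hypothetical names, it catches `OSError` (the parent class of `WindowsError`) so that it also runs on Linux, and the cleanup after a failure is an addition that the original script does not have.

```python
from __future__ import print_function

import os
import shutil
import tempfile


def count_failures(rounds, base_dir):
    """Create, fill, and remove a directory `rounds` times.

    Returns a list of (round, exception) pairs for the rounds that failed.
    """
    somedir = os.path.join(base_dir, "testingdir")
    failed = []
    for i in range(rounds):
        try:
            os.makedirs(somedir)
            with open(os.path.join(somedir, "testing.txt"), "w") as out:
                out.write("Oh no")
            shutil.rmtree(somedir)
        except OSError as e:
            # WindowsError is a subclass of OSError, so Error 5 lands here too.
            failed.append((i, e))
            # Not in the original test: best-effort cleanup so that a
            # half-finished round does not make the next one fail as well.
            shutil.rmtree(somedir, ignore_errors=True)
    return failed


if __name__ == "__main__":
    base = tempfile.mkdtemp()
    try:
        for i, e in count_failures(1000, base):
            print("round", i, e)
    finally:
        shutil.rmtree(base, ignore_errors=True)
```

Raising the `rounds` argument makes it easier to see failures on machines where they are rare.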

What are possible reasons for this?

[Update]

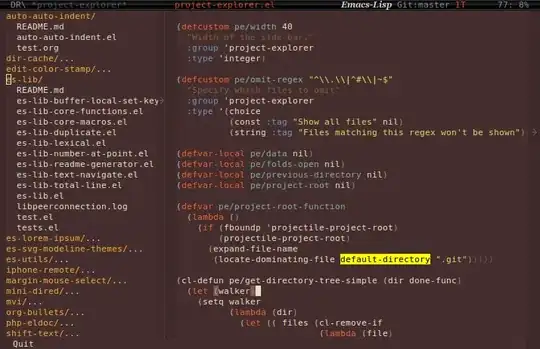

Screenshot of proof [管理员 means Administrator in Chinese]:

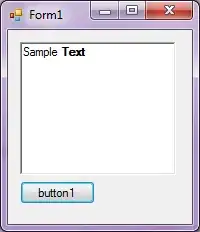

Also proof that the test case still fails in an administrator command prompt:

@pss said that he couldn't reproduce the issue. I tried it on our Windows 7 Server, with a freshly installed 64-bit Python 2.7.10. I had to set a really large upper bound for the number of rounds, and only started to see errors after round 19963: