I've installed Spark 1.4.1 (with R 3.1.3) and am currently testing SparkR to run statistical models. I'm able to run sample code such as:

Sys.setenv(SPARK_HOME = "C:\\hdp\\spark-1.4.1-bin-hadoop2.6")

.libPaths(c(file.path(Sys.getenv("SPARK_HOME"), "R", "lib"), .libPaths()))

# Load the SparkR library

library(SparkR)

# Create a spark context and a SQL context

sc <- sparkR.init(master = "local")

sqlContext <- sparkRSQL.init(sc)

# Create a SparkR DataFrame

DF <- createDataFrame(sqlContext, faithful)

sparkR.stop()

Next, I tried to install the rJava package for use with SparkR, but the installation fails with the error below.

> install.packages("rJava")

Installing package into 'C:/hdp/spark-1.4.1-bin-hadoop2.6/R/lib'

(as 'lib' is unspecified)

trying URL 'http://ftp.iitm.ac.in/cran/bin/windows/contrib/3.1/rJava_0.9-7.zip'

Content type 'text/html; charset="utf-8"' length 898 bytes

opened URL

downloaded 898 bytes

Error in read.dcf(file.path(pkgname, "DESCRIPTION"), c("Package", "Type")) :

cannot open the connection

In addition: Warning messages:

1: In unzip(zipname, exdir = dest) : error 1 in extracting from zip file

2: In read.dcf(file.path(pkgname, "DESCRIPTION"), c("Package", "Type")) :

cannot open compressed file 'rJava/DESCRIPTION', probable reason 'No such file or directory'
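One detail in the log above: the download is only 898 bytes and its content type is 'text/html', which suggests the mirror may have returned a web page rather than the actual zip archive. A minimal sketch for checking this on a downloaded file follows. The helper name `looks_like_zip` is hypothetical (it is not part of R or SparkR), and the demonstration uses a fake file rather than the real download.

```r
# Hypothetical helper (not part of R or SparkR): returns TRUE when the file
# starts with the ZIP magic bytes "PK" (0x50 0x4B), FALSE otherwise.
looks_like_zip <- function(path) {
  if (!file.exists(path)) return(FALSE)
  header <- readBin(path, what = "raw", n = 2L)
  identical(header, charToRaw("PK"))
}

# Self-contained demonstration: a small HTML page saved under a .zip name,
# similar to what the 898-byte download in the log might contain (assumption).
fake_download <- tempfile(fileext = ".zip")
writeLines("<html><body>Not Found</body></html>", fake_download)

is_zip <- looks_like_zip(fake_download)
print(is_zip)  # FALSE: the file starts with "<h", not "PK"
```

If the real downloaded file fails this check, trying a different CRAN mirror would be a reasonable next step.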

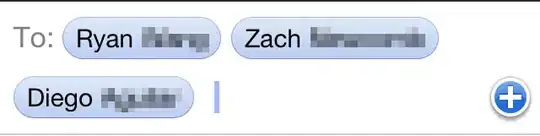

Also, when I run the SparkR command from the shell, it starts as a 32-bit application. I have highlighted the version info in the screenshot.
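To confirm the 32-bit observation from inside the session, something like the following could be run at the R prompt. It is only a sketch using base R. The `JAVA_HOME` line is included on the assumption that rJava needs a Java installation whose architecture matches the R process, so an empty or mismatched value may be relevant.

```r
# Report the architecture of the running R process (base R only).
arch <- R.version$arch                     # e.g. "i386" or "x86_64"
pointer_bytes <- .Machine$sizeof.pointer   # 4 on 32-bit builds, 8 on 64-bit builds

cat("arch:", arch, "\n")
cat("pointer size (bytes):", pointer_bytes, "\n")

# Assumption: rJava locates Java via JAVA_HOME or the registry on Windows.
cat("JAVA_HOME:", Sys.getenv("JAVA_HOME"), "\n")
```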

Please help me resolve this issue.