I'm looking for a way to take an input image and a neural network and output a class label for each pixel in the image (sky, grass, mountain, person, car, etc.).
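A network like this typically produces a score map with one channel per class. Each pixel's label is then the index of its highest-scoring channel. The minimal NumPy sketch below assumes a `(C, H, W)` score array. `scores_to_labels` and `CLASS_NAMES` are hypothetical names used for illustration; the real class list comes from the dataset the model was trained on.

```python
import numpy as np

# Hypothetical class list for illustration only; a real model's indices
# follow the label file of the dataset it was trained on.
CLASS_NAMES = ["background", "sky", "grass", "mountain", "person", "car"]


def scores_to_labels(scores: np.ndarray) -> np.ndarray:
    """Collapse a (C, H, W) score map into an (H, W) map of class indices.

    Each pixel is assigned the class whose channel has the highest score.
    """
    if scores.ndim != 3:
        raise ValueError("expected scores with shape (C, H, W)")
    # uint8 is enough as long as there are at most 256 classes
    return scores.argmax(axis=0).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo_scores = rng.standard_normal((len(CLASS_NAMES), 4, 5))
    label_map = scores_to_labels(demo_scores)
    print(label_map.shape)
```

Using `argmax` over the class axis is the usual way to turn per-class scores into a single label per pixel.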

I've set up Caffe (the future branch) and successfully run the FCN-32s Fully Convolutional Semantic Segmentation on PASCAL-Context model. However, I can't get clearly labeled images out of it.
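When checking a Caffe pipeline like this, the input preprocessing is worth comparing against what the model expects. Below is a minimal NumPy sketch. It assumes the model wants BGR channel order, per-channel mean subtraction and a `(3, H, W)` layout. The mean values are assumed to match the ones in the FCN reference inference script; verify them against the model's own files. `preprocess` and `BGR_MEAN` are hypothetical names.

```python
import numpy as np

# Per-channel BGR mean. Assumed to match the FCN reference inference script;
# check the specific model's files before relying on these numbers.
BGR_MEAN = np.array([104.00698793, 116.66876762, 122.67891434], dtype=np.float32)


def preprocess(rgb: np.ndarray) -> np.ndarray:
    """Turn an (H, W, 3) RGB image into a (3, H, W) float32 array for Caffe."""
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an RGB image with shape (H, W, 3)")
    x = rgb.astype(np.float32)
    x = x[:, :, ::-1]      # RGB -> BGR (assumed training channel order)
    x = x - BGR_MEAN       # subtract the mean, broadcast over every pixel
    return np.ascontiguousarray(x.transpose(2, 0, 1))  # HWC -> CHW
```

The returned array has the channel-first layout that Caffe data blobs use. A mismatch in channel order or mean typically degrades predictions across the whole image rather than only at object boundaries.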

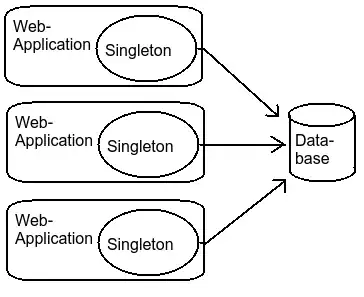

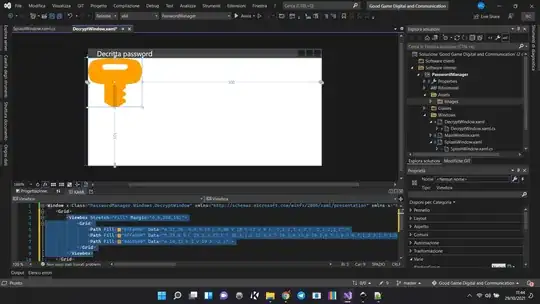

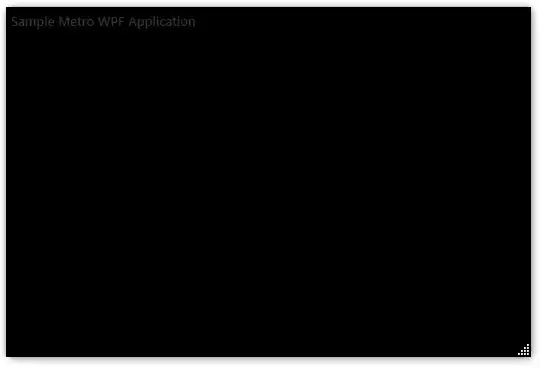

Images that visualizes my problem:

Input image

ground truth

And my result:

This might be a resolution issue. Any idea where I'm going wrong?
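To illustrate what a resolution limit looks like: FCN-32s predicts on a grid with an output stride of 32 (the "32s" in the name) and then upsamples to the input size. The sketch below is a simplification. It upsamples *labels* with nearest-neighbour repetition, whereas the network itself upsamples class scores inside the model. The point is only that region boundaries on a stride-32 grid can fall only every 32 pixels. `upsample_nearest` is a hypothetical helper.

```python
import numpy as np


def upsample_nearest(coarse: np.ndarray, stride: int) -> np.ndarray:
    """Nearest-neighbour upsample an (h, w) label map by an integer stride."""
    return np.repeat(np.repeat(coarse, stride, axis=0), stride, axis=1)


# A 2x2 coarse prediction becomes a 64x64 map made of four 32x32 blocks,
# so label boundaries can only fall on multiples of 32 pixels.
coarse = np.array([[1, 2], [3, 4]], dtype=np.uint8)
full = upsample_nearest(coarse, 32)
```

Smoother upsampling of scores softens the block edges but cannot recover detail that the coarse grid never resolved.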