Update: I just realized that I studied algorithms from MIT's videos. Here is the link to the first of those videos. Keep going to next lecture as far as you want.

Clearly, Log(n) has no value without fixing what n is and what base of log we are using. The purpose of mentioning log(n) so often is to help people understand the rate of growth of a particular algorithm or piece of code. It is only to help people see things in perspective. To build your perspective, see the comparison below:

1 < log n < n < n log n < n^2 < 2^n < n! < n^n

The line above says that after some value of n on the number line, the rate of growth of the above written functions is in the order mentioned there. This way, decision makers can decide which approach they want to take in solving their problem (and students can pass their Algorithm Design and Analysis exam).
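To see the "after some value of n" part concretely, here is a small Python sketch. The helper names `growth_values` and `is_strictly_increasing` are made up for illustration, and base 2 is assumed for the logarithm. It checks whether the chain above holds strictly at a given n.

```python
import math

def growth_values(n):
    """Return (label, value) pairs for the functions in the comparison, at a given n."""
    return [
        ("1", 1),
        ("log n", math.log2(n)),      # base 2 is an assumption; any base gives the same ordering eventually
        ("n", n),
        ("n log n", n * math.log2(n)),
        ("n^2", n ** 2),
        ("2^n", 2 ** n),
        ("n!", math.factorial(n)),
        ("n^n", n ** n),
    ]

def is_strictly_increasing(n):
    """True if every function in the chain is strictly smaller than the next one at this n."""
    values = [value for _, value in growth_values(n)]
    return all(a < b for a, b in zip(values, values[1:]))

for n in (2, 4, 8, 16):
    print(n, is_strictly_increasing(n))
```

For small n the chain is not yet strict (for example, 4^2 and 2^4 are both 16), which is exactly why the ordering is only claimed beyond some value of n.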

Coming to your question, when books say 'binary search's run time is Log(n)', essentially they mean that if you have n elements, the running time for binary search would be proportional to Log(n), and if you have 17n elements then you can expect the answer from your algorithm in a time duration that is proportional to Log(17n). In this case, the base of the Log function is 2 because in binary search we have at most 2 paths to pick from at every node.
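A quick way to convince yourself is to count the loop iterations. The sketch below is a plain iterative binary search (the function name `binary_search_steps` is just for illustration) that also returns how many halving steps it took. In the worst case that count is floor(log2(n)) + 1.

```python
import math

def binary_search_steps(sorted_items, target):
    """Return (index, steps); index is -1 if target is absent. steps counts loop iterations."""
    lo, hi = 0, len(sorted_items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            lo = mid + 1   # discard the left half
        else:
            hi = mid - 1   # discard the right half
    return -1, steps

for n in (1_000, 17_000):
    items = list(range(n))
    # Search for every element plus one missing value, and keep the largest step count.
    worst = max(binary_search_steps(items, t)[1] for t in range(n + 1))
    print(n, worst, math.floor(math.log2(n)) + 1)
```

Going from n to 17n elements adds only about log2(17), roughly 4, extra steps, because log(17n) = log(17) + log(n).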

Since the base of a log function can be converted from any number to any other number by multiplying by a constant, stating the base becomes irrelevant: in Big O notation, constant factors are ignored.
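As a small check of the base-conversion claim, the sketch below compares log base 2 with log base 10 for a few values of n. The helper name `log_ratio` is made up for this example. The ratio comes out the same for every n, namely log2(10), which is the constant that Big O throws away.

```python
import math

def log_ratio(n):
    """Ratio of log base 2 to log base 10 at n; by the change-of-base rule this equals log2(10)."""
    return math.log2(n) / math.log10(n)

for n in (10, 1_000, 1_000_000):
    print(n, log_ratio(n))
```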

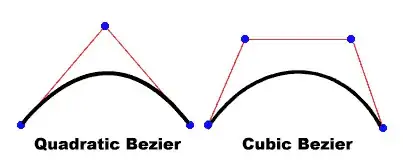

Coming to the answer to your second question, images will explain it the best.

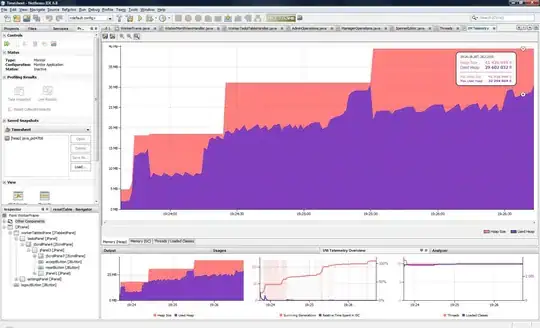

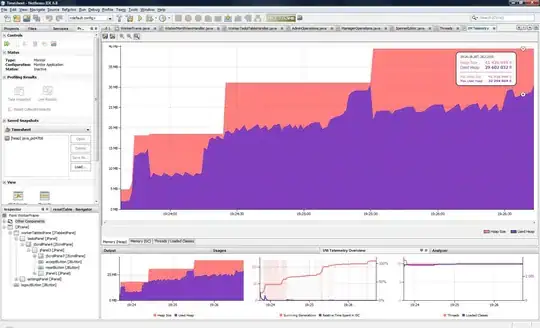

Big O is only about the upper bound on a function. In the image below, f(n) = O(g(n)). In other words, there are positive constants c and k, such that 0 ≤ f(n) ≤ cg(n) for all n ≥ k.

- The importance of k is that from 'k' onward the bound holds for every value of n. If we can't fix such a 'k', we cannot say that f(n) will always stay below c times the function mentioned in O(...).

- The importance of c is that constant factors do not matter: g(n) may be scaled by any fixed positive constant, so it is only the function inside O(...) that's really important.
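To get a feel for the roles of c and k, here is a rough numerical sketch. The function `first_k_where_bound_holds` and the example f(n) = 3n + 10 are my own illustrations, not part of any library. It scans a finite range, so it can only suggest a k; it does not prove the bound.

```python
def first_k_where_bound_holds(f, g, c, n_max):
    """Smallest k such that 0 <= f(n) <= c * g(n) for every integer n in [k, n_max].

    Returns None if the bound already fails at n_max. Only a finite range is
    checked, so this is evidence for a choice of k, not a proof.
    """
    k = None
    for n in range(n_max, 0, -1):   # walk downward from n_max
        if 0 <= f(n) <= c * g(n):
            k = n
        else:
            break                   # bound fails here, so k cannot go any lower
    return k

f = lambda n: 3 * n + 10   # hypothetical running time
g = lambda n: n            # candidate bound: is f(n) = O(n)?

print(first_k_where_bound_holds(f, g, c=4, n_max=1000))
print(first_k_where_bound_holds(f, g, c=3, n_max=1000))
```

With c = 4 the bound 3n + 10 ≤ 4n holds exactly when n ≥ 10, so a valid k exists. With c = 3 no k works, which shows that c and k have to be chosen together.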

Omega is simply the inverse of Big O. If f(n) = O(g(n)), then g(n) = Ω(f(n)). In other words, Ω() is about your function staying above a constant multiple of what is mentioned in Ω(...), from some other 'k' onward and for some other 'c'.

The pictorial visualization is

Finally, Big theta is about finding a mathematical function that grows at the same rate as your given function. But how do you prove that this function grows at the same rate as yours? By using two constant values.

Since it grows at the same rate as your given function, you should be able to find two constants 'c1' and 'c2' such that c1 * g(n) stays below your function f(n) and c2 * g(n) stays above it, for all sufficiently large n.

The idea behind Big theta is to provide a function with the same rate of growth. Note that there may be no constant 'c' that makes c * g(n) and f(n) overlap exactly. Nobody is concerned with that. The only concern is to be able to sandwich f(n) between two constant multiples of g(n), so that we can confidently say that we found the rate of growth of f(n).
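As a worked illustration of the sandwich (the functions here are my own example, not one from your question), take f(n) = 3n^2 + 2n and g(n) = n^2. Using 3 as the lower constant and 5 as the upper constant works from n = 1 onward, because 2n ≤ 2n^2 whenever n ≥ 1:

```latex
\[
f(n) = 3n^2 + 2n, \qquad g(n) = n^2
\]
\[
3\,g(n) = 3n^2 \;\le\; 3n^2 + 2n \;\le\; 3n^2 + 2n^2 = 5\,g(n)
\quad \text{for all } n \ge 1
\]
```

So f(n) = Θ(n^2), even though no single constant multiple of n^2 equals f(n) for every n.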

How to apply the above learned ideas to your question?

Let's take them one by one. You can use an online tool to plot these functions and see first hand how they behave as you go along the number line.

- f(n) = n - 100 and g(n) = n - 200

Here, the rate of growth can be found by differentiating both functions with respect to n: d(f(n))/dn = d(g(n))/dn = 1. Therefore, even though the values of f(n) and g(n) are different, their rate of growth is the same. Can you pick 'c1' and 'c2' such that c1 * g(n) < f(n) < c2 * g(n) for all sufficiently large n?

- f(n) = 100n + log(n) and g(n) = n + 2(log (n))

Differentiate and tell if you can relate the functions as Big O or Big Theta or Big Omega.

- f(n) = log (2n) and g(n) = log (3n)

Same as above.
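If you would rather experiment than plot, one rough check is to look at the ratio f(n) / g(n) for growing n. If it settles near a positive, finite constant, that suggests (but does not prove) that f(n) = Θ(g(n)). The sketch below does this for the three pairs; the `PAIRS` table and the `ratio` helper are names invented for this example, and base 2 is assumed for the logs.

```python
import math

# The three pairs from the question, as (f, g). Base 2 for log is an assumption.
PAIRS = {
    "n - 100 vs n - 200": (
        lambda n: n - 100,
        lambda n: n - 200,
    ),
    "100n + log n vs n + 2 log n": (
        lambda n: 100 * n + math.log2(n),
        lambda n: n + 2 * math.log2(n),
    ),
    "log(2n) vs log(3n)": (
        lambda n: math.log2(2 * n),
        lambda n: math.log2(3 * n),
    ),
}

def ratio(f, g, n):
    """f(n) / g(n); a ratio that settles near a positive constant hints at Big theta."""
    return f(n) / g(n)

for name, (f, g) in PAIRS.items():
    samples = [round(ratio(f, g, n), 4) for n in (10**3, 10**6, 10**9)]
    print(name, samples)
```

Once the ratio looks stable, pick one constant a little below it and one a little above it, then find the 'k' from which the sandwich holds.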

(The images are taken from different pages on this website: http://xlinux.nist.gov/dads/HTML/)

My experience: Try to compare the growth rates of a lot of different functions. Eventually you will get the hang of it and it will become very intuitive. With a week or two of concentrated effort, this concept will not remain esoteric for anyone.